Caching is a big part of how Cloudflare's CDN makes the Internet faster and more reliable. When a visitor to a customer's website requests an asset, we retrieve it from the customer's origin server. After that first request, in many cases, we cache that asset. Whenever anyone requests it again, we can serve it from one of our data centers close to them, dramatically speeding up load times.

Did you notice the small caveat? We cache after the first request in many cases, not all. One notable exception, from 2010 until now: requests with query strings. When a request came with a query string (think https://example.com/image.jpg?width=500, where ?width=500 is the query string), we needed to see it a whole three times before we would cache it on our default cache level. Weird!

This is a short tale of that strange exception, why we thought we needed it, and how, more than ten years later, we showed ourselves that we didn’t.

Two MISSes too many

To see the exception in action, here's a command we ran a couple of weeks ago. It requests an image hosted on example.com five times, pausing three seconds between requests, and prints each response's CF-Cache-Status header. That header tells us what the cache did while serving the request.

```
❯ for i in {1..5}; do curl -svo /dev/null example.com/image.jpg 2>&1 | grep -e 'CF-Cache-Status'; sleep 3; done
< CF-Cache-Status: MISS
< CF-Cache-Status: HIT
< CF-Cache-Status: HIT
< CF-Cache-Status: HIT
< CF-Cache-Status: HIT
```

The MISS means that we couldn’t find the asset in the cache and had to retrieve it from the origin server. On the HITs, we served the asset from cache.
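If you want to tally these statuses from a script rather than by eye, a minimal Python sketch could parse the `curl -v` output directly. It assumes the `< Name: value` format that `curl -v` prints for response headers, and it matches the header name case-insensitively because header casing can vary (HTTP/2 responses, for example, arrive lowercased). The function names are our own, chosen for illustration.

```python
import re

# `curl -v` prints each response header as "< Name: value".
# HTTP header names are case-insensitive, so match regardless of casing.
_CACHE_STATUS = re.compile(r"^<\s*cf-cache-status:\s*(\S+)", re.IGNORECASE)


def cache_statuses(curl_verbose_output: str) -> list[str]:
    """Return every CF-Cache-Status value found, in order, uppercased."""
    statuses = []
    for line in curl_verbose_output.splitlines():
        match = _CACHE_STATUS.match(line.strip())
        if match:
            statuses.append(match.group(1).upper())
    return statuses


def origin_fetches(statuses: list[str]) -> int:
    """Count MISSes, i.e. requests that had to go to the origin server."""
    return sum(1 for status in statuses if status == "MISS")


if __name__ == "__main__":
    sample = "\n".join(["< CF-Cache-Status: MISS"] + ["< CF-Cache-Status: HIT"] * 4)
    statuses = cache_statuses(sample)
    print(statuses, origin_fetches(statuses))
```

Saving a run's output to a file and passing its contents through `cache_statuses` and `origin_fetches` gives the number of trips to origin for that run.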

Now, here's the same request with a query string (?query=val) added:

```
❯ for i in {1..5}; do curl -svo /dev/null example.com/image.jpg\?query\=val 2>&1 | grep -e 'CF-Cache-Status'; sleep 3; done
< CF-Cache-Status: MISS
< CF-Cache-Status: MISS
< CF-Cache-Status: MISS
< CF-Cache-Status: HIT
< CF-Cache-Status: HIT
```

There they are: three MISSes, meaning two extra trips to the customer's origin!
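To make the quirk concrete, here's a toy model of it in Python. It is a simplification under our own assumptions, not Cloudflare's actual cache code: a URL with a query string is only written to cache once it has been requested `query_threshold` times, while other URLs are written on first sight. The class and parameter names are hypothetical, and the URL stands in for the full cache key.

```python
from collections import defaultdict
from urllib.parse import urlsplit


class ThresholdCache:
    """Toy cache that admits an asset only after N requests for it.

    Hypothetical model of the "third time's the charm" behavior:
    URLs with a query string need `query_threshold` requests before they
    are written to cache; all other URLs need `plain_threshold`.
    """

    def __init__(self, query_threshold: int = 3, plain_threshold: int = 1):
        self.query_threshold = query_threshold
        self.plain_threshold = plain_threshold
        self.requests_seen = defaultdict(int)  # URL -> MISSes so far
        self.stored = set()                    # URLs currently in cache
        self.disk_writes = 0

    def request(self, url: str) -> str:
        if url in self.stored:
            return "HIT"
        # Not cached: this request goes to origin (a MISS).
        self.requests_seen[url] += 1
        has_query = bool(urlsplit(url).query)
        threshold = self.query_threshold if has_query else self.plain_threshold
        # Write to cache only once the URL has been seen often enough.
        if self.requests_seen[url] >= threshold:
            self.stored.add(url)
            self.disk_writes += 1
        return "MISS"


if __name__ == "__main__":
    url = "https://example.com/image.jpg?query=val"
    old = ThresholdCache(query_threshold=3)
    print([old.request(url) for _ in range(5)])  # MISS x3, then HIT x2
    new = ThresholdCache(query_threshold=1)      # "cache at first sight"
    print([new.request(url) for _ in range(5)])  # MISS, then HIT x4
```

With `query_threshold=3`, the model reproduces the MISS, MISS, MISS, HIT, HIT sequence from the run above; setting it to 1 makes query string URLs behave like the plain URL.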

We traced this surprising behavior back to a git commit from Cloudflare’s earliest days in 2010. Since then, it has spawned chat threads, customer escalations, and even an internal Solutions Engineering blog post that christened it the “third time’s the charm” quirk.

It would be much less confusing if requests with query strings were cached the same way as requests without them, but why was the quirk here to begin with?

Unpopular queries

From an engineering perspective, forcing three MISSes for query strings can make sense as a way to protect ourselves from unnecessary disk writes.

That’s because for many requests with query strings in the URL, we may never see that URL requested again.

- Some query strings simply pass along data to the origin server that may not actually result in a different response from the base URL, for example, a visitor’s browser metadata. We end up caching the same response behind many different, potentially unique, cache keys.

- Some query strings are intended to bypass caches. These “cache busting” requests append randomized query strings in order to force pulling from the origin, a strategy that some ad managers use to count impressions.
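Why do these requests multiply cache entries? As a simplification, assume the cache key is the host, path, and full query string, so any change to the query string produces a new key. The hypothetical `cache_key` helper below shows how a cache-busting parameter gives every request its own key, while a stable parameter like ?width=500 keeps reusing one. A counter stands in for the random value a real cache buster would append, to keep the output deterministic.

```python
from urllib.parse import urlsplit


def cache_key(url: str) -> str:
    """Simplified cache key: host + path + full query string (if any)."""
    parts = urlsplit(url)
    key = parts.netloc + parts.path
    return f"{key}?{parts.query}" if parts.query else key


if __name__ == "__main__":
    base = "https://example.com/image.jpg"
    # A cache buster appends a fresh value to every request.
    busted = {cache_key(f"{base}?cb={i}") for i in range(1000)}
    # A stable query string repeats across visitors.
    stable = {cache_key(f"{base}?width=500") for _ in range(1000)}
    print(len(busted), len(stable))  # 1000 1
```

A thousand cache-busted requests produce a thousand distinct keys, each a candidate one-hit-wonder; a thousand requests with the same query string share a single key.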

Unpopular requests are not a new problem for us. Previously, we wrote about how we use an in-memory transient cache to store responses for requests we only ever see once (dubbed "one-hit-wonders") so that they are never written to disk. This transient cache, enabled for just a subset of traffic, reduces our disk writes by 20-25%.
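The general idea of keeping one-hit-wonders off disk can be sketched as a two-tier admission policy. This is our own simplified illustration, not a description of how Cloudflare's transient cache is actually implemented: a response first lands in a small, bounded in-memory tier and is written to disk only if it is requested again before being evicted from memory. `TwoTierCache` and its parameters are hypothetical.

```python
from collections import OrderedDict
from typing import Callable


class TwoTierCache:
    """Hypothetical admission policy: memory on first sight, disk on reuse."""

    def __init__(self, memory_capacity: int):
        self.memory_capacity = memory_capacity
        self.memory: OrderedDict[str, bytes] = OrderedDict()  # oldest first
        self.disk: dict[str, bytes] = {}
        self.disk_writes = 0

    def get(self, key: str, fetch_from_origin: Callable[[str], bytes]) -> tuple[bytes, str]:
        if key in self.disk:
            return self.disk[key], "HIT"
        if key in self.memory:
            # Seen before and still in memory: no longer a one-hit-wonder,
            # so promote it to disk.
            body = self.memory.pop(key)
            self.disk[key] = body
            self.disk_writes += 1
            return body, "HIT"
        # First sighting: fetch from origin and keep it in memory only.
        body = fetch_from_origin(key)
        self.memory[key] = body
        if len(self.memory) > self.memory_capacity:
            self.memory.popitem(last=False)  # evict the oldest entry
        return body, "MISS"


if __name__ == "__main__":
    def origin(key: str) -> bytes:
        return f"body of {key}".encode()

    cache = TwoTierCache(memory_capacity=10)
    for i in range(100):
        cache.get(f"/one-hit-wonder/{i}", origin)  # each requested once
    for _ in range(3):
        cache.get("/popular.jpg", origin)          # requested three times
    print(cache.disk_writes)  # 1
```

One hundred one-hit-wonders cause no disk writes at all; only the asset requested again is written.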

Was this behavior from 2010 giving us similar benefits? Since then, our network has grown from 10,000 Internet properties to 25 million. It was time to re-evaluate. Just how much would our disk writes increase if we cached on the first request? How much better would cache hit ratios be?

Hypotheses: querying the cache

Good news: our metrics showed that only around 3.5% of requests use the default cache level with query strings. Thus, we shouldn't expect disk writes to change by more than a few percentage points. Less good: cache hit rate shouldn't increase much either.
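The hit rate half of that napkin math can be written as a simple bound. If a fraction f of all requests are default-cache-level requests with query strings, and we assume the change only moves those requests from MISS to HIT without adding HITs anywhere else, then the overall hit ratio H can rise by at most f in absolute terms:

```latex
\Delta H
  = \frac{\Delta(\text{HITs})}{\text{total requests}}
  \le \frac{\text{affected requests}}{\text{total requests}}
  = f \approx 0.035
```

That caps the gain at roughly 3.5 percentage points, and the real gain should be smaller: the first request for each cache key is still a MISS, and newly written assets may evict others.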

On the other hand, we also found that a significant portion of these requests are images, which could potentially take up lots of disk space and hike up cache eviction rates. Enough napkin math: we needed to assess real-world data.

A/B testing: caching the queries

We were able to validate our hypotheses using an A/B test, where half the machines in a data center kept the old behavior and the other half cached query string requests on first sight. Because both halves served traffic over the same period, this enabled us to control for Internet traffic fluctuations over time and get more precise impact numbers.
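One way to get a stable, exactly even split like this is to rank machines by a hash of their ID and cut the list in half. This is a sketch under our own assumptions, since we don't describe here how machines were actually assigned; `split_machines`, the machine IDs, and the experiment name are hypothetical. Salting the hash with the experiment name keeps the assignment stable within one experiment while reshuffling it for the next.

```python
import hashlib


def split_machines(machine_ids: list[str], experiment: str) -> tuple[set[str], set[str]]:
    """Deterministically split machines into (control, treatment) halves."""

    def rank(machine_id: str) -> str:
        # Salting with the experiment name gives each experiment its own shuffle.
        return hashlib.sha256(f"{experiment}:{machine_id}".encode()).hexdigest()

    ranked = sorted(machine_ids, key=rank)
    half = len(ranked) // 2
    control = set(ranked[:half])    # keeps the old query string behavior
    treatment = set(ranked[half:])  # caches query string requests on first sight
    return control, treatment


if __name__ == "__main__":
    machines = [f"machine-{n:02d}" for n in range(10)]
    control, treatment = split_machines(machines, "query-string-first-sight")
    print(len(control), len(treatment))  # 5 5
```

Since both arms serve the same hours of traffic, daily swings affect them equally, and differences between their metrics can be attributed to the change itself.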

Other considerations: we limited the test to one data center handling a small slice of our traffic to minimize the impact of any negative side effects. We also disabled transient cache, which would otherwise absorb some of the I/O costs we expected to observe. With transient cache off, we would see the upper bound of the disk write increase.

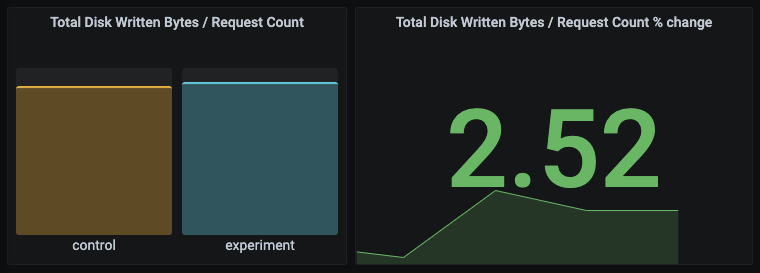

We ran this test for a couple of days and, as hypothesized, found a minor but acceptable increase in disk writes per request to the tune of 2.5%.

What's more, we saw a modest hit rate increase of around +3% on average for our Enterprise customers. More hits meant fewer trips to origin servers and thus bandwidth savings for customers: in this case, a 5% decrease in total bytes served from origin.
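Comparisons like these come down to a couple of ratios computed per arm over the same window. The sketch below uses made-up counts purely for illustration; they are not measurements from this experiment. It also shows that a hit rate change can be expressed either as an absolute difference in percentage points or as a relative change, and the two give different numbers.

```python
def hit_ratio(hits: int, misses: int) -> float:
    """Fraction of cacheable requests served from cache."""
    return hits / (hits + misses)


def relative_change(treatment: float, control: float) -> float:
    """(treatment - control) / control, e.g. -0.05 for a 5% decrease."""
    return (treatment - control) / control


if __name__ == "__main__":
    # Illustrative numbers only, not data from the experiment.
    control_hr = hit_ratio(hits=800, misses=200)    # 0.80
    treatment_hr = hit_ratio(hits=820, misses=180)  # 0.82
    print(f"{(treatment_hr - control_hr) * 100:.1f} percentage points")  # 2.0
    print(f"{relative_change(treatment_hr, control_hr):+.1%} relative")  # +2.5%
    origin_bytes_control, origin_bytes_treatment = 1_000_000, 960_000
    print(f"{relative_change(origin_bytes_treatment, origin_bytes_control):+.0%} origin bytes")  # -4%
```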

Note that we saw hit rate increases for certain customers across all plan types. Those with the biggest boost had a variety of query strings across different visitor populations (e.g. appending query strings such as ?resize=100px:*&output-quality=60 to optimize serving images for different screen sizes). A few additional HITs for many different, but still popular, cache keys really added up.

Revisiting old assumptions

What the aggregate hit rate increase implied was that for a significant portion of customers, our old query string behavior was too defensive. We were overestimating the proportion of query string requests that were one-hit-wonders.

Given that these requests are a relatively small percentage of total traffic, we didn't expect to see massive hit rate improvements. In fact, if the assumptions about many query string requests being one-hit-wonders were correct, we would hardly see any difference at all. (One-hit-wonders are actually "one MISS wonders" in the context of hit rate.) Instead, we saw hit rate increases proportional to the affected traffic percentage. This, along with the minor I/O cost increases, signaled that the benefits of removing the behavior would outweigh the benefits of keeping it.
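The one-hit-wonder argument can be checked with a little arithmetic. Suppose, as a simplifying model with no eviction, that an asset is stored on the T-th request for its cache key, so a key requested r times yields max(0, r - T) HITs. Lowering T from 3 to 1 then adds nothing for keys requested once and at most two HITs for every other key. `hits_for_key` and `extra_hits` are hypothetical helpers, and the request counts in the example are invented.

```python
def hits_for_key(requests: int, threshold: int) -> int:
    """HITs for one cache key requested `requests` times, if it is stored
    on the `threshold`-th request and never evicted (earlier requests MISS)."""
    return max(0, requests - threshold)


def extra_hits(request_counts: list[int], old_threshold: int = 3, new_threshold: int = 1) -> int:
    """Additional HITs from lowering the admission threshold, summed over keys."""
    return sum(
        hits_for_key(r, new_threshold) - hits_for_key(r, old_threshold)
        for r in request_counts
    )


if __name__ == "__main__":
    one_hit_wonders = [1] * 10_000  # e.g. cache-busting URLs, each seen once
    popular_variants = [5] * 200    # e.g. image-resize variants, each seen 5 times
    print(extra_hits(one_hit_wonders))   # 0
    print(extra_hits(popular_variants))  # 400
```

If query string traffic were mostly one-hit-wonders, the first total would dominate and the hit rate would barely move; many moderately popular keys, each gaining up to two HITs, are what make the difference visible.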

Using a data-driven approach, we determined that our caution around caching query string requests wasn't nearly as effective at keeping unpopular requests off disk as our newer efforts, such as transient cache. It was a good reminder for us to re-examine past assumptions from time to time and see if they still held.

Post-experiment, we gradually rolled out the change to verify that our cost expectations were met. At this time, we’re happy to report that we’ve left the “third time’s the charm” quirk behind for the history books. Long live “cache at first sight.”