Back when Cloudflare was created, over 10 years ago now, the dominant HTTP server used to power websites was Apache httpd. However, we decided to build our infrastructure using the then relatively new NGINX server.

There are many differences between the two, but crucially for us, the event loop architecture of NGINX was the key differentiator. In a nutshell, event loops work around the need to have one thread or process per connection by coalescing many of them in a single process. This reduces the need for expensive context switching by the operating system and also keeps memory usage predictable. Each connection is processed until it wants to do some I/O; at that point, the connection is queued until the I/O task is complete. During that time the event loop is available to process other in-flight connections, accept new clients, and the like. The loop uses a multiplexing system call like epoll (or kqueue) to be notified whenever an I/O task is complete among all the running connections.
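To make the idea concrete, here is a minimal sketch of such a loop in Python. It is not how NGINX is written (NGINX is C); it only shows the shape of the pattern. The `selectors` module picks epoll or kqueue where available, and the two socket pairs stand in for real client connections.

```python
import selectors
import socket

def run_event_loop(connections):
    """Serve several connections from one thread; return the bytes received per name."""
    sel = selectors.DefaultSelector()  # epoll on Linux, kqueue on BSD/macOS
    received = {}
    for name, sock in connections.items():
        sock.setblocking(False)
        sel.register(sock, selectors.EVENT_READ, data=name)
        received[name] = b""

    while sel.get_map():  # loop while at least one connection is registered
        # Single blocking point: sleep until any connection is readable.
        for key, _events in sel.select():
            chunk = key.fileobj.recv(4096)
            if chunk:
                received[key.data] += chunk
            else:  # empty read: the peer closed its end
                sel.unregister(key.fileobj)
                key.fileobj.close()
    sel.close()
    return received

def demo():
    # Two in-process "clients", each talking over its own socket pair.
    client_a, server_a = socket.socketpair()
    client_b, server_b = socket.socketpair()
    client_a.sendall(b"hello from a")
    client_b.sendall(b"hello from b")
    client_a.close()
    client_b.close()
    return run_event_loop({"a": server_a, "b": server_b})
```

The only place this loop ever waits is `sel.select()`. Everything else is expected to return quickly.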

In this article we will see that despite its advantages, the event loop model also has its limits, and falling back to a good old threaded architecture is sometimes a good move.

Photo credit: Flickr CC-BY-SA

The key assumption of an event loop architecture

For an event loop to work correctly, one key requirement has to hold: every piece of work has to finish quickly. This is because, as with other cooperative multitasking approaches, once a piece of work is started, there is no preemption mechanism.

For a proxy service like Cloudflare, this assumption works quite well as we spend most of the time waiting for the client to send data, or the origin to answer. This model is also widely successful for web applications that spend most of their time waiting for the database or other kinds of RPC.

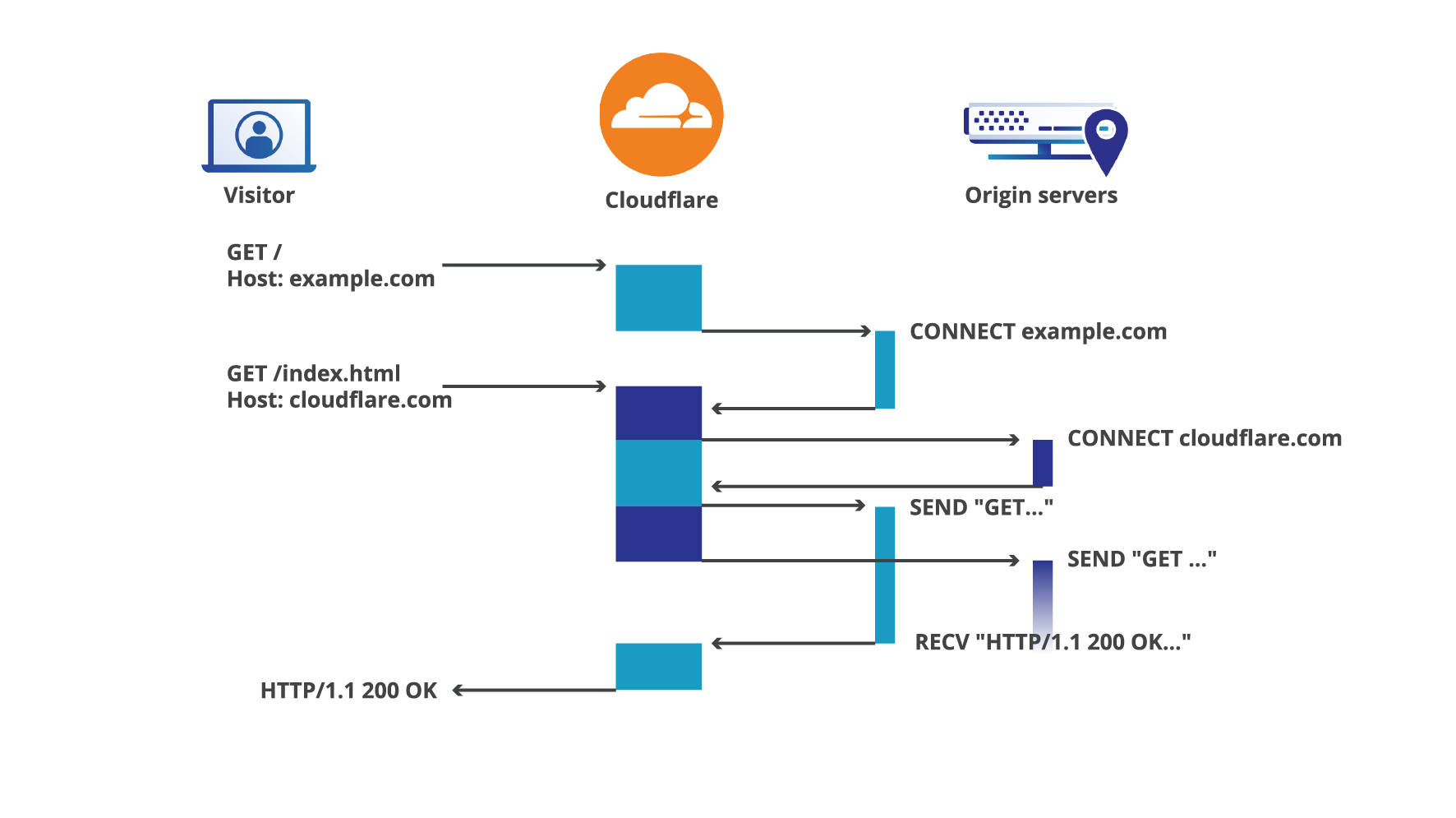

Let's look at a situation where two requests hit a Cloudflare server at the same time:

In this case, requests don't block each other too much… However, if one of the work units takes an unreasonable amount of time, it will start blocking other requests and the whole model will fall apart very quickly.

Such long operations might be CPU-intensive tasks, or blocking system calls. A common mistake is to call a library that uses blocking system calls internally: in this case an event-based program will perform a lot worse than a threaded one.
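The effect is easy to reproduce. The sketch below uses Python's `asyncio` as a stand-in event loop (an assumption of this example, not what NGINX uses) and measures how long a quick request takes, first on its own and then next to a request that makes a blocking call.

```python
import asyncio
import time

async def quick_request(started_at):
    """A well-behaved request: yields to the loop while 'waiting for I/O'."""
    await asyncio.sleep(0.01)
    return time.monotonic() - started_at

async def slow_request(block_for):
    """A misbehaving request: a blocking call that never yields to the loop."""
    time.sleep(block_for)  # stand-in for a CPU-heavy section or blocking syscall

async def measure(block_for):
    started_at = time.monotonic()
    quick = asyncio.create_task(quick_request(started_at))
    slow = asyncio.create_task(slow_request(block_for))
    await asyncio.gather(quick, slow)
    return quick.result()

def demo():
    alone = asyncio.run(measure(block_for=0.0))
    blocked = asyncio.run(measure(block_for=0.2))
    return alone, blocked
```

In the second run the quick request only needs about 10 ms of waiting, yet it cannot complete until the blocking call returns, because nothing can preempt it.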

Issues with the Cloudflare workload

As I said previously, most of the work done by Cloudflare servers to process an HTTP request is quick and fits the event loop model well. However, a few places might require more CPU. Most notably, our Web Application Firewall (WAF) is in charge of inspecting incoming requests to look for potential threats.

Although this process only takes a few milliseconds in the large majority of cases, a tiny portion of requests might need more time. It might be tempting to say that it is rare enough to be ignored. However, a typical worker process can have hundreds of requests in flight at the same time. This means that halting the event loop could slow down any of these unrelated requests as well. Keep in mind that the median web page requires around 70 requests to fully load, and pages with well over 100 assets are common. So looking at the average metrics is not very useful in this case. The 99th percentile is more relevant, as a regular page load will likely hit that case at some point.
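A quick back-of-the-envelope calculation shows why. Assuming, as a simplification, that requests are independent of each other, the chance that a page load contains at least one request slower than the 99th percentile is 1 - 0.99^n for n requests:

```python
def chance_of_hitting_tail(requests_per_page, percentile=0.99):
    """Probability that at least one request lands beyond the given percentile."""
    return 1.0 - percentile ** requests_per_page

def demo():
    # 1 request, the median page (around 70 requests), and a 100-asset page.
    return {n: round(chance_of_hitting_tail(n), 3) for n in (1, 70, 100)}
```

Under that assumption, a 70-request page hits the slow case roughly every other load, and a 100-request page does so more often than not.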

We want to make the web as fast as possible, even in edge cases. So we started to think about solutions to remove or mitigate that delay. There are quite a few options:

Increase the number of worker processes: this merely mitigates the problem, and creates more pressure on the kernel. Worse, spawning more workers does not cause a linear increase in capacity (even with spare CPU cores) because it also means that critical sections of code will have more lock contention.

Create a separate service dedicated to the WAF: this is a more realistic solution, as it makes more sense to adopt a thread-based model for CPU-intensive tasks, and a separate process allows that. However, it would make migration from the existing codebase more difficult, and adding more IPC also has costs: serialization/deserialization, latency, more error cases, etc.

Offload CPU-intensive tasks to a thread pool: this is a hybrid approach where we could just hand over the WAF processing to a dedicated thread. There are still some costs of doing that, but overall using a thread pool is a lot faster and simpler than calling an external service. Moreover, we keep roughly the same code, we just call it differently.

All of these solutions are valid and this list is far from exhaustive. As in many situations, there is no one right solution: we have to weigh the different tradeoffs. In this case, given that we already have working code in NGINX, and that the process must be as quick as possible, we chose the thread pool approach.
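As an illustration of what "we just call it differently" can look like, here is a sketch using Python's `asyncio` and a `ThreadPoolExecutor`. The function `inspect_request` is a hypothetical placeholder, not our WAF, and the heartbeat task exists only to show whether the loop kept running. One caveat specific to this sketch: in CPython, pure-Python CPU work in a thread still contends for the interpreter lock, so the slow step is simulated with a blocking sleep.

```python
import asyncio
import time
from concurrent.futures import ThreadPoolExecutor

def inspect_request(payload):
    """Hypothetical stand-in for a slow inspection step (not Cloudflare's WAF)."""
    time.sleep(0.2)  # blocking work; sleep releases the GIL, like native code would
    return "attack" not in payload

async def heartbeat(stop):
    """Counts how many times the event loop got to run while work was in flight."""
    ticks = 0
    while not stop.is_set():
        ticks += 1
        await asyncio.sleep(0.01)
    return ticks

async def handle(payload, pool):
    stop = asyncio.Event()
    ticker = asyncio.create_task(heartbeat(stop))
    loop = asyncio.get_running_loop()
    if pool is None:
        allowed = inspect_request(payload)  # runs inline and stalls the loop
    else:
        allowed = await loop.run_in_executor(pool, inspect_request, payload)
    stop.set()
    return allowed, await ticker

def demo():
    inline = asyncio.run(handle("GET /index.html", pool=None))
    with ThreadPoolExecutor(max_workers=2) as pool:
        offloaded = asyncio.run(handle("GET /index.html", pool=pool))
    return inline, offloaded
```

The inspection code is identical in both runs. Inline, the heartbeat never gets a chance to tick while the inspection runs; offloaded, the loop keeps ticking and could serve other requests in the meantime.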

NGINX already has thread pools!

Okay, I omitted one detail: NGINX already takes this hybrid approach for other reasons, which made our task easier.

It is not always easy to avoid blocking system calls. Filesystem operations on Linux are famously tricky in asynchronous mode: among other limitations, files have to be read using "direct" mode, bypassing the filesystem cache. Quite annoying for a static file server[1].

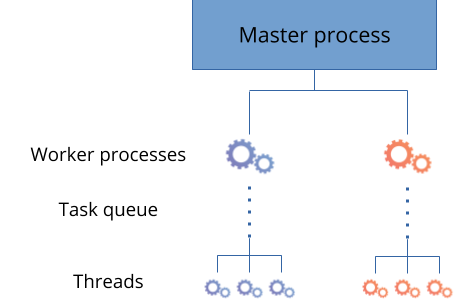

This is why thread pools were introduced back in 2015. The idea is quite simple: each worker process will spawn a group of threads dedicated to processing these synchronous operations. We use (and have improved) that feature ourselves with great success for our caching layer.

Whenever the event loop wants to have such an operation performed, it will push it into a queue that gets processed by the threads, and it will be notified with a result when it is done.
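The queue-and-notify pattern described above can be sketched in a few lines of Python. This is only an illustration of the pattern, not NGINX's C implementation: the socket pair used to wake the loop and all the names here are assumptions of the sketch.

```python
import queue
import selectors
import socket
import threading

def worker(tasks, done, wake_w):
    """Pool thread: take a task, run it, post the result, then wake the loop."""
    while True:
        task = tasks.get()
        if task is None:  # sentinel: shut down
            return
        task_id, func, arg = task
        done.put((task_id, func(arg)))
        wake_w.send(b"\0")  # one byte per completed task

def run(jobs, threads=2):
    tasks, done = queue.Queue(), queue.Queue()
    wake_r, wake_w = socket.socketpair()
    wake_r.setblocking(False)
    sel = selectors.DefaultSelector()
    sel.register(wake_r, selectors.EVENT_READ)
    pool = [threading.Thread(target=worker, args=(tasks, done, wake_w))
            for _ in range(threads)]
    for t in pool:
        t.start()

    for task_id, (func, arg) in enumerate(jobs):
        tasks.put((task_id, func, arg))  # event loop side: enqueue and move on

    results = {}
    while len(results) < len(jobs):
        sel.select()  # a real loop would also watch client sockets here
        for _ in wake_r.recv(4096):  # one completion per wake-up byte
            task_id, value = done.get()
            results[task_id] = value

    for _ in pool:
        tasks.put(None)
    for t in pool:
        t.join()
    sel.close()
    wake_r.close()
    wake_w.close()
    return [results[i] for i in range(len(jobs))]

def demo():
    return run([(len, "abc"), (sum, [1, 2, 3]), (str.upper, "waf")])
```

Because the wake-up channel is just another file descriptor, the loop learns about finished tasks through the same multiplexing call it already uses for network I/O.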

This approach is not unique to NGINX: libuv (that powers node.js) also uses thread pools for filesystem operations.

Can we repurpose this system to offload our CPU-intensive sections too? It turns out we can. The threading model here is quite simple: nearly nothing is shared between the main loop and the threads. Only a struct describing the operation to perform is sent to a thread, and a result is sent back to the event loop[2]. This share-nothing approach also has some drawbacks, notably memory usage: in our case, every thread has its own Lua VM to run the WAF code and its own compiled regular expression cache. Some of it can be improved, but as our code was written assuming there were no data races, changing that assumption would require a significant refactoring.
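Here is a small Python sketch of the share-nothing idea. The `Job` and `Result` types and the per-thread regex cache are hypothetical stand-ins for the struct and the per-thread state mentioned above; none of this is our actual code.

```python
import re
import threading
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass

_state = threading.local()  # each pool thread sees its own attributes

def init_thread():
    """Runs once per pool thread: build that thread's private regex cache."""
    _state.regex_cache = {}

@dataclass(frozen=True)
class Job:
    """The only thing handed to a thread: an immutable description of the work."""
    pattern: str
    body: str

@dataclass(frozen=True)
class Result:
    matched: bool
    thread_name: str
    cache_size: int

def run_job(job):
    cache = _state.regex_cache  # no lock needed: never visible to other threads
    compiled = cache.get(job.pattern)
    if compiled is None:
        compiled = cache[job.pattern] = re.compile(job.pattern)
    return Result(bool(compiled.search(job.body)),
                  threading.current_thread().name, len(cache))

def demo():
    jobs = [Job(r"<script", "<p>hello</p>"), Job(r"<script", "<script>x</script>")]
    with ThreadPoolExecutor(max_workers=2, initializer=init_thread) as pool:
        return list(pool.map(run_job, jobs))
```

The code needs no locking, which is the appeal. The price is visible too: if the two jobs land on different threads, the same pattern is compiled and stored twice.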

The best of both worlds? Not quite yet.

It's not a secret that epoll is very difficult to use correctly. In the past, my colleague Marek wrote extensively about its challenges. But more importantly in our case, its load balancing issues were a real problem.

In a nutshell, when multiple processes listen on the same socket, some processes will be busier than others. This is due to the fact that whenever the event loop sits idle, it is free to accept new connections.

Offloading the WAF to a thread pool frees up time on the event loop. For the unlucky processes, that means accepting even more connections, which will in turn need the WAF. Making this change on its own would only make a bad situation worse: the WAF tasks would start piling up in the job queue, waiting for the threads to process them.

Fortunately, this problem is quite well known and even if there is no solution yet in the upstream kernel, people have already attempted to fix it. We applied that patch, adding the EPOLLROUNDROBIN flag to our kernels quite some time ago and it played a crucial role in this case.
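To build some intuition, here is a deliberately crude toy model in Python. It is not the kernel's actual logic. It assumes one idle worker is woken per incoming connection and compares two wake-up orders: "lifo" picks the worker that went idle most recently, which is the assumption this sketch makes about the problematic behaviour, and "round_robin" picks the one that has been idle the longest. All parameters are made up.

```python
from collections import deque

def simulate(policy, workers=4, connections=1000, interval=1.0, service=0.4):
    """Count connections per worker when one idle worker is woken per arrival.

    policy="lifo": wake the worker that went idle most recently.
    policy="round_robin": wake the worker that has been idle the longest.
    """
    idle = deque(range(workers))  # right end = most recently idle
    busy = []                     # (finish_time, worker) pairs
    handled = [0] * workers
    for n in range(connections):
        now = n * interval
        # Workers whose connection finished go back to waiting, in finish order.
        for _finish, w in sorted(b for b in busy if b[0] <= now):
            idle.append(w)
        busy = [b for b in busy if b[0] > now]
        if not idle:
            continue              # toy model: drop when everyone is busy
        w = idle.pop() if policy == "lifo" else idle.popleft()
        handled[w] += 1
        busy.append((now + service, w))
    return handled

def demo():
    return simulate("lifo"), simulate("round_robin")
```

With these made-up parameters, each connection is finished before the next one arrives. Under "lifo" the same worker is therefore always the most recently idle one and takes every connection, while "round_robin" spreads them evenly.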

That's a lot of text… Show us the numbers!

Alright, that was a lot of talking. Let's have a look at actual numbers. I will examine how our servers behaved before (baseline) and after offloading our WAF into thread pools[3].

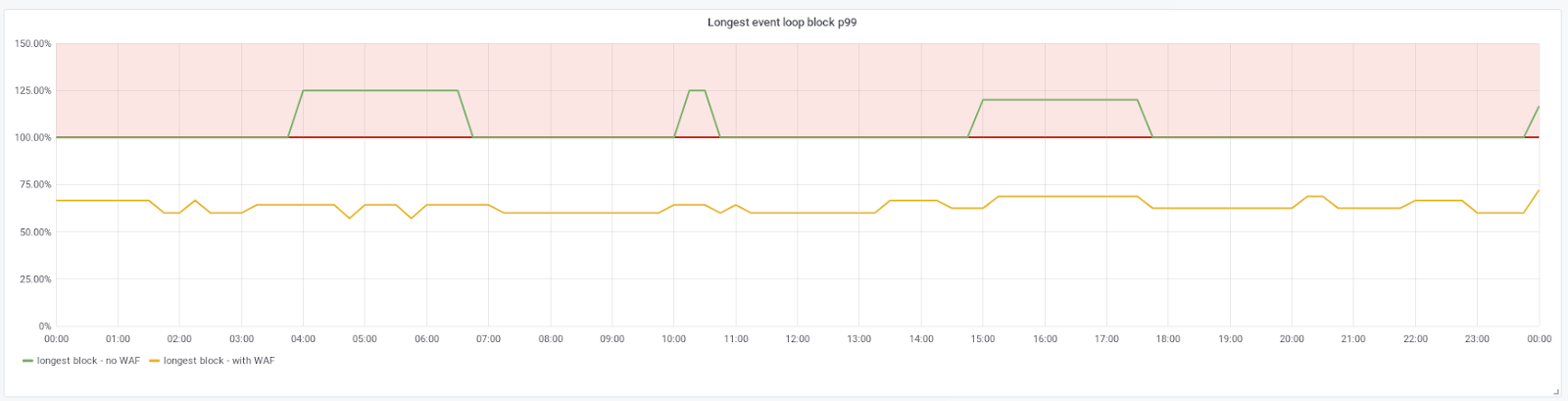

First let's have a look at the NGINX event loop itself. Did our change really improve the situation?

We have a metric telling us the maximum amount of time a request blocked the event loop during its processing. Let's look at its 99th percentile:

Using 100% as our baseline, we can see that this metric is 30% to 40% lower for the requests using the WAF when it is offloaded (yellow line). This is expected, as we just offloaded a fair chunk of processing to a thread. For other requests (green line), the situation seems a tiny bit worse, but this can be explained by the fact that the kernel has more threads to care about now, so the main event loop is more likely to be interrupted by the scheduler while it is running.
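For readers who want to track a similar metric in their own event loop, here is one possible way to do it in Python. This is not our instrumentation: `BlockTracker` and the nearest-rank `percentile` helper are hypothetical names for this sketch.

```python
import math
import time
from collections import defaultdict

class BlockTracker:
    """Records, per request, the longest single stretch it held the event loop."""

    def __init__(self, clock=time.monotonic):
        self.clock = clock
        self.max_block = defaultdict(float)

    def run(self, request_id, step):
        """Run one non-yielding step of a request and time it."""
        start = self.clock()
        try:
            return step()
        finally:
            elapsed = self.clock() - start
            self.max_block[request_id] = max(self.max_block[request_id], elapsed)

def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]
```

The loop would wrap every callback in `tracker.run(request_id, callback)`, and a reporting job would then call `percentile(tracker.max_block.values(), 99)` over a time window.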

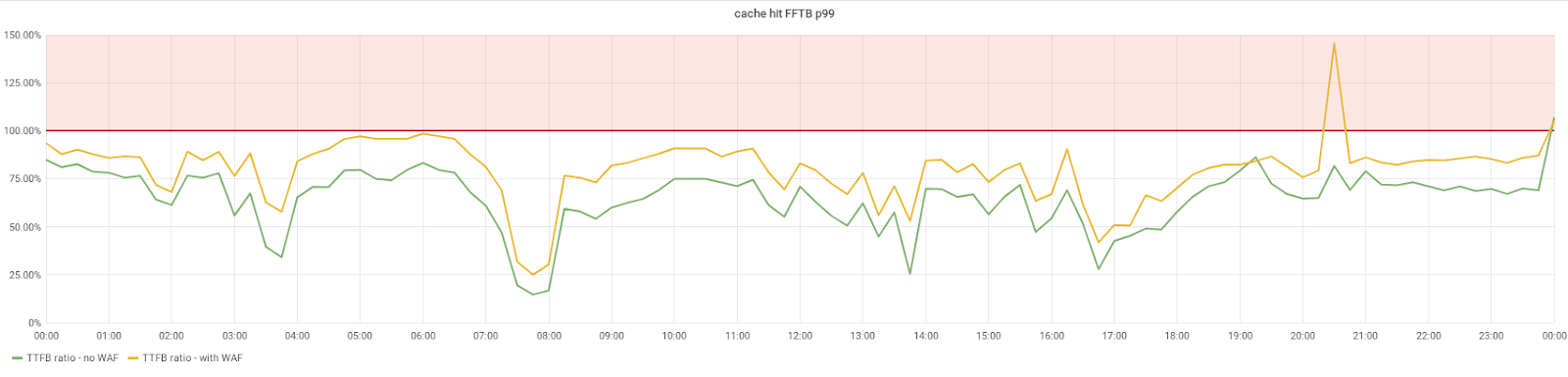

This is encouraging (at least for the requests with WAF enabled), but it doesn't really say what the value is for our customers, so let's look at more concrete metrics. First, the Time To First Byte (TTFB). The following graph only takes cache hits into account to reduce the noise due to other moving parts.

The 99th percentile is significantly reduced for both WAF and non-WAF requests. Overall, the gains from freeing up time on the event loop dwarf the slight penalty we saw on the previous graph.

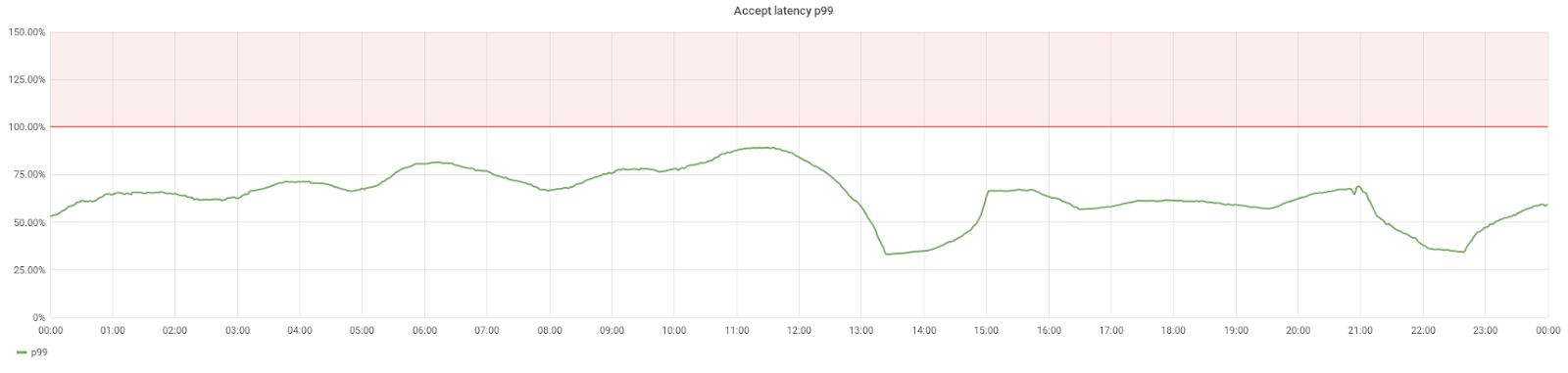

Let's finish by looking at another metric: the TTFB here starts only when a request has been accepted by the server. But now we can assume that these requests will be accepted faster as the event loop spends more time idle. Is that the case?

Success! The accept latency is also a lot lower. Not only are the requests faster, but the server is able to start processing them more quickly as well.

Overall, event-loop-based processing makes a lot of sense in many situations, especially in a microservices world. But this model is particularly vulnerable in cases where a lot of CPU time is necessary. There are a few different ways to mitigate this issue and as is often the case, the right answer is "it depends". In our case the thread pool approach was the best tradeoff: not only did it give visible improvements for our customers but it also allowed us to spread the load across more CPUs so we can more efficiently use our hardware.

But this tradeoff has many variables. Despite being too long to comfortably run on an event loop, the WAF is still very quick. For more complex tasks, having a separate service is usually a better option in my opinion. And there are a ton of other factors to take into account: security, development and deployment processes, etc.

We also saw that despite looking slightly worse in some micro metrics, the overall performance improved. This is something we all have to keep in mind when working on complex systems.

Are you interested in debugging and solving problems involving userspace, kernel and hardware? We're hiring!

[1] hopefully this pain will go away over time as we now have io_uring

[2] we still have to make sure the buffers are safely shared to avoid use-after-free and other data races; copying is the safest approach, but not necessarily the fastest.

[3] in every case, the metrics are taken over a period of 24h, exactly one week apart.