Configuration management is far from a solved problem. As organizations scale beyond a handful of administrators, having a secure, auditable, and self-service way of updating system settings becomes invaluable. Managing a Cloudflare account is no different. With dozens of products and hundreds of API endpoints, keeping track of current configuration and making bulk updates across multiple zones can be a challenge. While the Cloudflare Dashboard is great for analytics and feature exploration, any changes that could potentially impact users really should get a code review before being applied!

This is where Cloudflare's Terraform provider can come in handy. Built as a layer on top of the cloudflare-go library, the provider allows users to interface with the Cloudflare API using stateful Terraform resource declarations. Not only do we actively support this provider for customers, we make extensive use of it internally! In this post, we hope to provide some best practices we've learned about managing complex Cloudflare configurations in Terraform.

Why Terraform

Unsurprisingly, we find Cloudflare's products to be pretty useful for securing and enhancing the performance of services we deploy internally. We use DNS, WAF, Zero Trust, Email Security, Workers, and all manner of experimental new features throughout the company. This dogfooding allows us to battle-harden the services we provide to users and feed our desired features back to the product teams, all while running the backend of Cloudflare. But as Cloudflare grew, so did the complexity and importance of our configuration.

When we were a much smaller company, we only had a handful of accounts with designated administrators making changes on behalf of their colleagues. However, over time this handful of accounts grew into hundreds, each managed by a separate team. Independent accounts are useful in that they allow service owners to make modifications that can't impact others, but they come with overhead.

We faced the challenge of ensuring consistent security policies, up-to-date account memberships, and change visibility. While our accounts were still administered by kind human stewards, we had numerous instances of account members not being removed after they transferred to a different team. While this never became a security incident, it demonstrated the shortcomings of manually provisioning account memberships. In the case of a production service migration, the administrator executing the change would often hop on a video call and ask for others to triple-check an IP address, ruleset, or access policy update. It was an era of looking through the audit logs to see what broke a service.

We wanted to make it easier for developers and users to make the changes they wanted without having to reach out to an administrator. Defining our configuration in code using Terraform has allowed us to keep tabs on the complexity of configuration while improving visibility and change management practices. By dogfooding the Cloudflare Terraform provider, we've been able to ensure:

- Modifications to accounts are peer reviewed by the team that owns an account.

- Each change is tied to a user, commit, and a ticket explaining the rationale for the change.

- API Tokens are tied to service accounts rather than individual human users, meaning they survive team changes and offboarding.

- Account configuration can be audited by anyone at the company for current state, accuracy, and security without needing to add everyone as a member of every account.

- Large changes, such as enforcing hard keys, can be done rapidly, even in a single pull request.

- Configuration can be easily copied and reused across accounts to promote best practices and speed up development.

- We can use and iterate on our awesome provider and provide a better experience to other users (shoutout in particular to Jacob!).

Terraform in CI/CD

Terraform has a fairly mature open source ecosystem, built from years of running-in-production experience. Thus, there are a number of ways to make interacting with the system feel as comfortable to developers as git. One of these tools is Atlantis.

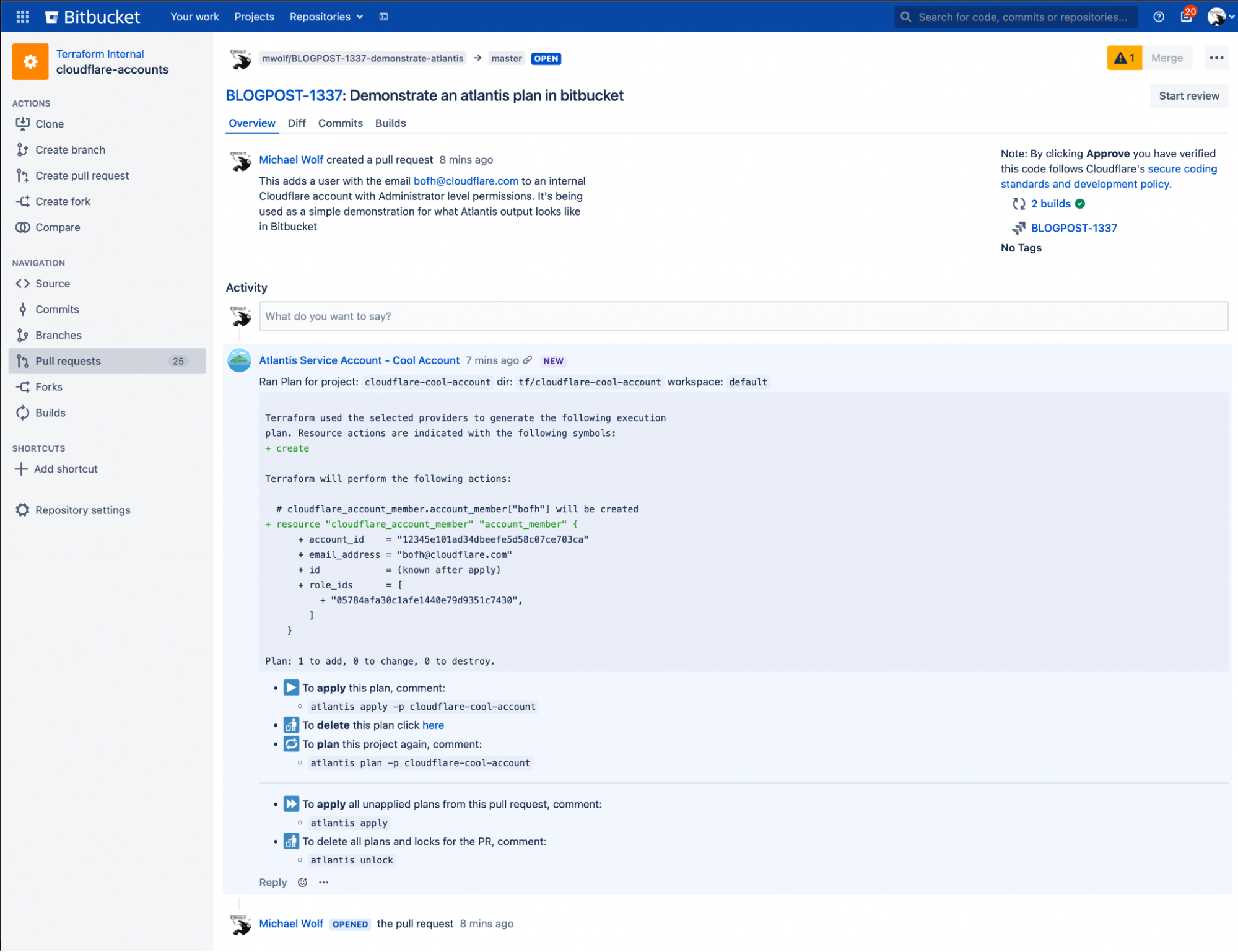

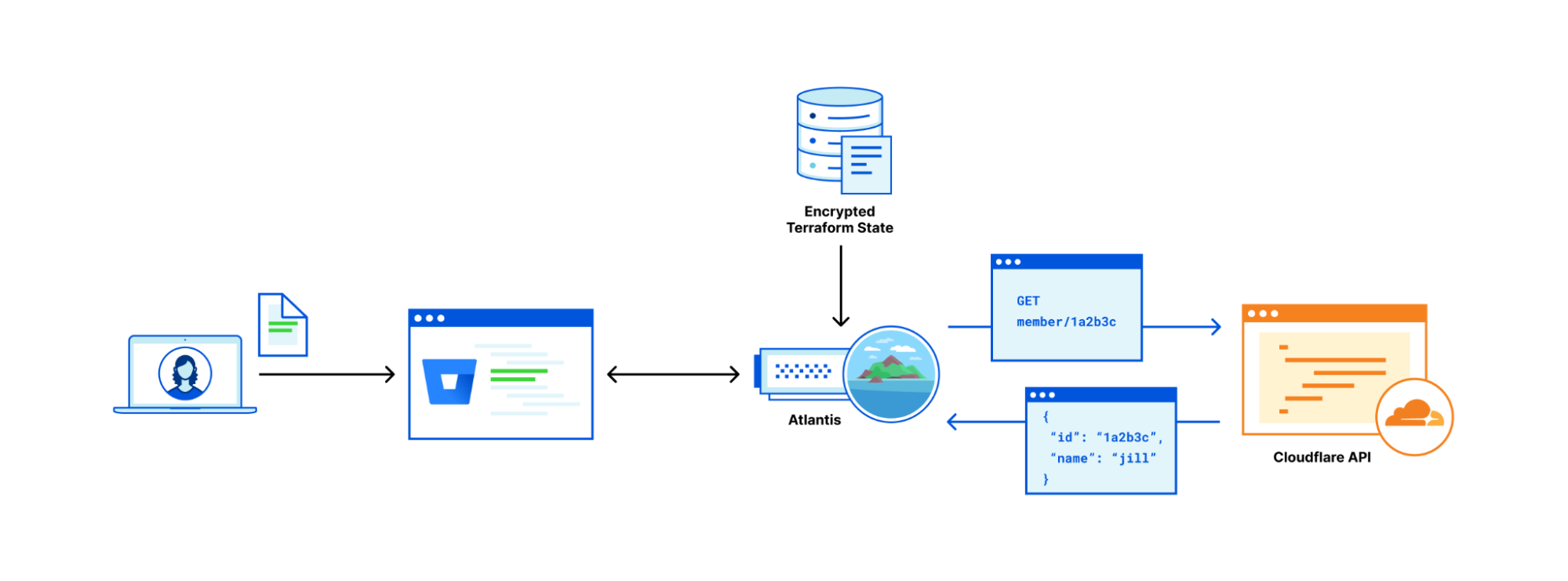

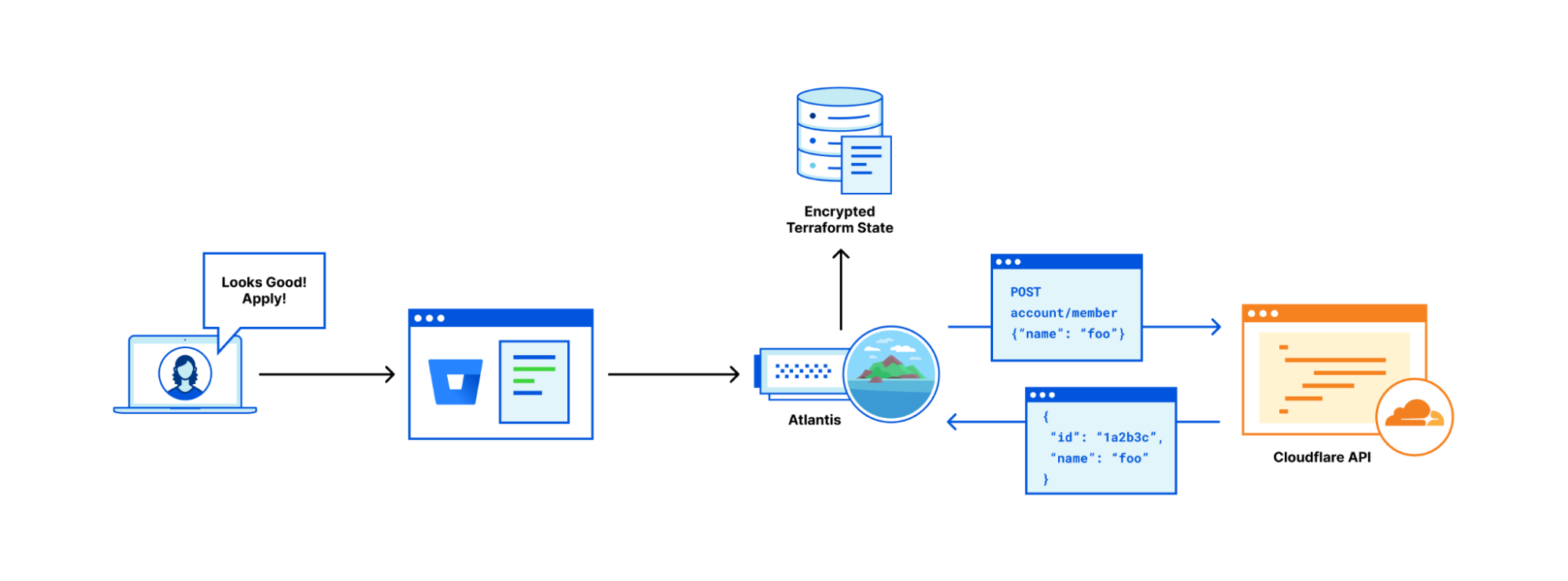

Atlantis acts as continuous integration/continuous delivery (CI/CD) for Terraform, fitting neatly into version control workflows and giving visibility into the changes being deployed in each code change. We use Atlantis to display Terraform plans (effectively a diff in configuration) within pull requests and apply the changes after the pull request has been approved. Having all the output from the Terraform provider in the comments of a pull request means there's no need to fiddle with the state locally or worry about where a state lock is coming from. Using Terraform CI/CD like this makes configuration management approachable to developers and non-technical folks alike.

In this example pull request, I'm adding a user to the cloudflare-cool-account (see the code in the next section). Once the PR is opened, Bitbucket posts a webhook to Atlantis, telling it to run a `terraform plan` using this branch. The resulting comment is placed in the pull request. Notice that this pull request can't be applied or merged yet as it doesn't have an approval! Once the pull request is approved, I would comment "atlantis apply", wait for Atlantis to post a comment containing the output of the command, and merge the pull request if that output looks correct.

Our Terraforming Cloudflare architecture consists of a monorepo with one directory (and tfstate) for each internally-owned Cloudflare account. This keeps all of our Cloudflare configuration centralized for easier oversight while remaining neatly organized.

It will be possible in a future (as of this writing) release to manage multiple Cloudflare accounts in the same tfstate, but we've found that accounts in our use generally map fairly neatly onto teams. Teams can be configured as CODEOWNERS for a given directory and be tagged on any pull requests to that account. With teams owning separate accounts and each account having a separate tfstate, it's rare for pull requests to get stuck waiting for a lock on the tfstate. Team-account-sized states remain relatively small, meaning that they also build quickly. Later on, we'll share some of the other optimizations we've made to keep the repo user-friendly.

Each of our Terraform states, given that they include secrets (including the API key!), is stored encrypted in an internal datastore. When a pull request is opened, Atlantis reaches out to a piece of middleware (that we may open source once it's cleaned up a bit) that retrieves and decrypts the state for processing. Once the pull request is applied, the state is encrypted and put away again.

We execute a daily Terraform apply across all tfstates to capture any unintended config drift and rotate certificates when they approach expiration. This prevents unrelated changes from popping up in pull request diffs and causing confusion. While we could run more frequent state applies to ensure Terraform remains firmly up to date, once-a-day rectification strikes a balance between code enforcement and avoiding state locks while users are running Terraform plans in pull requests.

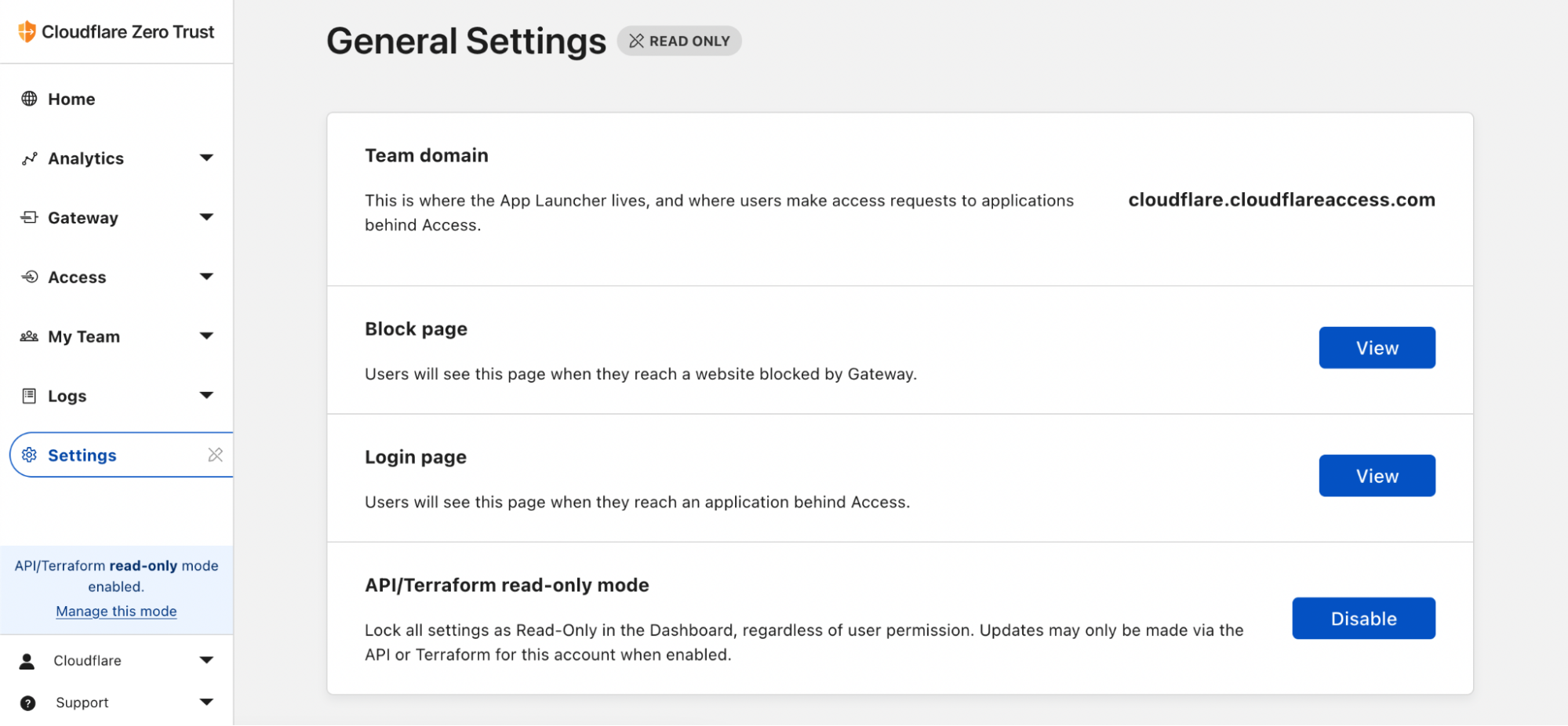

One of the problems that we encountered during our transition to Terraform is that folks were in the habit of making updates to configuration in the Dashboard and were still able to edit settings there. Thus, we didn't always have a single source of truth for our configuration in code. It also meant the change would get mysteriously (to them) reverted the next day! So that's why I'm excited to share a new Zero Trust Dashboard toggle that we've been turning on for our accounts internally: API/Terraform read-only mode.

With this button, we're able to politely prevent manual changes to your Cloudflare account’s Zero Trust configuration without removing permissions from the set of users who can fix settings manually in a break-glass emergency scenario. Check out how you can enable this setting in your Zero Trust organization.

Slick Snippets and Terraforming Recommendations

As our Terraform repository has matured, we've refined how we define Cloudflare resources in code. By finding a sweet spot between code reuse and readability, we've been able to minimize operational overhead and generally let users get their work done. Here are a couple of useful snippets that have been particularly valuable to us.

Account Membership

This allows for defining a fairly straightforward mapping of user emails to account privileges without code duplication or complex modules. We pull the list of human-friendly names of account roles from the API to show user permission assignments at a glance. Note: status is a new argument that allows for accounts to be added without sending an email to the user; perfect for when an organization is using SSO. (Thanks patrobinson for the feature request and mblackman for the PR!)

    # variables.tf
    data "cloudflare_account_roles" "my_account" {
      account_id = var.account_id
    }

    # Index the account's roles by their human-friendly name.
    locals {
      roles = {
        for role in data.cloudflare_account_roles.my_account.roles :
        role.name => role
      }
    }

    # members.tf
    locals {
      # Map of email local-part to role IDs, looked up by name in local.roles.
      users = {
        emerson = {
          roles = [
            local.roles["Administrator"].id
          ]
        }
        lucian = {
          roles = [
            local.roles["Super Administrator - All Privileges"].id
          ]
        }
        walruto = {
          roles = [
            local.roles["Audit Logs Viewer"].id,
            local.roles["Cloudflare Access"].id,
            local.roles["DNS"].id
          ]
        }
      }
    }

    resource "cloudflare_account_member" "account_member" {
      for_each      = local.users
      account_id    = var.account_id
      email_address = "${each.key}@cloudflare.com"
      role_ids      = each.value.roles
      status        = "accepted" # add the member without sending an invitation email
    }
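The snippets above reference var.account_id and assume a configured Cloudflare provider, neither of which is shown. A minimal sketch of the supporting declarations might look like the following. The file name and the cloudflare_api_token variable name are illustrative assumptions rather than our exact setup.

```hcl
# providers.tf (hypothetical file name)
terraform {
  required_providers {
    cloudflare = {
      source = "cloudflare/cloudflare"
      # Assumption: add a version constraint matching the provider release
      # you have tested these resources against.
    }
  }
}

variable "account_id" {
  description = "ID of the Cloudflare account managed by this directory"
  type        = string
}

variable "cloudflare_api_token" {
  description = "API token belonging to a service account (hypothetical variable name)"
  type        = string
  sensitive   = true # keeps the value out of plan output
}

provider "cloudflare" {
  api_token = var.cloudflare_api_token
}
```

Marking the token variable as sensitive keeps it out of plan output posted to pull requests, though it still ends up in the tfstate, which is one more reason to store the state encrypted.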

Defining Auto-Refreshing Access Service Tokens

The GitHub issue and provider change that enabled automatic Access service token refreshes actually came from a need inside Cloudflare. Here's how we ended up implementing it. We begin by defining a set of services that need to connect to our hostnames that are protected by Access. Each of these tokens is created and stored in a secret key-value store. Next, we reference those access tokens by ID in the target Access policies. Once this has run, the service owner or the service itself can retrieve the credentials from the data store. (Note: we're using Vault here, but any storage provider could be used in its place.)

    # tokens.tf
    locals {
      service_tokens = toset([
        "customer-service",   # TICKET-120
        "full-service",       # TICKET-128
        "quality-of-service", # TICKET-420
        "room-service"        # TICKET-927
      ])
    }

    resource "cloudflare_access_service_token" "token" {
      for_each             = local.service_tokens
      account_id           = var.account_id
      name                 = each.key
      min_days_for_renewal = 30
    }

    # Store each token's credentials in Vault so the service owner can retrieve them.
    resource "vault_generic_secret" "access_service_token" {
      for_each     = local.service_tokens
      path         = "kv/secrets/${each.key}/access_service_token"
      disable_read = true
      data_json = jsonencode({
        client_id     = cloudflare_access_service_token.token[each.key].client_id,
        client_secret = cloudflare_access_service_token.token[each.key].client_secret
      })
    }

    # super_cool_hostname.tf
    resource "cloudflare_access_application" "super_cool_hostname" {
      account_id = var.account_id
      name       = "Super Cool Hostname"
      domain     = "supercool.hostname.tld"
    }

    resource "cloudflare_access_policy" "super_cool_hostname_service_access" {
      application_id = cloudflare_access_application.super_cool_hostname.id
      zone_id        = data.cloudflare_zone.hostname_tld.id
      name           = "TICKET-927 Allow Room Service"
      decision       = "non_identity"
      precedence     = 1

      include {
        service_token = [cloudflare_access_service_token.token["room-service"].id]
      }
    }
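The policy above references data.cloudflare_zone.hostname_tld, which isn't defined in the snippet. A minimal sketch of that lookup follows, assuming the zone is named hostname.tld, that it lives in the account identified by var.account_id, and that the provider version in use accepts a name argument on the cloudflare_zone data source.

```hcl
# Look up the zone by name so other resources can reference its ID.
# Assumption: "hostname.tld" is the zone that contains supercool.hostname.tld.
data "cloudflare_zone" "hostname_tld" {
  account_id = var.account_id
  name       = "hostname.tld"
}
```

Resolving the zone through a data source keeps zone IDs out of the code, so the same files can be copied between accounts with only the zone name changed.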

mTLS (Authenticated Origin Pulls) certificate creation and rotation

To further defense-in-depth objectives, we've been rolling out mTLS throughout our internal systems. One of the places where we can take advantage of our Terraform provider is in defining AOP (Authenticated Origin Pulls) certificates to lock down the Cloudflare-edge-to-origin connection. Anyone who has managed certificates of any kind can speak to the headaches they can cause. Having certificate configurations in Terraform takes out the manual work of rotation and expiration.

In this example we're defining hostname-level AOP as opposed to zone-level AOP. We start by cutting a certificate for each hostname. Once again we're using Vault for certificate creation, but other backends could be used just as well. This certificate is created with a 30-day expiration (not shown), but set to renew automatically. This means that once the time to expiration drops to min_seconds_remaining, the resource is automatically tainted and replaced on the next Terraform run. We like to give this automation plenty of room before expiration to account for holiday seasons and to avoid alerting humans, which happens once a certificate is seven days from expiration. For the rest of this snippet, the certificate is uploaded to Cloudflare and the ID from that upload is then placed in the AOP configuration for the given hostname. The create_before_destroy meta-argument ensures that the replacement certificate is uploaded successfully before we remove the certificate that's currently in place.

    locals {
      hostnames = toset([
        "supercool.hostname.tld",
        "thatsafinelooking.hostname.tld"
      ])
    }

    resource "vault_pki_secret_backend_cert" "vault_cert" {
      for_each              = local.hostnames
      backend               = "pki-aop"
      name                  = "default"
      auto_renew            = true
      common_name           = "${each.key}.aop.pki.vault.cfdata.org"
      min_seconds_remaining = 864000 # renew when there are 10 days left before expiration
    }

    resource "cloudflare_authenticated_origin_pulls_certificate" "aop_cert" {
      for_each    = local.hostnames
      zone_id     = data.cloudflare_zone.hostname_tld.id
      type        = "per-hostname"
      certificate = vault_pki_secret_backend_cert.vault_cert[each.key].certificate
      private_key = vault_pki_secret_backend_cert.vault_cert[each.key].private_key

      # Upload the replacement certificate before removing the current one.
      lifecycle {
        create_before_destroy = true
      }
    }

    resource "cloudflare_authenticated_origin_pulls" "aop_config" {
      for_each                               = local.hostnames
      zone_id                                = data.cloudflare_zone.hostname_tld.id
      authenticated_origin_pulls_certificate = cloudflare_authenticated_origin_pulls_certificate.aop_cert[each.key].id
      hostname                               = each.key
      enabled                                = true
    }

Terraform recommendations

The comfortable automation that we've achieved thus far did not come without some hair-pulling. Below are a few of the learnings that have allowed us to maintain the repository as a side project run by two engineers (shoutout David).

Store your state somewhere safe

It feels worth repeating that the tfstate contains secrets, including any API keys you're using with providers, and that its default location is the current working directory. It's very easy to accidentally commit this to source control. By defining a backend, the state can be stored with a cloud storage provider, in a secure location on a filesystem, in a database, or even Cloudflare Workers! Wherever the state is stored, make sure it is encrypted.
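As a rough illustration of defining a backend, the sketch below keeps the state in an object store with encryption at rest enabled. It is not a description of our internal setup, which relies on the middleware described earlier. The S3 backend is used only as a familiar example, and the bucket name, key path, and region are hypothetical.

```hcl
terraform {
  # Assumption: S3 is chosen purely for illustration; any backend that
  # supports encryption at rest would serve the same purpose.
  backend "s3" {
    bucket  = "example-terraform-state"                   # hypothetical bucket name
    key     = "cloudflare/cool-account/terraform.tfstate" # hypothetical path, one per account
    region  = "us-east-1"                                 # hypothetical region
    encrypt = true                                        # server-side encryption of the state object
  }
}
```

With one key per account directory, each account keeps its own tfstate, matching the single-account-to-tfstate layout described above. Adding *.tfstate to the repository's ignore file is a cheap extra guard against committing a local state by accident.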

Choose simplicity, avoid modules

Modules are intended to reduce code repetition for well-defined chunks of systems such as "I want three clusters of whizz-bangs in locations A, C, and F." If cloud-computing was like Factorio, this would be amazing. However, financial, technical, and physical constraints mean subtle differences in systems develop over time such as "I want fewer whizz-bangs in C and the whizz-bangs in F should get a different network topology." In Terraform, implementation logic of these requirements is moved to the module code. HCL is absolutely not the place to write decipherable conditionals. While module versioning prevents having to make every change backwards-compatible, keeping module usage up-to-date becomes another chore for repository maintainers.

An understandable codebase is a user-friendly codebase. It's rare that a deeply cryptic error will return from a misconfigured resource definition. Conversely, modules, especially custom ones, can lead users on a head-scratching adventure. This kind of system can't scale with confused users.

A few well-designed for_each loops (we're obviously fans) can achieve objectives similar to those of modules without the complexity. It's fine to use plain old resources too! Especially when there are more than a handful of varying arguments, it's more valuable for the configuration to be clear than to be eloquent. For example: an account_member resource makes sense in a for_each loop, but a page_rule probably doesn't.
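To sketch the "plain old resource" side of that trade-off: a page rule tends to carry its own target, priority, and set of actions, so it is arguably clearer written out on its own than forced through a loop. The resource name, target, and action values here are hypothetical, and the syntax assumes a provider version in which actions is a nested block.

```hcl
# A standalone page rule: every argument is visible at a glance,
# with no map lookups or conditionals to decode.
resource "cloudflare_page_rule" "bypass_cache_for_api" {
  zone_id  = data.cloudflare_zone.hostname_tld.id
  target   = "supercool.hostname.tld/api/*" # hypothetical URL pattern
  priority = 1

  actions {
    cache_level = "bypass"
  }
}
```

If a second rule needs a different combination of actions, copying and editing this block is usually easier to review than threading optional attributes through a shared loop.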

Keep tfstates small

Maintaining quick pull-request-to-plan turnaround keeps Terraform from feeling like a burden on users' time. Furthermore, if a plan is taking 30 minutes to run, a rollback in the case of an issue would also take 30 minutes! This post describes our single-account-to-tfstate model.

However, after noticing slow-downs coming from the large number of AOP certificate configurations in a big zone, we moved that code to a separate tfstate. We were able to make this change because AOP configuration is fairly self-contained. To ensure there would be no fighting between the states, we kept the API token permissions for each tfstate mutually exclusive. Our Atlantis Terraform plans typically finish in under five minutes. If it feels impossible to keep a tfstate small enough to plan in a reasonable amount of time, it may be worth considering a different tool for that bit of configuration management.

Know when to use a different tool

Terraform isn't a panacea. We generally don't use Terraform to manage DNS records, for example. We use OctoDNS which integrates more neatly into our infrastructure automation. DNS records can quickly add up to long state-rendering times and are often dynamically generated from systems that Terraform doesn't know about. To avoid conflicts, there should only ever be one system publishing changes to DNS records.

We also haven't figured out a maintainable way of managing Workers scripts in Terraform. When a .js script in the Terraform directory changes, Terraform isn't aware of it. This means a change needs to occur somewhere else in a .tf file before the plan diff is generated. It likely isn't an unsolvable issue, but doesn't seem particularly worth cramming into Terraform when there are better options for Worker management like Wrangler.

Looking forward

We're continuing to invest in the Cloudflare Terraforming experience both for our own use and for the benefit of our users. With the provider, we hope to offer a comfortable and scalable method of interacting with Cloudflare products. Hopefully this post has presented some useful suggestions to anyone interested in adopting Cloudflare-configuration-as-code. Don't hesitate to reach out on the GitHub project for troubleshooting, bug reports, or feature requests. For more in-depth documentation on using Terraform to manage your Cloudflare account, read on here. And if you don't have a Cloudflare account already, click here to get started.