This post is also available in Simplified Chinese (简体中文), Traditional Chinese (繁體中文), Japanese (日本語) and Korean (한국어).

Thanksgiving might be a US holiday (and one of our favorites — we have many things to be thankful for!). Many people get excited about the food or deals, but for me as a developer, it’s also always been a nice quiet holiday to hack around and play with new tech. So in that spirit, we're thrilled to announce that Stable Diffusion and Code Llama are now available as part of Workers AI, running in over 100 cities across Cloudflare’s global network.

As many AI fans are aware, Stable Diffusion is the groundbreaking image-generation model that can conjure images based on text input. Code Llama is a powerful language model optimized for generating programming code.

For more of the fun details, read on, or head over to the developer docs to get started!

Generating images with Stable Diffusion

Stability AI launched Stable Diffusion XL 1.0 (SDXL) this past summer. You can read more about it here, but we’ll briefly mention some really cool aspects.

First off, “Distinct images can be prompted without having any particular ‘feel’ imparted by the model, ensuring absolute freedom of style”. This is great as it gives you a blank canvas as a developer, or should I say artist.

Additionally, it’s “particularly well-tuned for vibrant and accurate colors, with better contrast, lighting, and shadows than its predecessor, all in native 1024x1024 resolution.” With the advancements in today's cameras (or phone cameras), quality images are table stakes, and it’s nice to see these models keeping up.

Getting started with Workers AI + SDXL (via API) couldn’t be easier. Check out the example below:

```bash
curl -X POST \
  "https://api.cloudflare.com/client/v4/accounts/{account-id}/ai/run/@cf/stabilityai/stable-diffusion-xl-base-1.0" \
  -H "Authorization: Bearer {api-token}" \
  -H "Content-Type: application/json" \
  -d '{ "prompt": "A happy llama running through an orange cloud" }' \
  -o 'happy-llama.png'
```

And here is our happy llama:

You can also do this in a Worker:

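```javascript
import { Ai } from '@cloudflare/ai';

// Enable env.AI for your Worker by adding the AI binding to your wrangler.toml file:
// [ai]
// binding = "AI"
export default {
  async fetch(request, env) {
    const ai = new Ai(env.AI);

    // The SDXL model returns the generated image as raw PNG data
    const response = await ai.run('@cf/stabilityai/stable-diffusion-xl-base-1.0', {
      prompt: 'A happy llama running through an orange cloud'
    });

    // Stream the image straight back to the client
    return new Response(response, {
      headers: {
        'content-type': 'image/png',
      },
    });
  },
};
```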
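If you would rather call the REST API from a script than from curl, the SDXL request above can be sketched in Python using only the standard library. This is a minimal sketch, not an official client: the helper names `build_sdxl_request` and `generate_image` are hypothetical, and it assumes, as the curl example's `-o 'happy-llama.png'` implies, that a successful response body is the raw PNG image.

```python
import json
import urllib.request

# Model identifier taken from the curl example above
SDXL_MODEL = "@cf/stabilityai/stable-diffusion-xl-base-1.0"


def build_sdxl_request(account_id: str, api_token: str, prompt: str) -> urllib.request.Request:
    """Build the same POST request the curl example sends (hypothetical helper)."""
    url = f"https://api.cloudflare.com/client/v4/accounts/{account_id}/ai/run/{SDXL_MODEL}"
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def generate_image(account_id: str, api_token: str, prompt: str, out_path: str) -> int:
    """Send the request and save the image; returns the number of bytes written.

    Assumes a successful response body is the raw image (as `curl -o` implies).
    urlopen raises urllib.error.HTTPError for non-2xx responses.
    """
    request = build_sdxl_request(account_id, api_token, prompt)
    with urllib.request.urlopen(request) as response:
        content_type = response.headers.get("Content-Type", "")
        data = response.read()
    # Guard against writing a JSON error body into a .png file
    if not content_type.startswith("image/"):
        raise RuntimeError(f"Expected an image, got {content_type!r}: {data[:200]!r}")
    with open(out_path, "wb") as f:
        f.write(data)
    return len(data)
```

For example, `generate_image(os.environ["ACCOUNT_ID"], os.environ["API_TOKEN"], "A happy llama running through an orange cloud", "happy-llama.png")` should write the same file as the curl command. Splitting request construction from the network call keeps the request easy to inspect on its own.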

Generate code with Code Llama

If you’re not into generating art, then maybe you can have some fun with code. Code Llama, also released by Meta this past summer, is built on top of Llama 2 but optimized to understand and generate code in many popular languages (Python, C++, Java, PHP, TypeScript/JavaScript, C#, and Bash).

You can use it to help generate code for a tough problem you’re facing, or to help you understand code, which is perfect if you are picking up an unfamiliar existing codebase.
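The "understand code" use case needs nothing more than a different prompt. As a minimal sketch (the helper names `build_explain_payload` and `run_url` and the prompt wording are our own hypothetical choices, not a required format), you could wrap an unfamiliar snippet in an explanation request and POST the resulting JSON body to the same `/ai/run/` endpoint used in the examples below:

```python
import json

# Model identifier used by the Code Llama examples in this post
CODE_LLAMA_MODEL = "@hf/thebloke/codellama-7b-instruct-awq"


def build_explain_payload(snippet: str, language: str) -> str:
    """Wrap a code snippet in an instruction asking the model to explain it.

    The prompt wording is illustrative only; tweak it to suit your codebase.
    """
    prompt = (
        f"Explain what the following {language} code does, step by step, "
        f"and point out any potential bugs:\n\n{snippet}"
    )
    return json.dumps({"prompt": prompt})


def run_url(account_id: str, model: str = CODE_LLAMA_MODEL) -> str:
    """REST endpoint for running a Workers AI model, as in the curl examples."""
    return f"https://api.cloudflare.com/client/v4/accounts/{account_id}/ai/run/{model}"
```

For instance, the string returned by `build_explain_payload(source_text, "Python")` could be sent as `payload` in the Python example below, in place of the priority-queue prompt.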

And just like all the other models, generating code with Workers AI is really easy.

From a Worker:

```javascript
import { Ai } from '@cloudflare/ai';

// Enable env.AI for your Worker by adding the AI binding to your wrangler.toml file:
// [ai]
// binding = "AI"
export default {
  async fetch(request, env) {
    const ai = new Ai(env.AI);

    const response = await ai.run('@hf/thebloke/codellama-7b-instruct-awq', {
      prompt: 'In JavaScript, define a priority queue class. The constructor must take a function that is called on each object to determine its priority.'
    });

    // The generated text is in the `response` field of the returned object
    return Response.json(response);
  },
};
```

Using curl:

```bash
curl -X POST \
  "https://api.cloudflare.com/client/v4/accounts/{account-id}/ai/run/@hf/thebloke/codellama-7b-instruct-awq" \
  -H "Authorization: Bearer {api-token}" \
  -H "Content-Type: application/json" \
  -d '{ "prompt": "In JavaScript, define a priority queue class. The constructor must take a function that is called on each object to determine its priority." }'
```

Using Python:

```python
#!/usr/bin/env python3
import json
import os

import requests

# Credentials are read from environment variables
ACCOUNT_ID = os.environ["ACCOUNT_ID"]
API_TOKEN = os.environ["API_TOKEN"]
MODEL = "@hf/thebloke/codellama-7b-instruct-awq"

prompt = """In JavaScript, define a priority queue class. The constructor must take a function that is called on each object to determine its priority."""

url = f"https://api.cloudflare.com/client/v4/accounts/{ACCOUNT_ID}/ai/run/{MODEL}"
headers = {
    "Authorization": f"Bearer {API_TOKEN}",
    "Content-Type": "application/json",
}
payload = json.dumps({"prompt": prompt})

print(url)
r = requests.post(url, data=payload, headers=headers)
j = r.json()

# On success the generated text is in result.response; `result` may be null
# on errors, so fall back to an empty dict and dump the full reply instead
result = j.get("result") or {}
if "response" in result:
    print(result["response"])
else:
    print(json.dumps(j, indent=2))
```
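The script above only checks for `result.response`. A slightly more defensive variant could also look at the `success` and `errors` fields. This is a sketch assuming the standard Cloudflare API v4 response envelope (`success`, `errors`, `messages`, `result`); `extract_response` is a hypothetical helper name, not part of any SDK.

```python
from typing import Any


def extract_response(envelope: dict[str, Any]) -> str:
    """Return the generated text from a Workers AI REST reply, or raise with the API's errors.

    Assumes the Cloudflare v4 envelope: {"success": bool, "errors": [...], "result": {...}}.
    """
    if not envelope.get("success", False):
        errors = envelope.get("errors") or []
        details = "; ".join(f"{e.get('code')}: {e.get('message')}" for e in errors)
        raise RuntimeError(f"Workers AI request failed: {details or 'no error details'}")
    # Guard against a missing or null `result`
    result = envelope.get("result") or {}
    if "response" not in result:
        raise KeyError("reply has no result.response field")
    return result["response"]
```

In the script above, `print(extract_response(r.json()))` could replace the final `if`/`else`, turning API failures into an exception that carries the error codes and messages.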

Workers AI inference now available in 100 cities

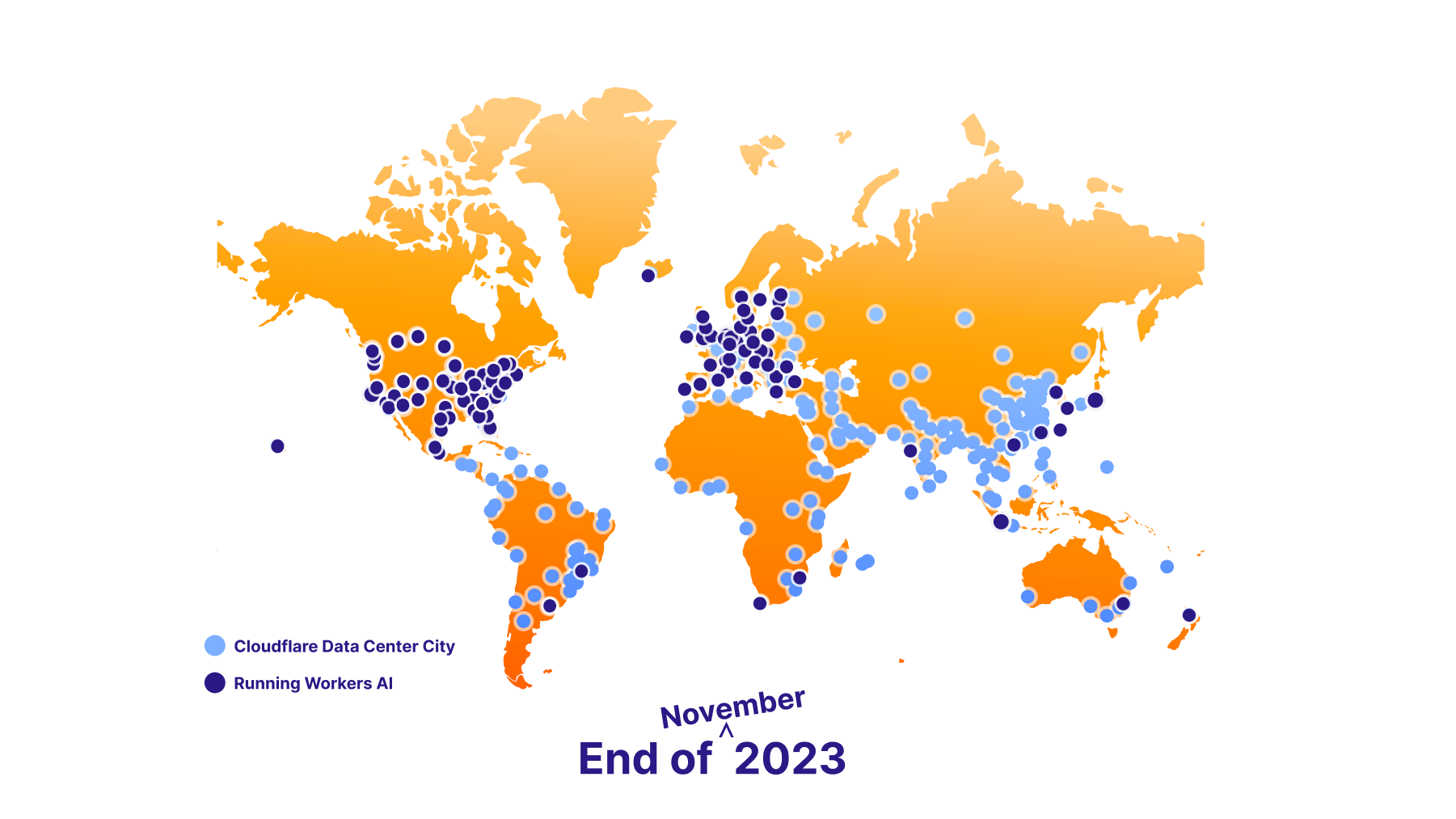

When we first released Workers AI back in September, we launched with inference running in seven cities but set an ambitious target: Workers AI inference in 100 cities by the end of the year, and nearly everywhere by the end of 2024. We’re proud to say that we’re ahead of schedule and now support Workers AI inference in 100 cities, thanks to some awesome, hard-working folks across multiple teams. For developers, this means your inference tasks are more likely to run near your users, and coverage will only continue to improve over the next 18 months.

Mistral, in case you missed it

Lastly, in case you didn’t see our other update earlier this week, we also launched Mistral 7B, a super capable and powerful language model that packs a punch for its size. You can read more about it here, or start building with it here.

Go forth and build something fun

Today we gave you images (art), code, and Workers AI inference running in more cities. Please go have fun, build something cool, and if you need help, want to give feedback, or want to share what you’re building, just pop into our Developer Discord!

Happy Thanksgiving!

Additionally, if you’re just getting started with AI, we’ll be offering a series of developer workshops covering everything from the basics, such as embeddings, models, and vector databases, to getting started with LLMs on Workers AI, and more. We encourage you to sign up here.