At Cloudflare, we’re used to being the fastest in the world. However, for approximately 30 minutes last December, Cloudflare was slow. Between 20:10 and 20:40 UTC on December 16, 2021, web requests served by Cloudflare were artificially delayed by up to five seconds before being processed. This post tells the story of how a missing shell option called “pipefail” slowed Cloudflare down.

Background

Before we can tell this story, we need to introduce you to some of its characters.

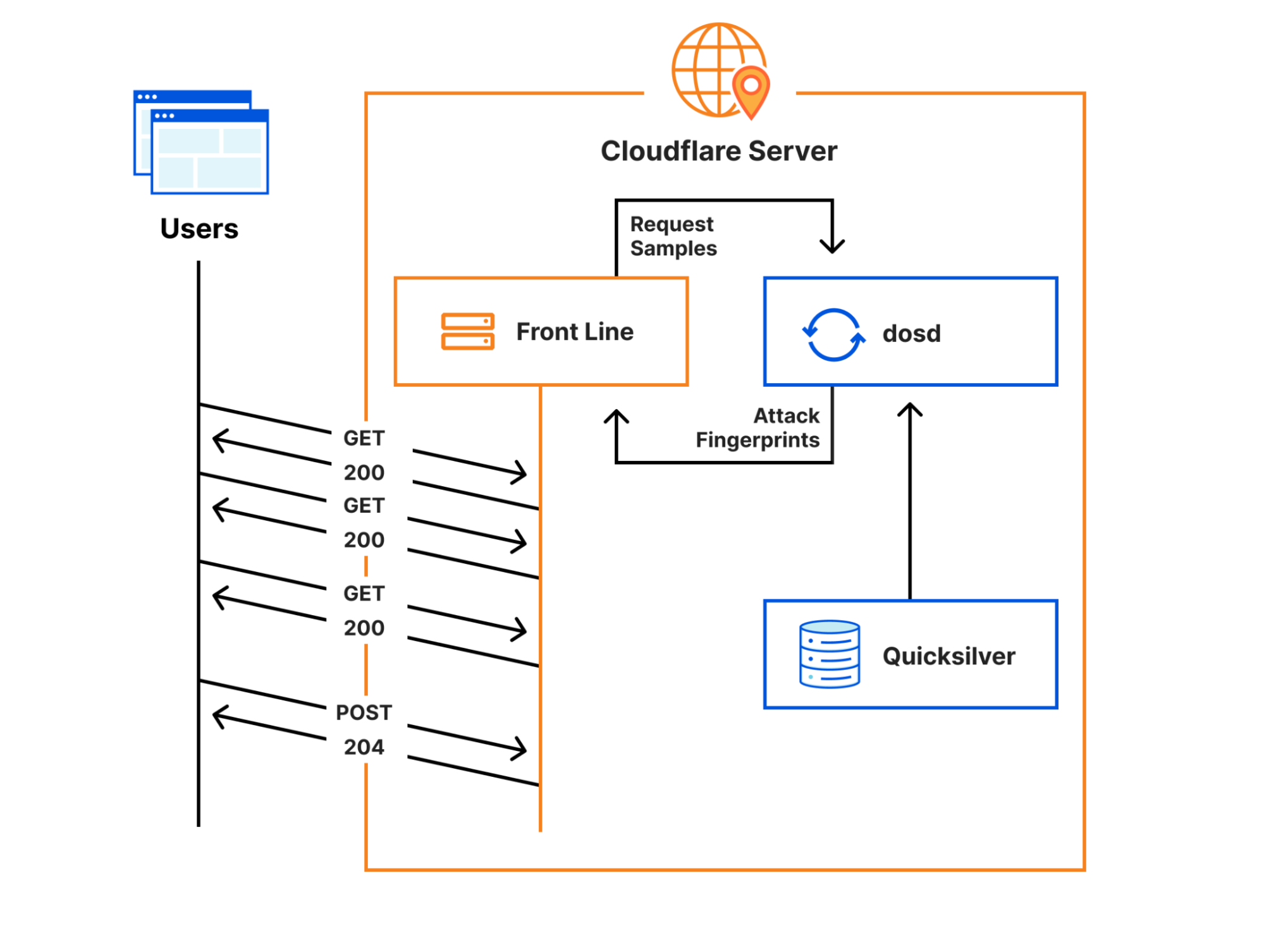

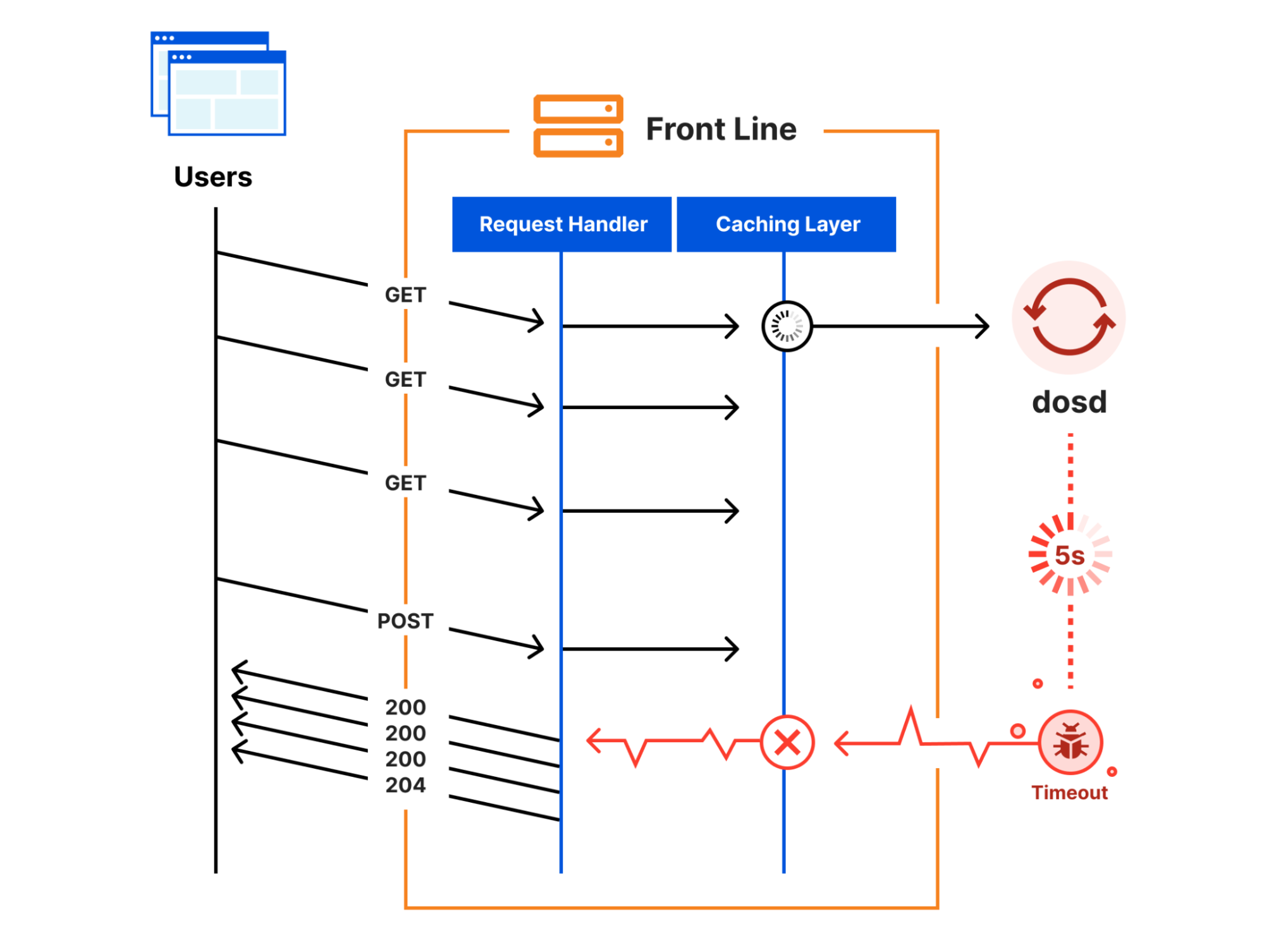

Cloudflare’s Front Line protects millions of users from some of the largest attacks ever recorded. This protection is orchestrated by a sidecar service called dosd, which analyzes traffic and looks for attacks. When dosd detects an attack, it provides Front Line with a list of attack fingerprints that describe how Front Line can match and block the attack traffic.

Instances of dosd run on every Cloudflare server, and they communicate with each other using a peer-to-peer mesh to identify malicious traffic patterns. This decentralized design allows dosd to perform analysis with much higher fidelity than is possible with a centralized system, but its scale also imposes some strict performance requirements. To meet these requirements, we need to provide dosd with very fast access to large amounts of configuration data, which naturally means that dosd depends on Quicksilver. Cloudflare developed Quicksilver to manage configuration data and replicate it around the world in milliseconds, allowing it to be accessed by services like dosd in microseconds.

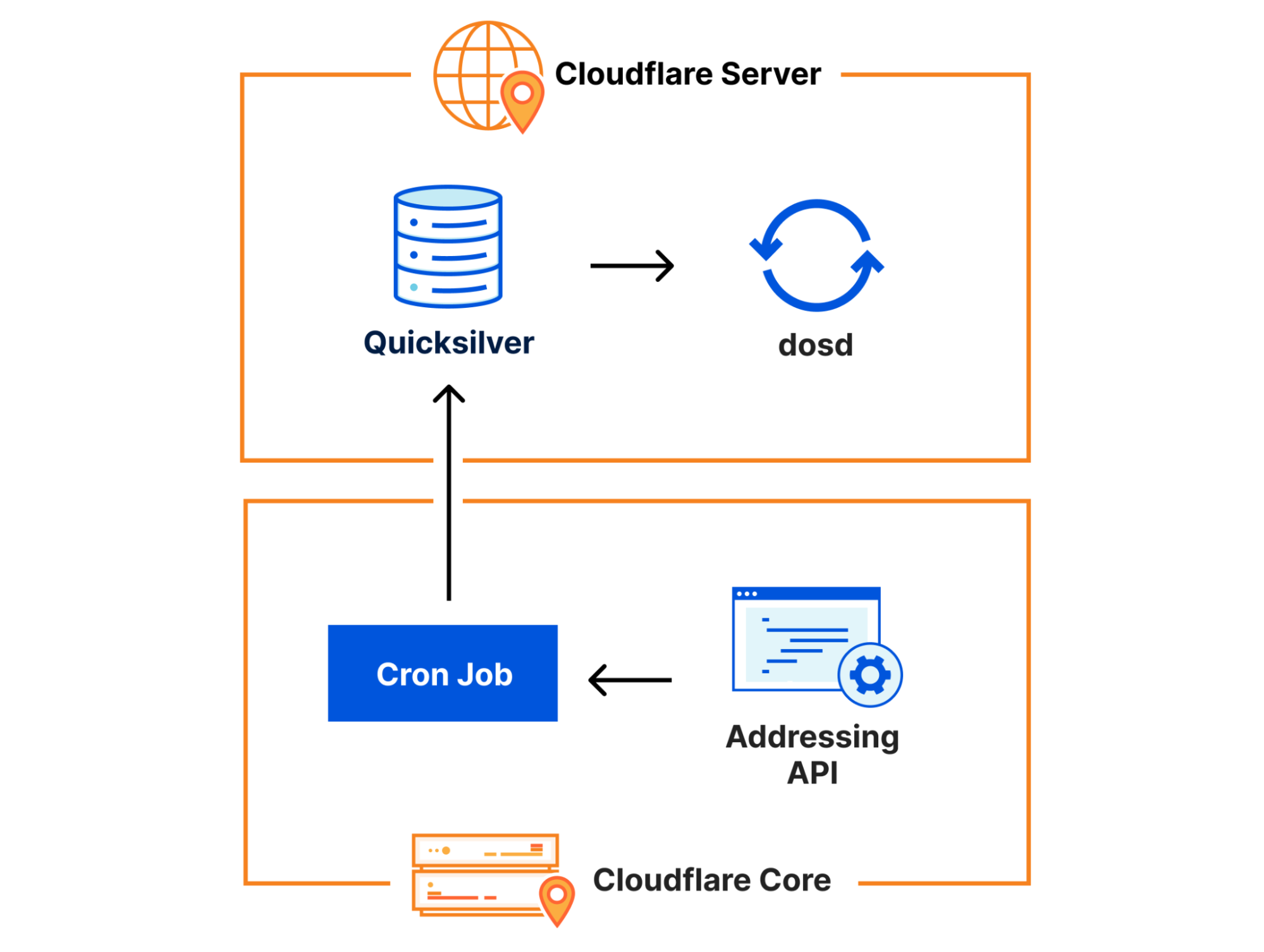

One piece of configuration data that dosd needs comes from the Addressing API, which is our authoritative IP address management service. The addressing data it provides is important because dosd uses it to understand what kind of traffic is expected on particular IPs. Since addressing data doesn’t change very frequently, we use a simple Kubernetes cron job to query it at 10 minutes past each hour and write it into Quicksilver, allowing it to be efficiently accessed by dosd.

With this context, let’s walk through the change we made on December 16 that ultimately led to the slowdown.

The Change

Approximately once a week, all of our Bug Fixes and Performance Improvements to the Front Line codebase are released to the network. On December 16, the Front Line team released a fix for a subtle bug in how the code handled compression in the presence of a Cache-Control: no-transform header. Unfortunately, the team realized pretty quickly that this fix actually broke some customers who had started depending on that buggy behavior, so the team decided to roll back the release and work with those customers to correct the issue.

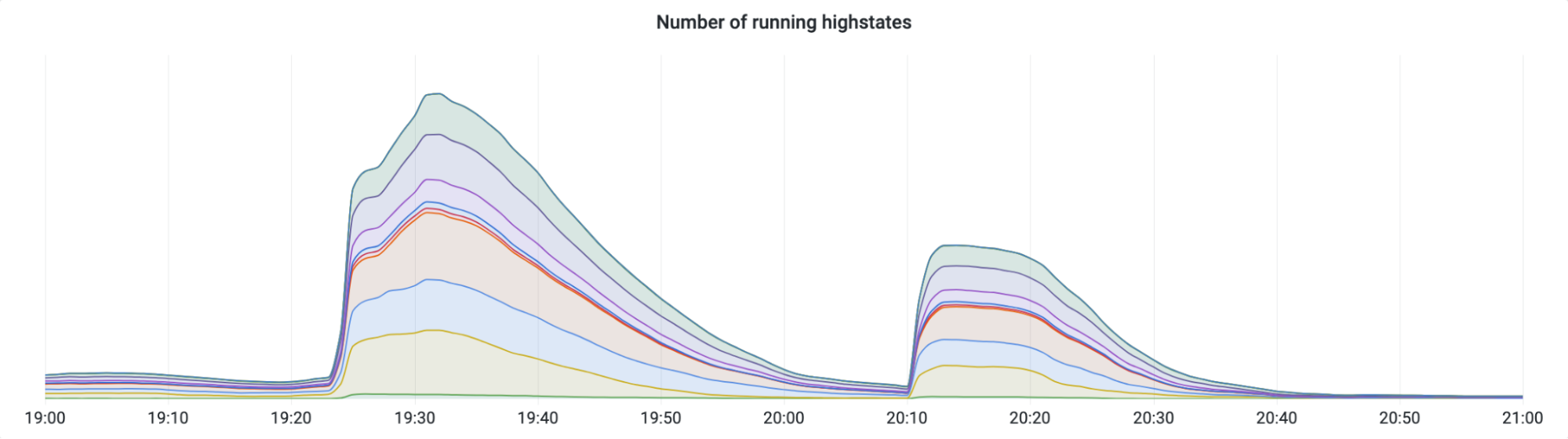

Here’s a graph showing the progression of the rollback. While most releases and rollbacks are fully automated, this particular rollback needed to be performed manually due to its urgency. Since this was a manual rollback, SREs decided to perform it in two batches as a safety measure. The first batch went to our smaller tier 2 and 3 data centers, and the second batch went to our larger tier 1 data centers.

SREs started the first batch at 19:25 UTC, and it completed in about 30 minutes. Then, after verifying that there were no issues, they started the second batch at 20:10. That’s when the slowdown started.

The Slowdown

Within minutes of starting the second batch of rollbacks, alerts started firing. “Traffic levels are dropping.” “CPU utilization is dropping.” “A P0 incident has been automatically declared.” The timing could not be a coincidence. Somehow, a deployment of known-good code, which had been limited to a subset of the network and which had just been successfully performed 40 minutes earlier, appeared to be causing a global problem.

A P0 incident is an “all hands on deck” emergency, so dozens of Cloudflare engineers quickly began to assess impact to their services and test their theories about the root cause. The rollback was paused, but that did not fix the problem. Then, approximately 10 minutes after the start of the incident, my team – the DOS team – received a concerning alert: “dosd is not running on numerous servers.” Before that alert fired we had been investigating whether the slowdown was caused by an unmitigated attack, but this required our immediate attention.

Based on service logs, we were able to see that dosd was panicking because the customer addressing data in Quicksilver was corrupted in some way. Remember: the data in this Quicksilver key is important. Without it, dosd could not make correct choices anymore, so it refused to continue.

Once we realized that the addressing data was corrupted, we had to figure out how it was corrupted so that we could fix it. The answer turned out to be pretty obvious: the Quicksilver key was completely empty.

Following the old adage – “did you try restarting it?” – we decided to manually re-run the Kubernetes cron job that populates this key and see what happened. At 20:40 UTC, the cron job was manually triggered. Seconds after it completed, dosd started running again, and traffic levels began returning to normal. We confirmed that the Quicksilver key was no longer empty, and the incident was over.

The Aftermath

Despite fixing the problem, we still didn’t really understand what had just happened.

Why was the Quicksilver key empty?

It was urgent that we quickly figure out how an empty value was written into that Quicksilver key, because for all we knew, it could happen again at any moment.

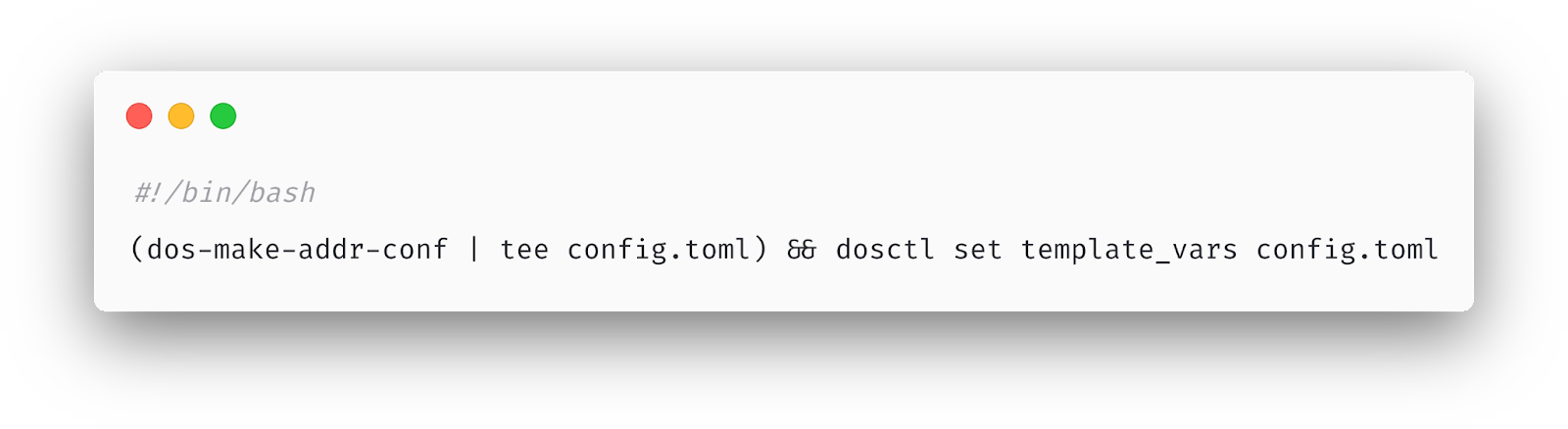

We started by looking at the Kubernetes cron job, which turned out to have a bug:

This cron job is implemented using a small Bash script. If you’re unfamiliar with Bash (particularly shell pipelining), here’s what it does:

First, the dos-make-addr-conf executable runs. Its job is to query the Addressing API for various bits of JSON data and serialize it into a Toml document, which gets written Into config.toml. Afterward, that Toml is passed as input into the dosctl executable, whose job is to simply write it into a Quicksilver key called template_vars.

Can you spot the bug? Here’s a hint: what happens if dos-make-addr-conf fails for some reason and exits with a non-zero error code? It turns out that, by default, the shell pipeline ignores the error code and continues executing! This means that the output of dos-make-addr-conf (which could be empty) gets unconditionally written into dosctl and used as the value of the template_vars key, regardless of whether dos-make-addr-conf succeeded or failed.

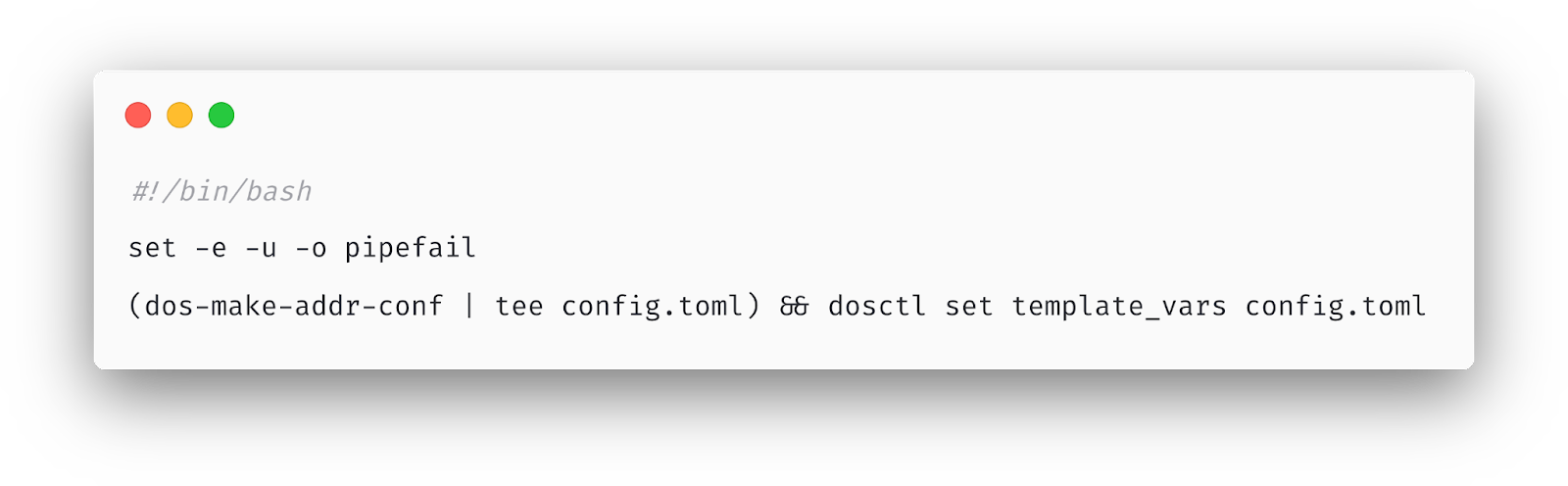

30 years ago, when the first users of Bourne shell were burned by this problem, a shell option called “pipefail” was introduced. Enabling this option changes the shell’s behavior so that, when any command in a pipeline series fails, the entire pipeline fails. However, this option is not enabled by default, so it’s widely recommended as best practice that all scripts should start by enabling this (and a few other) options.

Here’s the fixed version of that cron job:

This bug was particularly insidious because dosd actually did attempt to gracefully handle the case where this Quicksilver key contained invalid Toml. However, an empty string is a perfectly valid Toml document. If an error message had been accidentally written into this Quicksilver key instead of an empty string, then dosd would have rejected the update and continued to use the previous value.

Why did that cause the Front Line to slow down?

We had figured out how an empty key could be written into Quicksilver, and we were confident that it wouldn’t happen again. However, we still needed to untangle how that empty key caused such a severe incident.

As I mentioned earlier, the Front Line relies on dosd to tell it how to mitigate attacks, but it doesn’t depend on dosd directly to serve requests. Instead, once every few seconds, the Front Line asynchronously asks dosd for new attack fingerprints and stores them in an in-memory cache. This cache is consulted while serving each request, and if dosd ever fails to provide fresh attack fingerprints, then the stale fingerprints will continue to be used instead. So how could this have caused the impact that we saw?

As part of the rollback process, the Front Line’s code needed to be reloaded. Reloading this code implicitly flushed the in-memory caches, including the attack fingerprint data from dosd. The next time that a request tried to consult with the cache, the caching layer realized that it had no attack fingerprints to return and a “cache miss” happened.

To handle a cache miss, the caching layer tried to reach out to dosd, and this is when the slowdown happened. While the caching layer was waiting for dosd to reply, it blocked all pending requests from progressing. Since dosd wasn’t running, the attempt eventually timed out after five seconds when the caching layer gave up. But in the meantime, each pending request was stuck waiting for the timeout to happen. Once it did, all the pending requests that were queued up over the five-second timeout period became unblocked and were finally allowed to progress. This cycle repeated over and over again every five seconds on every server until the dosd failure was resolved.

To trigger this slowdown, not only did dosd have to fail, but the Front Line’s in-memory cache had to also be flushed at the same time. If dosd had failed, but the Front Line’s cache had not been flushed, then the stale attack fingerprints would have remained in the cache and request processing would not have been impacted.

Why didn’t the first rollback cause this problem?

These two batches of rollbacks were performed by forcing servers to run a Salt highstate. When each batch was executed, thousands of servers began running highstates at the same time. The highstate process involves, among other things, contacting the Addressing API in order to retrieve various bits of customer addressing information.

The first rollback started at 19:25 UTC, and the second rollback started 45 minutes later at 20:10. Remember how I mentioned that our Kubernetes cron job only runs on the 10th minute of every hour? At 21:10 – exactly the time that our cron job started executing – thousands of servers also began to highstate, flooding the Addressing API with requests. All of these requests were queued up and eventually served, but it took the Addressing API a few minutes to work through the backlog. This delay was long enough to cause our cron job to time out, and, due to the “pipefail” bug, inadvertently clobber the Quicksilver key that it was responsible for updating.

To trigger the “pipefail” bug, not only did we have to flood the Addressing API with requests, we also had to do it at exactly 10 minutes after the hour. If SREs had started the second batch of rollbacks a few minutes earlier or later, this bug would have continued to lay dormant.

Lessons Learned

This was a unique incident where a chain of small or unlikely failures cascaded into a severe and painful outage that we deeply regret. In response, we have hardened each link in the chain:

- A manual rollback inadvertently triggered the thundering herd problem, which overwhelmed the Addressing API. We have since significantly scaled out the Addressing API, so that it can handle high request rates if it ever again has to.

- An error in a Kubernetes cron job caused invalid data to be written to Quicksilver. We have since made sure that, when this cron job fails, it is no longer possible for that failure to clobber the Quicksilver key.

dosddid not correctly handle all possible error conditions when loading configuration data from Quicksilver, causing it to fail. We have since taken these additional conditions into account where necessary, so thatdosdwill gracefully degrade in the face of corrupt Quicksilver data.- The Front Line had an unexpected dependency on

dosd, which caused it to fail whendosdfailed. We have since removed all such dependencies, and the Front Line will now gracefully survivedosdfailures.

More broadly, this incident has served as an example to us of why code and systems must always be resilient to failure, no matter how unlikely that failure may seem.