Over the past few days we learnt about a new attack that exposed a serious weakness in the encryption protocol used to secure all modern Wi-Fi networks. The KRACK Attack effectively allows interception of traffic on wireless networks secured by the WPA2 protocol. Whilst it is possible to patch existing implementations to mitigate this vulnerability, security updates are rarely installed universally.

Prior to this vulnerability, there was no shortage of wireless networks that were vulnerable to interception attacks. Some wireless networks continue to use a dated security protocol (called WEP) that is demonstrably "totally insecure" [1]; other wireless networks, such as those in coffee shops and airports, remain completely open and do not authenticate users. Once an attacker gains access to a network, they can act as a proxy to intercept connections over the network (using tactics known as ARP Cache Poisoning and DNS Hijacking). And yes, these interception tactics can just as easily be deployed against wired networks where someone gains access to an Ethernet port.

With all this known, it is beyond doubt that it is simply not secure to blindly trust the medium that connects your users to the internet. HTTPS was created to allow HTTP traffic to be transmitted in encrypted form. However, the authors of the KRACK Attack presented a video demonstration of how the encryption could be completely stripped away on a popular dating site (despite the website supporting HTTPS). This blog post presents a plain-English primer on how HTTPS protection can be stripped, and on mechanisms for mitigating this.

HTTP over TLS

The internet is built on a patchwork of standards, with components being refactored and rebuilt in new published standards. When one standard is found to be flawed, it is later patched or replaced by a new standard. As a standard is falsified and replaced by a better one, the internet as a whole becomes better.

The HTTP protocol was originally specified to communicate data in the clear over the internet. Prior to the official introduction of HTTP 1.0, the first documented version of HTTP was known as HTTP V0.9 and was published in 1991. Netscape were the first to recognise the need for greater security assurance over the internet and in mid-1994 HTTPS was implemented into the Netscape browser. In order to implement greater security assurance, a technology called SSL (Secure Socket Layer) was created.

SSL 1.0 was short lived (and not even officially standardised) due to a number of security concerns and shortcomings. This protocol was incrementally updated in SSL 2.0 and SSL 3.0; this was then iteratively superseded by the TLS (Transport Layer Security) standards.

| Protocol | SSL 1.0 | SSL 2.0 | SSL 3.0 | TLS 1.0 | TLS 1.1 | TLS 1.2 | TLS 1.3 |
|---|---|---|---|---|---|---|---|
| Release | N/A | 1995 | 1996 | 1999 | 2006 | 2008 | Draft |

Each of those versions comes with different security constraints, and support varies from browser to browser. Additionally, the encryption ciphers used can be configured somewhat independently of the overlying protocol. It is therefore vital to ensure any HTTPS-enabled web server is set up to use a configuration that’s optimised to balance browser support against security. I won't go into detail on this in this post; however, you can read about SSL and TLS Deployment Best Practices in documentation provided by SSL Labs.

At a high level, the end result of HTTP over TLS is that when a site is requested over https:// instead of http://, the connection is completed in an encrypted manner. This process provides a reasonable guarantee of both privacy and integrity; in other words, we don't just encrypt the messages we're sending, we also make sure the message we receive hasn't been altered over the wire. When a secure connection is established, web browsers can indicate this to their users by lighting the browser bar green.

As SSL Certificates themselves are signed by Certificate Authorities, a degree of "domain validation" is carried out - the Certificate Authority makes sure it only signs a certificate for someone who has the ability to make changes to the website. This provides a degree of assurance that certificates aren't being issued to attackers who can then seem legitimate when intercepting web traffic. In the event a certificate ends up in the wrong hands, a Certificate Revocation List can be used to retract the certificate. Such lists are then automatically downloaded by modern Operating Systems to ensure that when an invalid certificate is served, it is marked as insecure in the browser. As there are a considerable number (>100) of Certificate Authorities with the power to issue SSL certificates, it is possible to allowlist which Certificate Authorities may issue certificates for a given domain by configuring CAA DNS records.

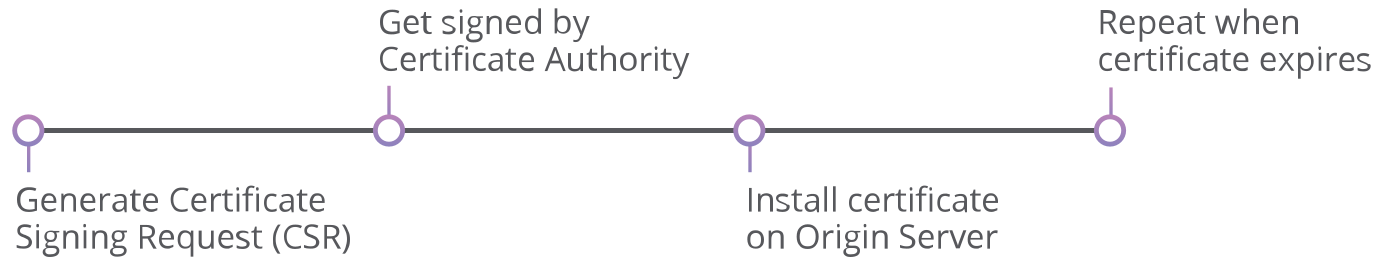

Nowadays this process can be simplified. For example, when your traffic is proxied through the Cloudflare network, we will dynamically manage renewing and signing your certificate for you (whilst using Cloudflare's Origin CA to generate a certificate to encrypt traffic back to the origin web server). Similarly, the EFF offers a tool called Certbot to make it relatively easy to generate and install Let's Encrypt certificates from the command line.

When using HTTPS, it is important that the entire content of the website is loaded over HTTPS - not just the login pages. It used to be common practice for websites to initially present the login page over a secure encrypted connection and then, once the user was logged in, downgrade the connection back to HTTP. Once a user is logged into a website, a session cookie is stored in their browser so that the website can recognise the user as logged in.

In 2010, Eric Butler demonstrated how insecure this was by building a simple interception tool called FireSheep. By eavesdropping on wireless connections, FireSheep would capture the login session for common websites. Whilst the attacker would not necessarily be able to capture the password for the website, they would be able to capture the login session and perform actions on the website as if they were logged in. They would also be able to intercept traffic while the user was logged in.

When connecting to a website using SSL, the first request should usually redirect the user to a secure version of the website. For example, when you first visit http://www.cloudflare.com/ an HTTP 301 redirect is used to send you to the HTTPS version of the site, https://www.cloudflare.com/.
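A redirect of this kind can be sketched as a tiny WSGI application. This is only an illustration under stated assumptions: the function name `redirect_to_https` is hypothetical, and the sketch assumes the site is reachable on the default HTTP and HTTPS ports.

```python
from wsgiref.util import request_uri


def redirect_to_https(environ, start_response):
    """WSGI app that answers every plain-HTTP request with a 301 to HTTPS."""
    # Rebuild the URL the client asked for, including any query string.
    url = request_uri(environ, include_query=True)
    # Swap only the scheme; host, path and query are left untouched.
    if url.startswith("http://"):
        url = "https://" + url[len("http://"):]
    start_response(
        "301 Moved Permanently",
        [("Location", url), ("Content-Length", "0")],
    )
    return [b""]
```

Note that the redirect itself travels over unencrypted HTTP, so anyone sitting on the path can see it and tamper with it.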

This raises an important question: if someone is able to intercept the unencrypted request to the HTTP version of the site, couldn't they then strip away the encryption and serve the site back to the user without encryption? This was a question explored by Moxie Marlinspike, whose work later led to the creation of HSTS.

HTTP Strict Transport Security (HSTS)

In 2009 at Blackhat DC, Moxie Marlinspike presented a tool known as SSLStrip. This tool would intercept HTTP traffic and whenever it spotted redirects or links to sites using HTTPS, it would transparently strip them away.

Instead of the victim connecting directly to a website, the victim would connect to the attacker, and the attacker would initiate the connection back to the website. This is known as an on-path attack.

The magic of SSLStrip was that whenever it spotted a link to an HTTPS webpage on an unencrypted HTTP connection, it would replace the HTTPS with HTTP and sit in the middle to intercept the connection. The interceptor would make the encrypted connection back to the web server over HTTPS, and serve the traffic back to the site visitor unencrypted (logging any interesting passwords or credit card information in the process).
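The rewriting step can be illustrated with a few lines of Python. This is a toy sketch of the idea, not SSLStrip's actual code; the function name `strip_https_links` and the regular expression are assumptions made for the example.

```python
import re

# Matches an https:// URL up to the next quote, angle bracket or whitespace.
HTTPS_URL = re.compile(r"https://([^\s\"'<>]+)")


def strip_https_links(html):
    """Return (rewritten_html, stripped) with every https:// URL downgraded.

    `stripped` records the downgraded URLs, so that a proxy on the path would
    know which later http:// requests to forward upstream over HTTPS.
    """
    stripped = set()

    def downgrade(match):
        plain = "http://" + match.group(1)
        stripped.add(plain)
        return plain

    return HTTPS_URL.sub(downgrade, html), stripped
```

The visitor never sees an https:// link, so their browser never attempts a secure connection in the first place.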

In response, a protocol called HTTP Strict Transport Security (HSTS) was created in 2012 and specified in RFC 6797. The protocol works by the server responding with a special header called Strict-Transport-Security, which tells the client that whenever they reconnect to the site, they must use HTTPS. The header contains a "max-age" field which defines how long (in seconds) this rule should last from the moment it was last seen.
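To make the header format concrete, here is a minimal sketch of how a client might read it. The function name `parse_hsts` and the shape of the returned dictionary are assumptions for illustration; real browsers apply further rules from RFC 6797 that are left out here.

```python
def parse_hsts(header_value):
    """Parse a Strict-Transport-Security header value into a small dict.

    Returns None when no valid max-age directive is present, since max-age
    is the one directive the header cannot do without.
    """
    policy = {"max_age": None, "include_subdomains": False}
    for directive in header_value.split(";"):
        name, _, value = directive.strip().partition("=")
        name = name.strip().lower()       # directive names are case-insensitive
        value = value.strip().strip('"')  # max-age may be sent as a quoted string
        if name == "max-age" and value.isascii() and value.isdigit():
            policy["max_age"] = int(value)
        elif name == "includesubdomains":
            policy["include_subdomains"] = True
    return policy if policy["max_age"] is not None else None
```

For example, `parse_hsts("max-age=31536000; includeSubDomains")` describes a rule that lasts one year and also covers subdomains.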

Whilst this provided an improvement in preventing interception attacks, it wasn't perfect and there remain a number of shortcomings.

HSTS Preloading

One of the shortcomings of HSTS is the fact that it requires a previous connection to know to always connect securely to a particular site. When the visitor first connects to the website, they won't have received the HSTS rule that tells them to always use HTTPS. Only on subsequent connections will the visitor's browser be aware of the HSTS rule that requires them to connect over HTTPS.

Other mechanisms of attacking HSTS have been explored. For example, by hijacking the protocol used to sync a computer's time (NTP), it can be possible to set a computer's date and time to one in the future. If that date falls after the point at which the HSTS rule expires, the rule is discarded and HSTS is bypassed[2].
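Both shortcomings can be seen in a toy model of the store in which a browser remembers HSTS rules. The class `HstsCache` and its methods are hypothetical, and time is passed in as a plain number of seconds so the effect of a manipulated clock is easy to follow.

```python
class HstsCache:
    """Toy model of a browser's store of HSTS rules, keyed by host name."""

    def __init__(self):
        self._expiry = {}  # host -> time (in seconds) at which the rule lapses

    def note_header(self, host, max_age, now):
        # Called after a response carrying Strict-Transport-Security over HTTPS.
        self._expiry[host] = now + max_age

    def must_use_https(self, host, now):
        expires_at = self._expiry.get(host)
        return expires_at is not None and now < expires_at


cache = HstsCache()
first_visit = cache.must_use_https("example.com", now=0)          # False: no rule seen yet
cache.note_header("example.com", max_age=3600, now=0)
second_visit = cache.must_use_https("example.com", now=60)        # True: rule is remembered
after_clock_jump = cache.must_use_https("example.com", now=7200)  # False: rule looks expired
```

The first visit is unprotected because nothing has been recorded yet, and a clock pushed past the expiry time makes a perfectly valid rule look stale.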

HSTS Preload Lists are one potential solution to these issues: they effectively work by hardcoding a list of websites that must only be connected to over HTTPS. Sites with HSTS enabled can be submitted to the Chrome HSTS Preload List at hstspreload.org, which is also used as the basis of the preload lists in other browsers.

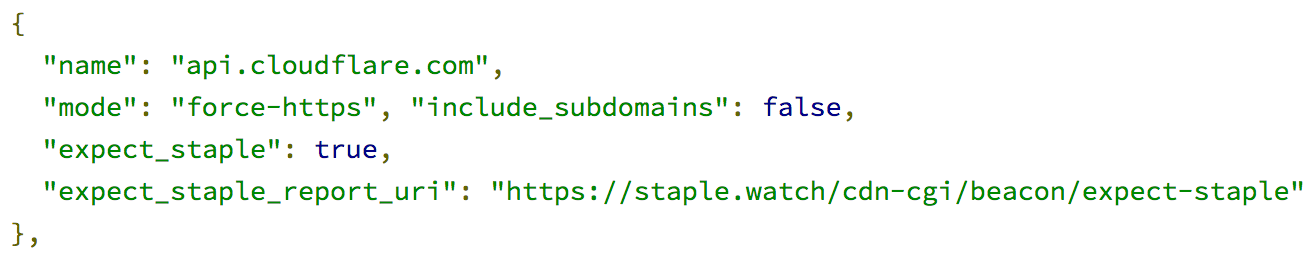

Inside the source code of Google Chrome, there is a file which hardcodes the HSTS properties for every domain in the Preload List, with each entry formatted in JSON.
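As a rough sketch of how such a list might be consulted, the snippet below checks a host name against a couple of made-up entries. The field names (`name`, `include_subdomains`, `mode`), the domains and the function `is_preloaded` are all assumptions for illustration and should not be read as Chrome's actual data or lookup code.

```python
import json

# Hypothetical entries; the field names are assumptions made for this sketch.
PRELOAD_JSON = """
[
  {"name": "example.com", "include_subdomains": true, "mode": "force-https"},
  {"name": "login.example.net", "include_subdomains": false, "mode": "force-https"}
]
"""


def is_preloaded(host, entries):
    """Return True if `host` must be loaded over HTTPS according to `entries`."""
    host = host.lower().rstrip(".")
    for entry in entries:
        if entry.get("mode") != "force-https":
            continue
        name = entry["name"]
        if host == name:
            return True
        # Subdomains are only covered when the entry explicitly says so.
        if entry.get("include_subdomains") and host.endswith("." + name):
            return True
    return False


entries = json.loads(PRELOAD_JSON)
```

Because the list ships with the browser, a match forces HTTPS even on the very first visit, and no clock manipulation can make the rule expire. A host that is not on the list, such as `example.org` here, gets no such protection.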

Even with preload, things still aren't perfect. Suppose someone is reading a blog about books, and on that blog there is a link to purchase a book from an online retailer. Even though the online retailer enforces HTTPS using HSTS, it is still possible to conduct an on-path attack, provided the blog linking to the online retailer does not use HTTPS.

More Still to be Done

Leonardo Nve revived SSLStrip in a new version called SSLStrip+, with the ability to avoid HSTS. When a site is connected to over an unencrypted HTTP connection, SSLStrip+ will look for links to HTTPS sites. When a link to an HTTPS site is found, it is rewritten to HTTP and, critically, the domain is rewritten to an artificial domain which is not on the HSTS Preload List.

For example, suppose a site contains a link to https://example.com/. The HSTS protection could be stripped by rewriting the URL to http://example.org/, with the attacker sitting in the middle, receiving traffic for http://example.org/ and proxying it to https://example.com/.

Such an attack can also be performed against redirects. Suppose http://example.net/ is loaded over HTTP but then redirects to https://example.com/, which is loaded over HTTPS. At the point the redirect is carried out, the attacker can swap the legitimate HSTS-protected destination for a phoney domain, which the attacker then uses to serve the site over HTTP and intercept traffic.

As more and more of the internet moves to HTTPS, the surface area of this attack will get smaller as there is less unencrypted HTTP traffic to intercept.

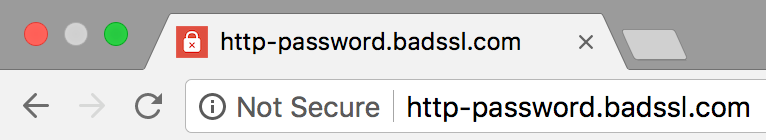

In the newly released version of Google Chrome (version 62), websites which serve input forms (such as credit card forms and password fields) on insecure connections are flagged as "Not Secure" to the user, instead of showing a neutral message. When in Incognito (private browsing) mode, Chrome will flag any website as insecure if it does not use HTTPS.

This change helps make it clearer to users when HTTPS has been stripped away from a webpage as they try to log in. Additionally, in making this change, it is hoped that more websites will adopt HTTPS - thereby improving the security of the internet as a whole.

Final Remarks

This blog post has discussed mechanisms of stripping HTTPS away from websites and, in particular, how HSTS can affect this. It is worth noting that there are other potential attack vectors within various HTTPS specifications and in certain ciphers; this blog post hasn't gone into them.

Despite HTTPS offering a mechanism for encrypting web traffic, it is important to implement technologies such as HTTP Strict Transport Security to ensure it is enforced, and preferably submit your site to HSTS Preload lists. As more websites do this, the security of the internet overall is improved.
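As one possible way of enforcing this in an application, the sketch below wraps a WSGI app so that its HTTPS responses carry the Strict-Transport-Security header. The name `add_hsts` and its parameters are hypothetical, and the one-year default for max-age is simply a commonly used value; many deployments set the header at the web server or CDN instead.

```python
def add_hsts(app, max_age=31536000, include_subdomains=True, preload=False):
    """Wrap a WSGI app so HTTPS responses carry a Strict-Transport-Security header."""
    value = "max-age=%d" % max_age
    if include_subdomains:
        value += "; includeSubDomains"
    if preload:
        value += "; preload"

    def wrapped(environ, start_response):
        def start_with_hsts(status, headers, exc_info=None):
            # Browsers ignore HSTS received over plain HTTP, so only add it on HTTPS.
            if environ.get("wsgi.url_scheme") == "https":
                headers = list(headers) + [("Strict-Transport-Security", value)]
            return start_response(status, headers, exc_info)

        return app(environ, start_with_hsts)

    return wrapped
```

It is sensible to start with a short max-age and raise it once you are confident that every part of the site, including subdomains, works over HTTPS.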

To learn how you can implement HTTPS and HSTS in practice, I'd highly recommend Troy Hunt's blog post: The 6-Step "Happy Path" to HTTPS. His blog post goes into how you can enable strong HTTPS in practice, and additionally touches on a technology that I didn't mention here known as CSP (Content Security Policy). CSP allows you to automatically upgrade or block HTTP requests made when loading pages over HTTPS, as such requests pose another attack vector.

[1] Stubblefield, A., Ioannidis, J. and Rubin, A.D., 2002, February. Using the Fluhrer, Mantin, and Shamir Attack to Break WEP. In NDSS.

[2] Selvi, J., 2014. Bypassing HTTP strict transport security. Black Hat Europe.