Today we’re excited to introduce Concurrent Streaming Acceleration, a new technique for reducing the end-to-end latency of live video on the web when using Stream Delivery.

Let’s dig into live-streaming latency, why it’s important, and what folks have done to improve it.

How “live” is “live” video?

Live streaming makes up an increasing share of video on the web. Whether it’s a TV broadcast, a live game show, or an online classroom, users expect video to arrive quickly and smoothly. And the promise of “live” is that the user is seeing events as they happen. But just how close to “real-time” is “live” Internet video?

Delivering live video on the Internet is still hard and adds lots of latency:

- The content source records video and sends it to an encoding server;

- The origin server transforms this video into a format like DASH, HLS or CMAF that can be delivered to millions of devices efficiently;

- A CDN is typically used to deliver encoded video across the globe;

- Client players decode the video and render it on the screen.

And all of this is under a time constraint: the whole process needs to happen in a few seconds, or video experiences will suffer. We call the total delay between when the video was shot and when it can be viewed on an end-user’s device the “end-to-end latency” (think of it as the time from the camera lens to your phone’s screen).

Traditional segmented delivery

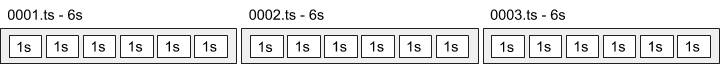

Video formats like DASH, HLS, and CMAF work by splitting video into small files, called “segments”. A typical segment duration is 6 seconds.

If a client player needs to wait for a whole 6s segment to be encoded, sent through a CDN, and then decoded, it can be a long wait! It takes even longer if you want the client to build up a buffer of segments to protect against any interruptions in delivery. A typical player buffer for HLS is 3 segments.

When you consider encoding delays, it’s easy to see why live streaming latency on the Internet has typically been about 20-30 seconds. We can do better.
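To make that arithmetic concrete, here’s a back-of-the-envelope sketch in Python. The 6-second segment and 3-segment buffer come from the figures above; the function name and the 4-second allowance for encoding and delivery are assumptions made up purely for illustration, not measurements.

```python
def startup_latency_s(segment_duration_s: float,
                      buffered_segments: int,
                      pipeline_delay_s: float = 0.0) -> float:
    """Rough latency floor when the player buffers whole segments.

    pipeline_delay_s is an assumed lump sum for encoding, packaging and
    CDN delivery. It is a placeholder, not a measured value.
    """
    return segment_duration_s * buffered_segments + pipeline_delay_s


# Player buffer alone: 3 segments of 6 seconds each.
print(startup_latency_s(6.0, 3))                        # 18.0
# Adding a hypothetical 4 s for encoding and delivery.
print(startup_latency_s(6.0, 3, pipeline_delay_s=4.0))  # 22.0
```

Even with a generous guess for the rest of the pipeline, the player buffer dominates the total, which is why shrinking the unit of delivery is the obvious place to start.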

Reduced latency with chunked transfer encoding

A natural way to solve this problem is to let client players start playing a segment while it’s still downloading, or even while it’s still being created. Making this possible requires a clever bit of cooperation to encode and deliver the files in a particular way, known as “chunked encoding.” This involves splitting up segments into smaller, bite-sized pieces, or “chunks”. Chunked encoding can typically bring live latency down to 5 or 10 seconds.

Confusingly, the word “chunk” is overloaded to mean two different things:

- CMAF or HLS chunks, which are small pieces of a segment (typically 1s) that are aligned on key frames

- HTTP chunks, which are just a way of delivering any file over the web

HTTP chunks are important because web clients have limited ability to process streams of data. Most clients can only work with data once they’ve received the full HTTP response, or at least a complete HTTP chunk. By using HTTP chunked transfer encoding, we enable video players to start parsing and decoding video sooner.

CMAF chunks are important so that decoders can actually play the bits that are in the HTTP chunks. Without encoding video in a careful way, decoders would have random bits of a video file that can’t be played.
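The HTTP side of this is simple enough to sketch. In HTTP/1.1 chunked transfer encoding, each chunk is sent as its length in hexadecimal, a CRLF, the data, and another CRLF; a zero-length chunk ends the body. The Python below is a minimal illustration of that framing only: the function names and the placeholder payloads are ours, and it ignores trailers and real socket I/O.

```python
from typing import Iterable, Iterator


def encode_chunked(pieces: Iterable[bytes]) -> Iterator[bytes]:
    """Frame each piece as one HTTP/1.1 chunk, then emit the terminator."""
    for piece in pieces:
        if not piece:
            continue  # a zero-length chunk would end the body early
        yield b"%x\r\n" % len(piece) + piece + b"\r\n"
    yield b"0\r\n\r\n"  # last-chunk marker followed by an empty trailer


def decode_chunked(body: bytes) -> Iterator[bytes]:
    """Yield the payload of each chunk in a complete chunked body."""
    pos = 0
    while True:
        line_end = body.index(b"\r\n", pos)
        # Drop any chunk extensions after ';' before parsing the hex size.
        size = int(body[pos:line_end].split(b";")[0], 16)
        if size == 0:
            return
        start = line_end + 2
        yield body[start:start + size]
        pos = start + size + 2  # skip the CRLF that follows the data


# Placeholder payloads standing in for pieces of a video segment.
wire = b"".join(encode_chunked([b"moof", b"mdat-data"]))
print(wire)                        # b'4\r\nmoof\r\n9\r\nmdat-data\r\n0\r\n\r\n'
print(list(decode_chunked(wire)))  # [b'moof', b'mdat-data']
```

A receiver can hand each payload to the player as soon as that chunk is complete, without waiting for the terminator.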

CDNs can introduce additional buffering

Chunked encoding with HLS and CMAF is growing in use across the web today. Part of what makes this technique great is that HTTP chunked encoding is widely supported by CDNs – it’s been part of the HTTP spec for 20 years.

CDN support is critical because it allows low-latency live video to scale up and reach audiences of thousands or millions of concurrent viewers – something that’s currently very difficult to do with other, non-HTTP based protocols.

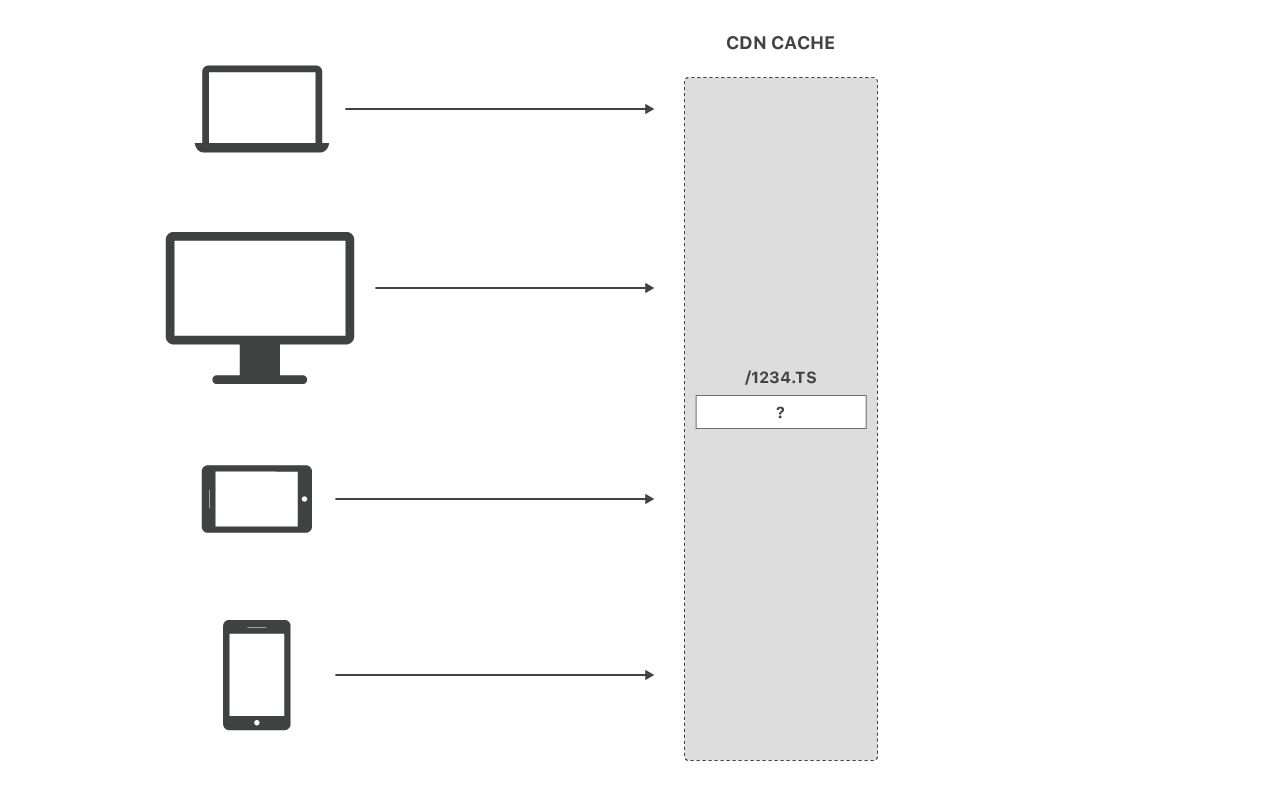

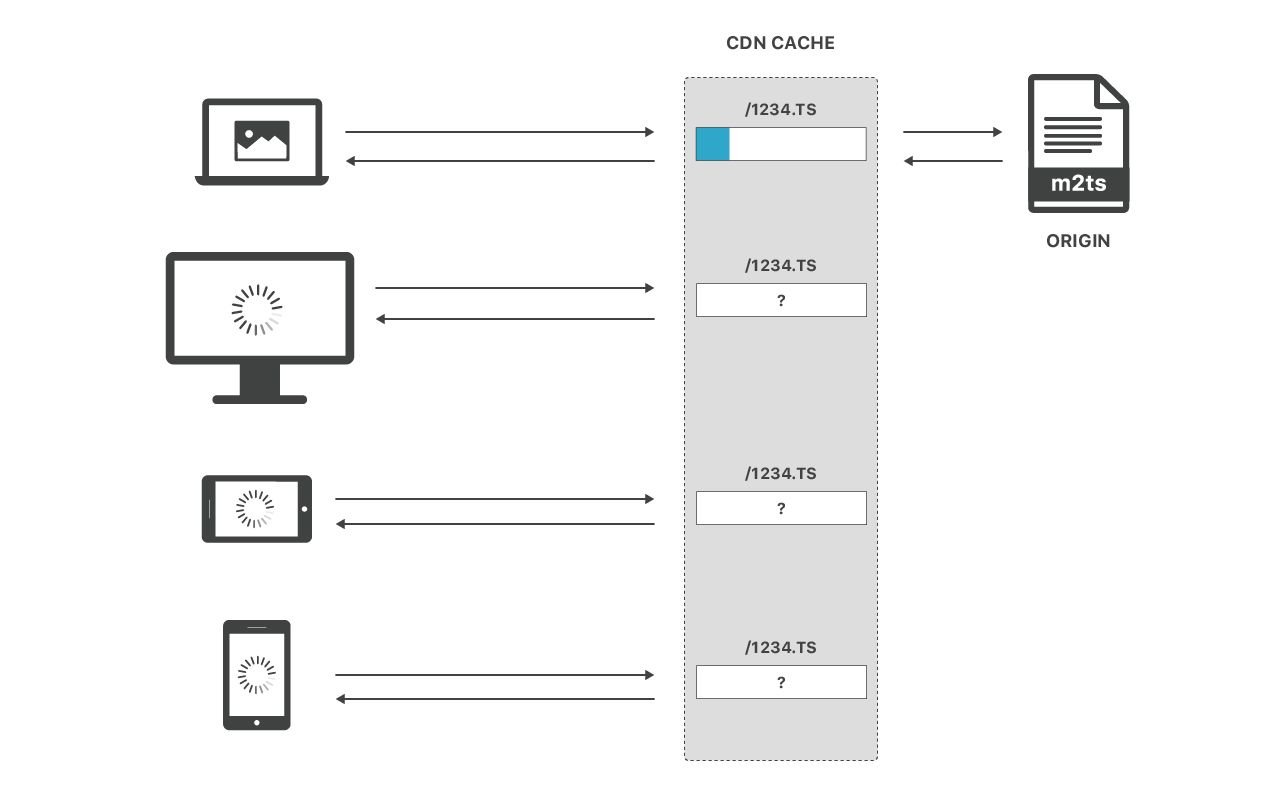

Unfortunately, even if you enable chunking to optimise delivery, your CDN may be working against you by buffering the entire segment. To understand why, consider what happens when many people request a live segment at the same time:

If the file is already in cache, great! CDNs do a great job at delivering cached files to huge audiences. But what happens when the segment isn’t in cache yet? Remember – this is the typical request pattern for live video!

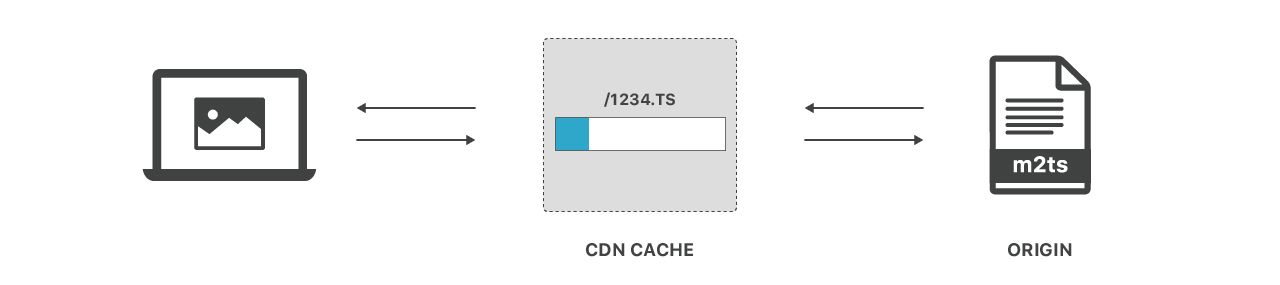

Typically, CDNs are able to “stream on cache miss” from the origin. That looks something like this:

But again – what happens when multiple people request the file at once? CDNs typically need to pull the entire file into cache before serving additional viewers:

This behavior is understandable. CDN data centers consist of many servers. To avoid overloading origins, these servers typically coordinate amongst themselves using a “cache lock” (mutex) that allows only one server to request a particular file from origin at a given time. A side effect of this is that while a file is being pulled into cache, it can’t be served to any user other than the first one that requested it. Unfortunately, this cache lock also defeats the purpose of using chunked encoding!
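A toy timing model shows how much this costs. The Python sketch below is a deliberate simplification, not a description of any real CDN: it assumes the first viewer is streamed chunks as they arrive from origin, and that under a cache lock every later viewer is held until the last chunk is in cache. The function name and all of the timings are hypothetical.

```python
from typing import List, Sequence


def time_to_first_byte(request_offsets_s: Sequence[float],
                       chunk_offsets_s: Sequence[float],
                       cache_lock: bool) -> List[float]:
    """Seconds each viewer waits for its first byte.

    request_offsets_s: when each viewer asks, relative to the first request
                       (so the first entry is 0.0).
    chunk_offsets_s:   when each chunk of the segment reaches the CDN from
                       origin, on the same clock.
    """
    waits = []
    for i, asked_at in enumerate(request_offsets_s):
        if cache_lock and i > 0:
            ready_at = chunk_offsets_s[-1]  # held until the file is complete
        else:
            ready_at = chunk_offsets_s[0]   # served from the first chunk on
        waits.append(max(0.0, ready_at - asked_at))
    return waits


# A 6 s segment produced live as six 1 s chunks; three viewers arrive
# at 0 s, 0.5 s and 2 s (made-up numbers).
chunks = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
viewers = [0.0, 0.5, 2.0]
print(time_to_first_byte(viewers, chunks, cache_lock=True))   # [1.0, 5.5, 4.0]
print(time_to_first_byte(viewers, chunks, cache_lock=False))  # [1.0, 0.5, 0.0]
```

In this model only the first viewer gets any benefit from chunking when the lock is in place; everyone else waits for the whole segment.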

To recap thus far:

- Chunked encoding splits up video segments into smaller pieces

- This can reduce end-to-end latency by allowing chunks to be fetched and decoded by players, even while segments are being produced at the origin server

- Some CDNs neutralize the benefits of chunked encoding by buffering entire files inside the CDN before they can be delivered to clients

Cloudflare’s solution: Concurrent Streaming Acceleration

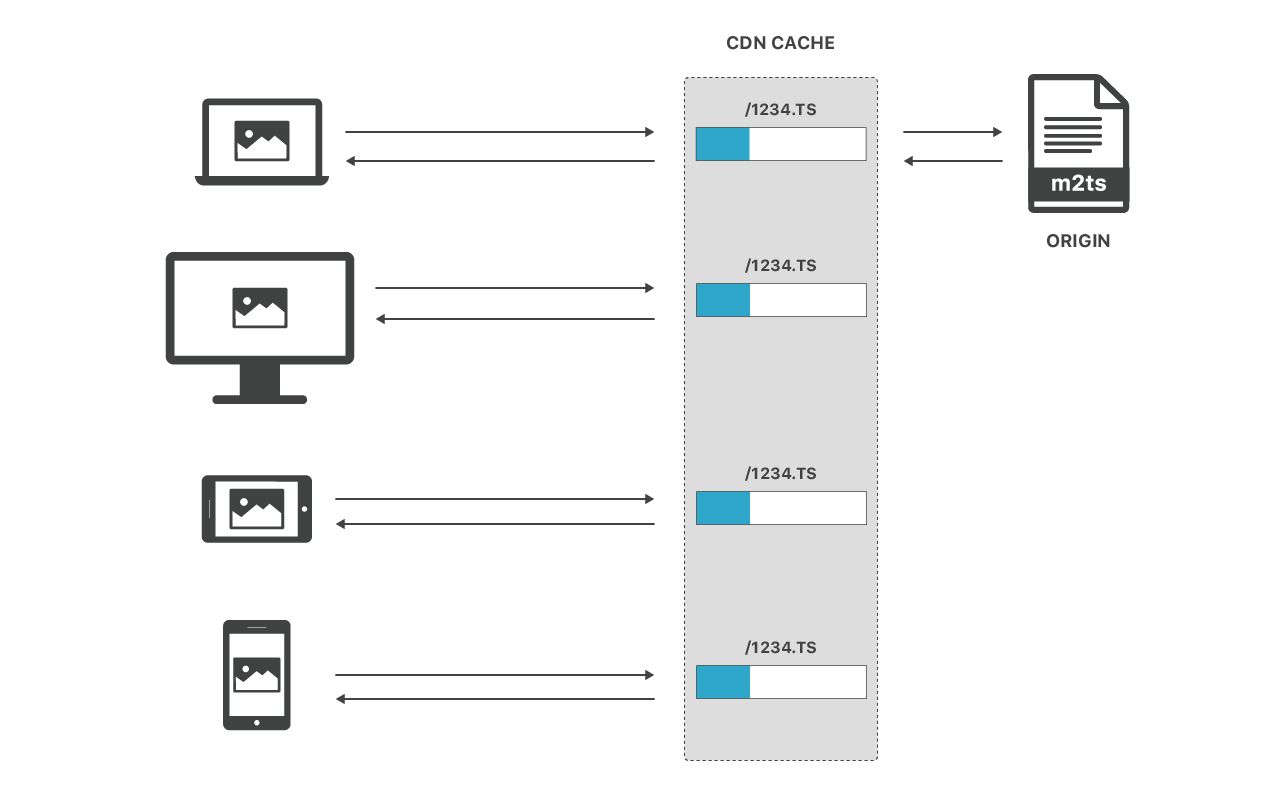

As you may have guessed, we think we can do better. Put simply, we now have the ability to deliver uncached files to multiple clients simultaneously while we pull the file once from the origin server.

This sounds like a simple change, but there’s a lot of subtlety in doing this safely. Under the hood, we’ve made deep changes to our caching infrastructure to remove the cache lock and enable multiple clients to safely read from a single file while it’s still being written.
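To give a feel for the general idea (and only the general idea), here is a small in-memory Python sketch of several readers following one entry while a single writer fills it. This is not Cloudflare’s implementation; the class, its methods, and the demo are hypothetical, and a real cache also has to deal with disk, multiple servers, failures, and eviction.

```python
import threading
from typing import Iterator, List


class GrowingCacheEntry:
    """An append-only entry that can be read while it is being written."""

    def __init__(self) -> None:
        self._chunks: List[bytes] = []
        self._complete = False
        self._cond = threading.Condition()

    def append(self, chunk: bytes) -> None:
        """Called by the single origin fetch as each chunk arrives."""
        with self._cond:
            self._chunks.append(chunk)
            self._cond.notify_all()

    def finish(self) -> None:
        """Mark the entry complete so waiting readers can stop."""
        with self._cond:
            self._complete = True
            self._cond.notify_all()

    def read(self) -> Iterator[bytes]:
        """Yield every chunk from the start, waiting for unwritten ones."""
        index = 0
        while True:
            with self._cond:
                self._cond.wait_for(
                    lambda: index < len(self._chunks) or self._complete)
                if index >= len(self._chunks):
                    return  # complete and fully consumed
                chunk = self._chunks[index]
            index += 1
            yield chunk


def demo(viewer_count: int = 3) -> List[List[bytes]]:
    entry = GrowingCacheEntry()
    received: List[List[bytes]] = [[] for _ in range(viewer_count)]

    def viewer(i: int) -> None:
        for chunk in entry.read():
            received[i].append(chunk)

    threads = [threading.Thread(target=viewer, args=(i,))
               for i in range(viewer_count)]
    for t in threads:
        t.start()
    # One "origin fetch" feeds all viewers.
    for chunk in (b"chunk-1", b"chunk-2", b"chunk-3"):
        entry.append(chunk)
    entry.finish()
    for t in threads:
        t.join()
    return received


received = demo()
```

Every viewer ends up with the same three chunks in order, and none of them has to wait for the entry to be finished before it starts reading.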

The best part is – all of Cloudflare now works this way! There’s no need to opt in, or even make a config change, to get the benefit.

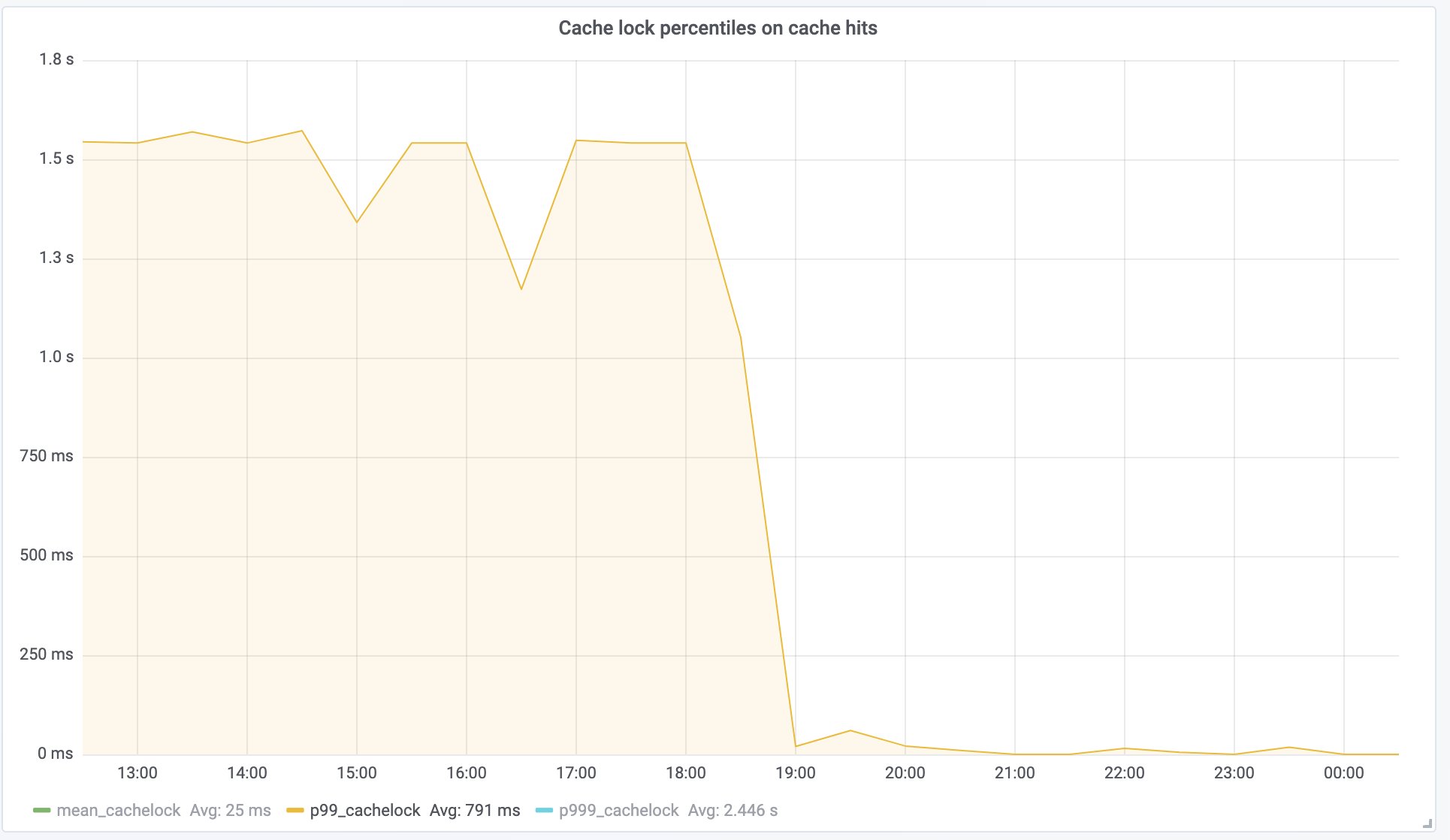

We rolled this feature out a couple of months ago and have been really pleased with the results so far. We measure success by the “cache lock wait time,” i.e. how long a request must wait for other requests – a direct component of Time To First Byte. One OTT customer saw this metric drop from 1.5s at P99 to nearly 0, as expected:

This directly translates into a 1.5-second improvement in end-to-end latency. Live video just got more live!

Conclusion

New techniques like chunked encoding have revolutionized live delivery, enabling publishers to deliver low-latency live video at scale. Concurrent Streaming Acceleration helps you unlock the power of this technique at your CDN, potentially shaving precious seconds off end-to-end latency.

If you’re interested in using Cloudflare for live video delivery, contact our enterprise sales team.

And if you’re interested in working on projects like this and helping us improve live video delivery for the entire Internet, join our engineering team!