The Time to First Byte (TTFB) of a site is the time from when the user starts navigating until the HTML for the page they requested starts to arrive. A slow TTFB has been the bane of my existence for the more than ten years I have been running WebPageTest.

Looking at a recent test data set (~100k pages):

20% TTFB > 3s

80% start render > 5s (10% > 10s)

500 pages were > 15MB

So much slow to fix

-- Patrick Meenan (@patmeenan) August 10, 2016

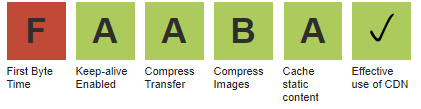

There is a reason why TTFB appears as one of the few “grades” that WebPageTest scores a site on and, specifically, why it is the first grade in the list.

If the first byte is slow, EVERY other metric will also be slow. Improving it is one of the few cases where you can predict what the impact will be on every other measurement. Every millisecond of improvement in the TTFB translates directly into a millisecond of savings in every other measurement (e.g. first paint will be 500ms faster if TTFB improves by 500ms). That said, a fast TTFB doesn't guarantee a fast experience, but a slow TTFB does guarantee a slow experience. I’d estimate that roughly 50% of all requests for help with WebPageTest results come from site owners struggling with a slow TTFB.

Many things can roll up into the TTFB, including redirects, DNS, connection setup, SSL negotiation and the actual server response time. Most of them can be fixed relatively easily by using a service like Cloudflare, but the server response time for the HTML itself is often the biggest problem and the hardest one to solve.

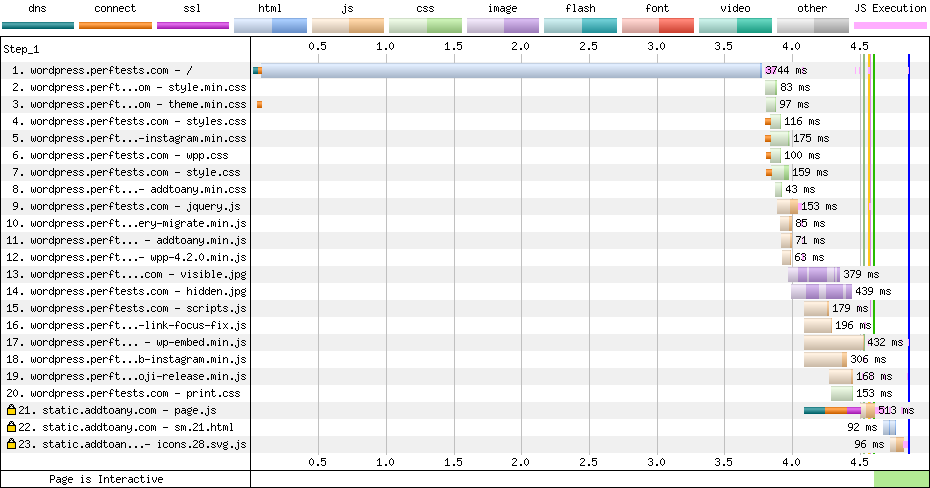

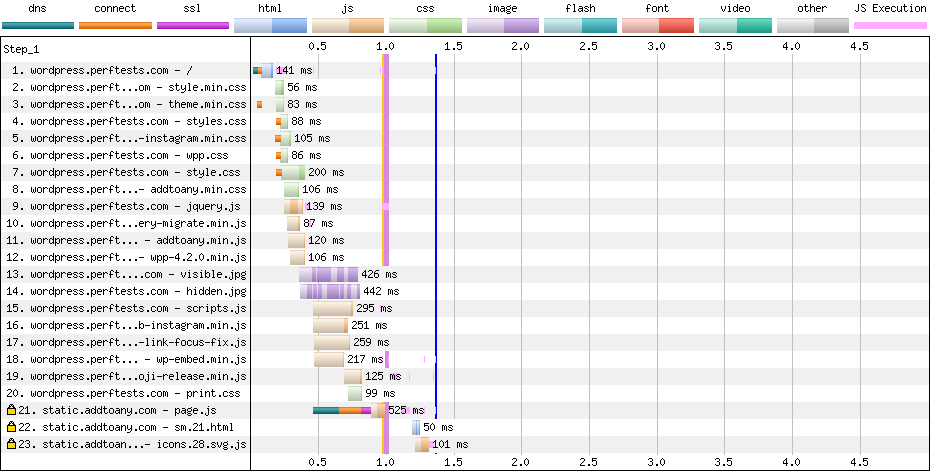

The waterfall diagram shows the server response time as a light blue bar on the first request, and it can be painfully obvious when it is slow. Under optimal conditions, the server response time would be no longer than the orange socket connect bar right before it.

Slow origin response times can be caused by an enormous assortment of issues, from the server configuration, system load, and the back-end databases and systems it talks to, to the application code itself. Getting to the root of the performance issues usually involves teams of DevOps engineers working with Application Performance Management tools to track down the slowest parts of the application and improve them.

A huge portion of the site owners I have worked with don’t have the resources or expertise to handle that kind of an investigation. More often than not, they paid someone a one-time development fee to build their site or built it themselves on WordPress and are hosting it on the lowest-cost hosting they could find. The hosting is generally designed to run as many sites as possible, not necessarily for the highest performance.

Edge Caching of HTML

The thing is, most HTML isn’t really dynamic. It needs to be able to change relatively quickly when the site is updated but for a huge portion of the web the content is static for months or years at a time.

There are special cases, like when a user is logged in (as the admin or otherwise), where the content needs to differ, but the vast majority of visits are from anonymous users. If the HTML can be cached and served directly from the edge then the performance gains can be substantial (over 3 seconds faster on all metrics in this case).

There are dozens of plugins for WordPress for caching content at the origin but they require configuration (where to cache the pages) and the performance still depends heavily on the performance of the hosting itself. Pushing the content cache further out to the edge reduces the complexity, eliminates the additional time to get back to the origin and completely removes the hosting performance from the equation. It can also significantly reduce the load on the hosting systems by offloading all of the anonymous traffic.

Cloudflare supports caching static HTML, and business and enterprise customers can enable logged-in users to skip the cache by enabling “bypass cache on cookies”. It works in tandem with the Cloudflare plugin for WordPress so the cache can be cleared whenever content is updated. There are also several other caching plugins that integrate with various CDNs, but in all cases they need to be configured with API keys for the CDN and the implementations are specific to each CDN.

Zero-Config Edge Caching of HTML

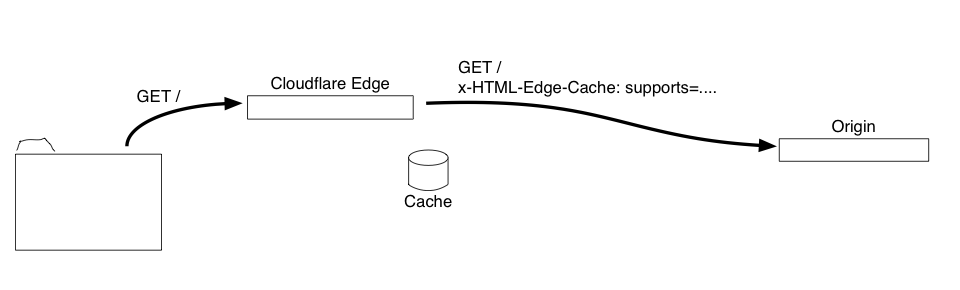

For broad adoption, we need to make the caching of HTML happen automatically (or as close to automatically as possible). To that end, we need a way to communicate between an origin (like a WordPress site) and an edge cache (like Cloudflare’s edge nodes) for managing a remote cache that can be explicitly purged.

The Origin needs to be able to:

- Recognize when there is a supported edge cache in front of it.

- Specify content that should be cached and for which visitors (e.g. visits without a login cookie).

- Purge the cached content when it has changed (globally across all edges).

Instead of requiring the origin to integrate with an API for purging on changes and requiring manual configuration for what to cache and when, we can accomplish everything using HTTP headers on the requests that travel back and forth between the edges and the origin:

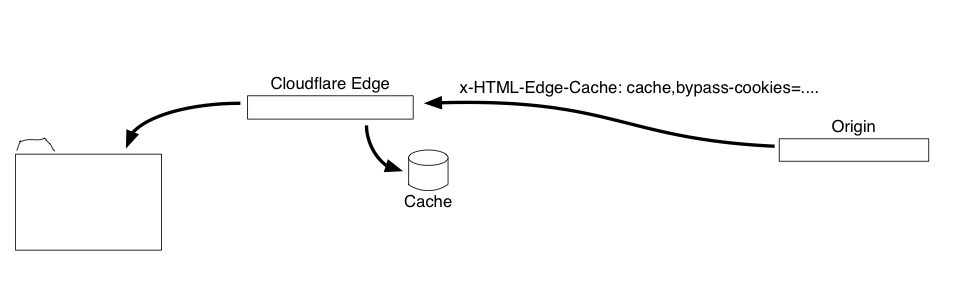

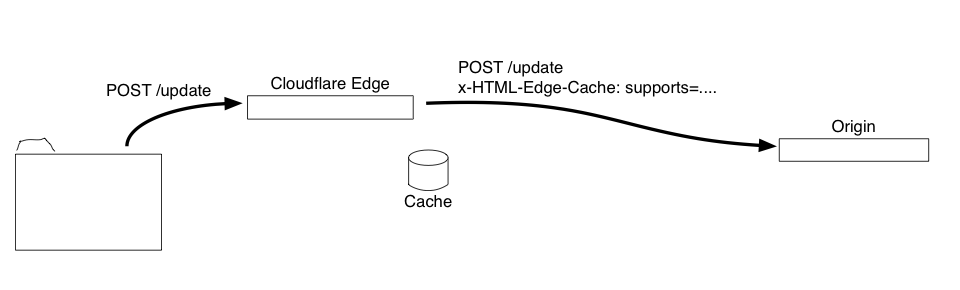

1 - An HTTP header is added to requests going from the edge to the origin to advertise that there is an edge cache present and the capabilities it supports:

x-HTML-Edge-Cache: supports=cache|purgeall|bypass-cookies
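The edge side of this first step might look roughly like the sketch below. It models request headers as a plain object rather than a real request type, and the helper names (`buildSupportsValue`, `withEdgeCacheHeader`) are made up for illustration. Dropping any client-supplied copy of the header is an assumption of this sketch, not something the protocol description spells out.

```typescript
// Header name and capability tokens are the ones shown in the article.
const EDGE_CACHE_HEADER = "x-HTML-Edge-Cache";
const CAPABILITIES = ["cache", "purgeall", "bypass-cookies"];

// Build the value the edge advertises to the origin.
function buildSupportsValue(capabilities: string[]): string {
  return "supports=" + capabilities.join("|");
}

// Hypothetical helper: return a copy of the request headers with the
// advertisement attached. Any copy of the header that arrived from the
// client is dropped first (an assumption, so visitors cannot spoof it).
function withEdgeCacheHeader(
  headers: Record<string, string>
): Record<string, string> {
  const result: Record<string, string> = {};
  for (const [name, value] of Object.entries(headers)) {
    if (name.toLowerCase() !== EDGE_CACHE_HEADER.toLowerCase()) {
      result[name] = value;
    }
  }
  result[EDGE_CACHE_HEADER] = buildSupportsValue(CAPABILITIES);
  return result;
}

const outgoing = withEdgeCacheHeader({
  "Host": "www.example.com",
  "X-HTML-EDGE-CACHE": "supports=forged",
});
console.log(outgoing[EDGE_CACHE_HEADER]);
// prints "supports=cache|purgeall|bypass-cookies"
```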

2 - When the origin responds with a cacheable page it adds an HTTP header on the response to indicate that it should be cached and any rules for when the cached version shouldn’t be used (to allow for bypassing the cache for logged-in users):

x-HTML-Edge-Cache: cache,bypass-cookies=wp-|wordpress

In this case the HTML will be cached but any requests that have cookies that start with “wordpress” or “wp-” for the cookie name will bypass the cache and go to the origin.
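As a rough illustration, an edge could split that response header into directives like this. The `EdgeCacheDirectives` shape and the function name are hypothetical, and the parser also recognizes the `purgeall` token that the same header carries in step 3. Treating directive names as case-insensitive and silently ignoring unknown tokens are assumptions of the sketch.

```typescript
// Hypothetical shape for the parsed header; not part of the article's code.
interface EdgeCacheDirectives {
  cache: boolean;
  purgeAll: boolean;
  bypassCookiePrefixes: string[];
}

// Parse a value such as "cache,bypass-cookies=wp-|wordpress".
function parseEdgeCacheDirectives(
  value: string | null | undefined
): EdgeCacheDirectives {
  const result: EdgeCacheDirectives = {
    cache: false,
    purgeAll: false,
    bypassCookiePrefixes: [],
  };
  if (!value) return result;

  for (const part of value.split(",")) {
    const token = part.trim();
    const eq = token.indexOf("=");
    const name = (eq === -1 ? token : token.slice(0, eq)).toLowerCase();
    const arg = eq === -1 ? "" : token.slice(eq + 1);

    if (name === "cache") {
      result.cache = true;
    } else if (name === "purgeall") {
      result.purgeAll = true;
    } else if (name === "bypass-cookies") {
      // Cookie-name prefixes are separated by "|".
      result.bypassCookiePrefixes = arg
        .split("|")
        .map((prefix) => prefix.trim())
        .filter((prefix) => prefix.length > 0);
    }
    // Unknown tokens are ignored (assumption).
  }
  return result;
}

const parsed = parseEdgeCacheDirectives("cache,bypass-cookies=wp-|wordpress");
console.log(parsed.cache, parsed.bypassCookiePrefixes);
```

The prefixes would be stored alongside the cached response so they can be checked on later requests for the same page.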

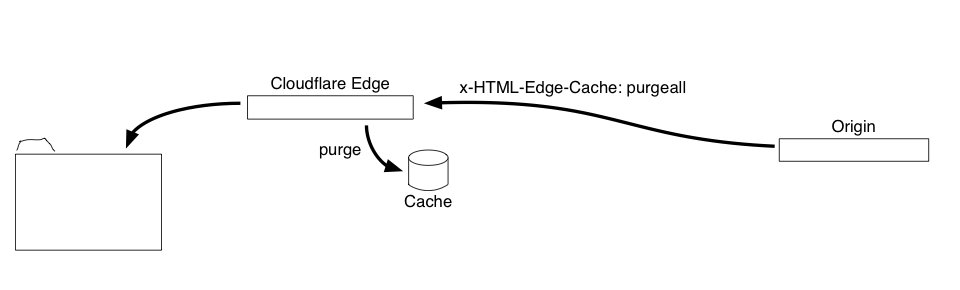

3 - When a request modifies the site content (updating a post, changing a theme, adding a comment) the origin adds an HTTP response header indicating that the cache needs to be purged:

x-HTML-Edge-Cache: purgeall

The only tricky part to deal with is that the purge needs to clear the cache from ALL of the edges, not just the one edge that the request went through.

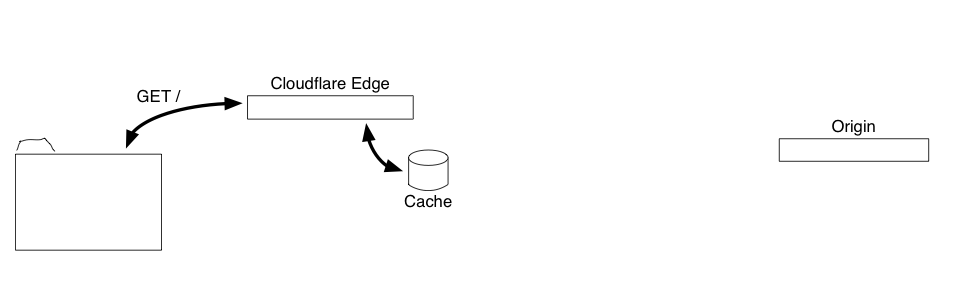

4 - When a new request comes in for HTML that is in the edge cache, the request cookies are checked against the rules for the cached response. If none of the matching cookies are present then the cached version is served; otherwise, the request is passed through to the origin.
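The cookie check in step 4 can be sketched as a small prefix match over the cookie names in the request. The function names here are illustrative only, and matching prefixes case-sensitively is an assumption (the text above only says the names "start with" the given strings).

```typescript
// Extract cookie names from a Cookie request header such as "a=1; b=2".
function cookieNames(cookieHeader: string): string[] {
  return cookieHeader
    .split(";")
    .map((pair) => {
      const eq = pair.indexOf("=");
      return (eq === -1 ? pair : pair.slice(0, eq)).trim();
    })
    .filter((name) => name.length > 0);
}

// True when any cookie name starts with one of the bypass prefixes,
// meaning the request should skip the cache and go to the origin.
function shouldBypassCache(
  cookieHeader: string | null | undefined,
  prefixes: string[]
): boolean {
  if (!cookieHeader) return false;
  return cookieNames(cookieHeader).some((name) =>
    prefixes.some((prefix) => name.startsWith(prefix))
  );
}

const rules = ["wp-", "wordpress"];
console.log(shouldBypassCache("wordpress_logged_in_abc=1; _ga=GA1.2", rules)); // prints true
console.log(shouldBypassCache("_ga=GA1.2; theme=dark", rules)); // prints false
```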

With this simple header-based command and control interface we can eliminate the need for an origin to talk to an API and for any explicit configuration. It also makes the logic on the origin significantly easier to implement as there is no configuration (or UI) and no need to make any outbound requests to a vendor-specific API. The example WordPress plugin is less than 50 lines of code and the vast majority of that is hooking up callbacks for all of the events that change content.
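To give a feel for how little logic the origin needs, here is a sketch of the decision it makes for each response. It is written in TypeScript for illustration rather than as WordPress plugin code, and the `ResponseInfo` flags and function names are hypothetical. Refusing to mark a page cacheable unless the edge also advertises `bypass-cookies` is a conservative assumption of this sketch.

```typescript
// Assumption: the origin knows two things about the response it is sending.
interface ResponseInfo {
  contentModified: boolean; // the request changed site content
  cacheable: boolean; // the page is safe to serve to anonymous visitors
}

// Parse "supports=cache|purgeall|bypass-cookies" into capability names.
function supportedCapabilities(requestHeader: string | undefined): string[] {
  if (!requestHeader) return [];
  const value = requestHeader.trim();
  const prefix = "supports=";
  if (!value.toLowerCase().startsWith(prefix)) return [];
  return value
    .slice(prefix.length)
    .split("|")
    .map((capability) => capability.trim().toLowerCase())
    .filter((capability) => capability.length > 0);
}

// Returns the x-HTML-Edge-Cache response value, or null to send no header.
function originHeaderValue(
  requestHeader: string | undefined,
  info: ResponseInfo
): string | null {
  const supported = supportedCapabilities(requestHeader);
  if (info.contentModified && supported.includes("purgeall")) {
    return "purgeall";
  }
  if (
    info.cacheable &&
    supported.includes("cache") &&
    supported.includes("bypass-cookies")
  ) {
    return "cache,bypass-cookies=wp-|wordpress";
  }
  return null; // no supported edge cache in front, or nothing to say
}

const advertised = "supports=cache|purgeall|bypass-cookies";
console.log(originHeaderValue(advertised, { contentModified: false, cacheable: true }));
// prints "cache,bypass-cookies=wp-|wordpress"
```

When no edge cache advertises itself, the function returns null and the origin behaves exactly as it would without the protocol.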

Start Caching today with WordPress and Workers

One of the things I love most about Workers is that it gives you a fully programmable edge to experiment with ideas and implement your own logic. I created a Worker script that implements the header-based protocol and edge caching on the Cloudflare edges and a WordPress plugin that implements the origin logic for WordPress.

The only tricky part with the Worker was finding a way to purge items from the cache globally. The Worker caches are local to each edge and don’t provide an interface for doing any global operations. One way to do it is to use the Cloudflare API to purge the global cache, but that is a bit of a heavy hammer (purging everything from the cache including scripts and images) and it requires some configuration. If you know the specific URLs that will be changed by a content change then doing a targeted purge through the API for just those URLs would probably be the best solution.

Using the new Workers KV store we can purge the cache a different way. The Worker script uses a versioning scheme for the cache where every URL gets a version number appended to it (e.g. http://www.example.com/?cf_edge_cache_ver=32). The modified URL is only ever used locally by the worker as a key for the cached responses, and the current version number is stored in KV, which is a global store. When the cache is purged, the version number is incremented, which changes the URL for all of the resources. Old entries will age out of the cache normally since they will no longer be accessed. It requires a little configuration to set up KV for the worker but hopefully at some point in the future that can also be automatic.
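The versioning idea can be sketched in a few lines. This is a simplified model, not the Worker script itself: a plain `Map` stands in for the global store (real KV calls are asynchronous), the key name `html_cache_version` is invented for the example, and only the `cf_edge_cache_ver` parameter name comes from the URL shown above.

```typescript
const VERSION_PARAM = "cf_edge_cache_ver"; // from the example URL in the text
const VERSION_KEY = "html_cache_version"; // hypothetical key name

// Stand-in for the global store; a simplification of an async KV store.
type GlobalStore = Map<string, string>;

// Read the current cache version, defaulting to 0 when unset or invalid.
function currentVersion(store: GlobalStore): number {
  const raw = store.get(VERSION_KEY);
  const parsed = raw === undefined ? NaN : parseInt(raw, 10);
  return Number.isNaN(parsed) ? 0 : parsed;
}

// Build the local cache key by appending the version to the URL.
function versionedCacheKey(url: string, version: number): string {
  const hashAt = url.indexOf("#");
  const base = hashAt === -1 ? url : url.slice(0, hashAt);
  const separator = base.includes("?") ? "&" : "?";
  return `${base}${separator}${VERSION_PARAM}=${version}`;
}

// "Purge" everything by bumping the version; old keys are never read again.
function purgeAll(store: GlobalStore): number {
  const next = currentVersion(store) + 1;
  store.set(VERSION_KEY, String(next));
  return next;
}

const store: GlobalStore = new Map<string, string>();
store.set(VERSION_KEY, "32");

const before = versionedCacheKey("http://www.example.com/", currentVersion(store));
purgeAll(store);
const after = versionedCacheKey("http://www.example.com/", currentVersion(store));
console.log(before); // prints "http://www.example.com/?cf_edge_cache_ver=32"
console.log(after); // prints "http://www.example.com/?cf_edge_cache_ver=33"
```

Because every edge reads the same version number, a single increment changes the lookup key everywhere without touching any individual cache entry.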

What Next?

I think there is huge value for the web in standardizing a way for edge caches and origins to communicate for caching of dynamic content. That would provide incentive for content management systems to build support directly into the platforms and provide a standard interface that could be used across different providers (and even for local edge caches in load balancers or other reverse proxies). After doing some more testing with different types of sites I’m planning to bring the concept to the IETF HTTP Working Group to see if we can come up with an official standard for the control headers (using different names). If you have opinions about how it should work or what features you’d need exposed I’d love to hear about it (like purging specific URLs, varying content for mobile/desktop or region, expanding it to cover all content types, etc.).