As a developer, you may be all too familiar with the stress of responding to a major service outage, becoming aware of an ongoing security breach, or simply dealing with the frustration of setting up a new service for the first time. When confronted with these situations, you want a real-time view into the events flowing through your network, so you can receive, process, and act on information as quickly as possible.

If you have a UNIX mindset, you’ll be familiar with tailing web service logs and searching for patterns using grep. With distributed systems like Cloudflare’s edge network, this task becomes much more complex because you’ll either need to log in to thousands of servers, or ship all the logs to a single place.

This is why we built Instant Logs. Instant Logs removes all barriers to accessing your Cloudflare logs, giving you a complete platform to view your HTTP logs in real time, with just a single click, right from within Cloudflare’s dashboard. Powerful filters then let you drill into specific events or search for patterns, and act on them instantly.

The Challenge

Today, Cloudflare’s Logpush product already gives customers the ability to ship their logs to a third-party analytics or storage provider of their choosing. While this system is already exceptionally fast, delivering logs in about 15s on average, it is optimized for completeness and the utmost certainty that your data is reliably making it to its destination. It is the ideal solution for after things have settled down, and you want to perform a forensic deep dive or retrospective.

We originally aimed to extend this system to provide our real-time logging capabilities, but we soon realized the objectives were inherently at odds with each other. In order to get all of your data, to a single place, all the time, the laws of the universe require that latencies be introduced into the system. We needed a complementary solution, with its own unique set of objectives.

This ultimately boiled down to the following

- It has to be extremely fast, in human terms. This means average latencies between an event occurring at the edge and being received by the client should be under three seconds.

- We wanted the system design to be simple, and communication to be as direct to the client as possible. This meant operating the dataplane entirely at the edge, eliminating unnecessary round trips to a core data center.

- The pipeline needs to provide sensible results on properties of all sizes, ranging from a few requests per day to hundreds of thousands of requests per second.

- The pipeline must support a broad set of user-definable filters that are applied before any sampling occurs, such that a user can target and receive exactly what they want.

Workers and Durable Objects

Our existing Logpush pipeline relies heavily on Kafka to provide sharding, buffering, and aggregation at a single, central location. While we’ve had excellent results using Kafka for these pipelines, the clusters are optimized to run only within our core data centers. Using Kafka would require extra hops to far away data centers, adding a latency penalty we were not willing to incur.

In order to keep the data plane running on the edge, we needed primitives that would allow us to perform some of the same key functions we needed out of Kafka. This is where Workers and the recently released Durable Objects come in. Workers provide an incredibly simple to use, highly elastic, edge-native, compute platform we can use to receive events, and perform transformations. Durable Objects, through their global uniqueness, allow us to coordinate messages streaming from thousands of servers and route them to a singular object. This is where aggregation and buffering are performed, before finally pushing to a client over a thin WebSocket. We get all of this, without ever having to leave the edge!

Let’s walk through what this looks like in practice.

A Simple Start

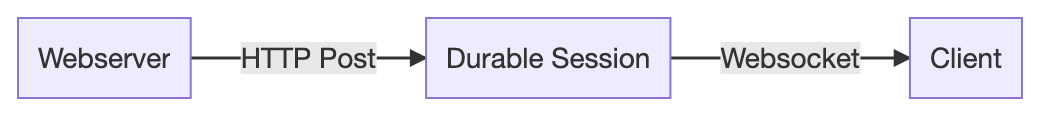

Imagine a simple scenario in which we have a single web server which produces log messages, and a single client which wants to consume them. This can be implemented by creating a Durable Object, which we will refer to as a Durable Session, that serves as the point of coordination between the server and client. In this case, the client initiates a WebSocket connection with the Durable Object, and the server sends messages to the Durable Object over HTTP, which are then forwarded directly to the client.

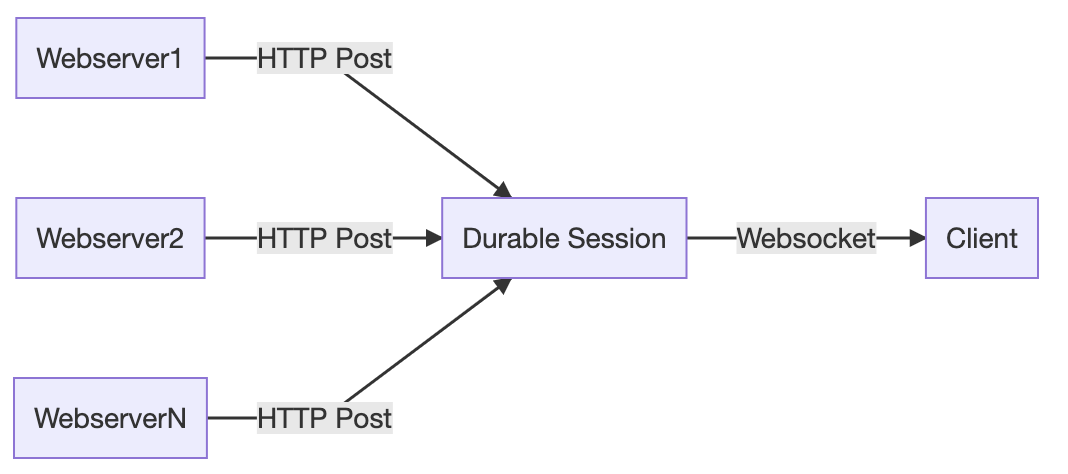

This model is quite quick and introduces very little additional latency other than what would be required to send a payload directly from the web server to the client. This is thanks to the fact that Durable Objects are generally located at or near the data center where they are first requested. At least in human terms, it’s instant. Adding more servers to our model is also trivial. As the additional servers produce events, they will all be routed to the same Durable Object, which merges them into a single stream, and sends them to the client over the same WebSocket.

Durable Objects are inherently single threaded. As the number of servers in our simple example increases, the Durable Object will eventually saturate its CPU time and will eventually start to reject incoming requests. And even if it didn’t, as data volumes increase, we risk overwhelming a client’s ability to download and render log lines. We’ll handle this in a few different ways.

Honing in on specific events

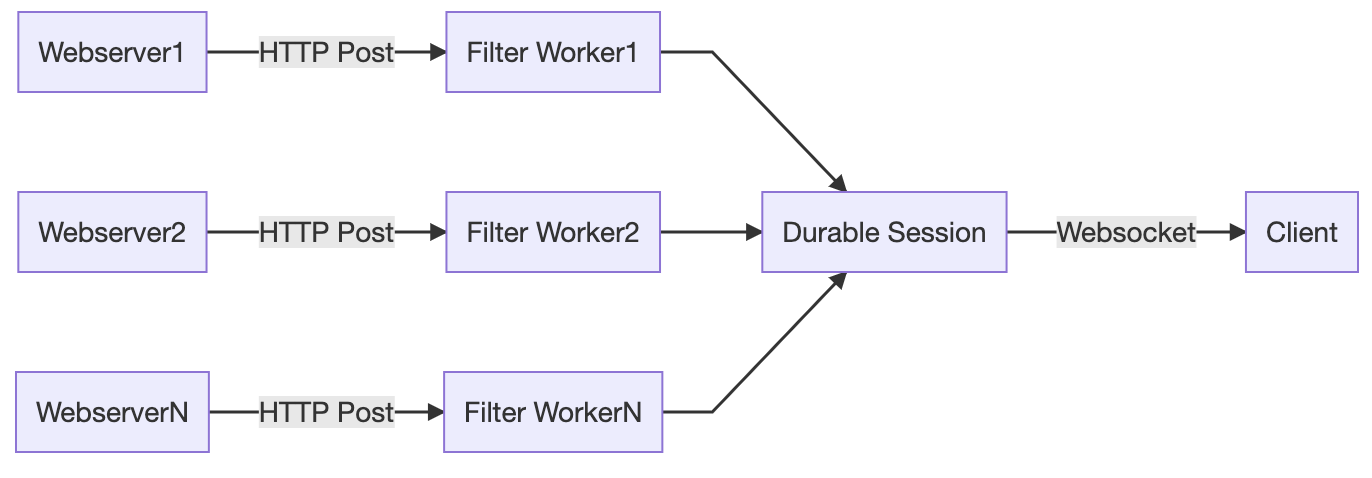

Filtering is the most simple and obvious way to reduce data volume before it reaches the client. If we can filter out the noise, and stream only the events of interest, we can substantially reduce volume. Performing this transformation in the Durable Object itself will provide no relief from CPU saturation concerns. Instead, we can push this filtering out to an invoking Worker, which will run many filter operations in parallel, as it elastically scales to process all the incoming requests to the Durable Object. At this point, our architecture starts to look a lot like the MapReduce pattern!

Scaling up with shards

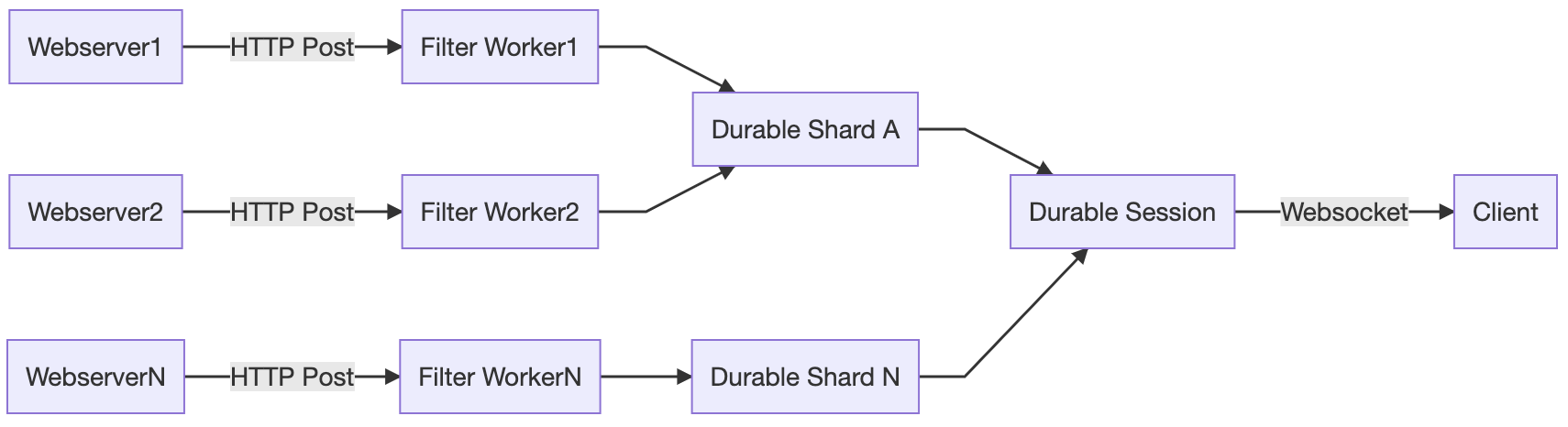

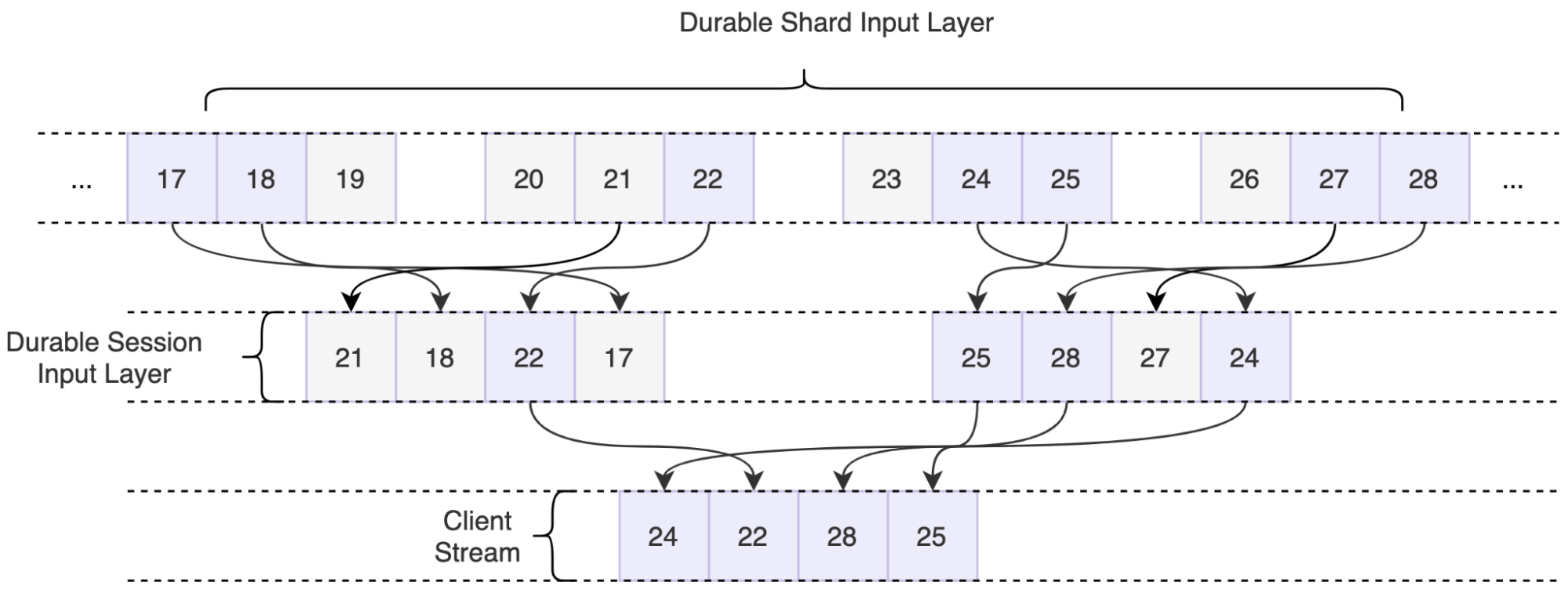

Ok, so filtering may be great in some situations, but it’s not going to save us under all scenarios. We still need a solution to help us coordinate between potentially thousands of servers that are sending events every single second. Durable Objects will come to the rescue, yet again. We can implement a sharding layer consisting of Durable Objects, we will call them Durable Shards, that effectively allow us to reduce the number of requests being sent to our primary object.

But how do we implement this layer if Durable Objects are globally unique? We first need to decide on a shard key, which is used to determine which Durable Object a given message should first be routed to. When the Worker processes a message, the key will be added to the name of the downstream Durable Object. Assuming our keys are well-balanced, this should effectively reduce the load on the primary Durable Object by approximately 1/N.

Reaching the moon by sampling

But wait, there’s more to do. Going back to our original product requirements, “The pipeline needs to provide sensible results on properties of all sizes, ranging from a few requests per day to hundreds of thousands of requests per second.” With the system as designed so far, we have the technical headroom to process an almost arbitrary number of logs. However, we’ve done nothing to reduce the absolute volume of messages that need to be processed and sent to the client, and at high log volumes, clients would quickly be overwhelmed. To deliver the interactive, instant user experience customers expect, we need to roll up our sleeves one more time.

This is where our final trick, sampling, comes into play.

Up to this point, when our pipeline saturates, it still makes forward progress by dropping excess data as the Durable Object starts to refuse connections. However, this form of ‘uncontrolled shedding’ is dangerous because it causes us to lose information. When we drop data in this way, we can’t keep a record of the data we dropped, and we cannot infer things about the original shape of the traffic from the messages that we do receive. Instead, we implement a form of ‘controlled’ sampling, which still preserves the statistics, and information about the original traffic.

For Instant Logs, we implement a sampling technique called Reservoir Sampling. Reservoir sampling is a form of dynamic sampling that has this amazing property of letting us pick a specific k number of items from a stream of unknown length n, with a single pass through the data. By buffering data in the reservoir, and flushing it on a short (sub second) time interval, we can output random samples to the client at the maximum data rate of our choosing. Sampling is implemented in both layers of Durable Objects.

Information about the original traffic shape is preserved by assigning a sample interval to each line, which is equivalent to the number of samples that were dropped for this given sample to make it through, or 1/probability. The actual number of requests can then be calculated by taking the sum of all sample intervals within a time window. This technique adds a slight amount of latency to the pipeline to account for buffering, but enables us to point an event source of nearly any size at the pipeline, and we can expect it will be handled in a sensible, controlled way.

Putting it all together

What we are left with is a pipeline that sensibly handles wildly different volumes of traffic, from single digits to hundreds of thousands of requests a second. It allows the user to pinpoint an exact event in a sea of millions, or calculate summaries over every single one. It delivers insight within seconds, all without ever having to do more than click a button.

Best of all? Workers and Durable Objects handle this workload with aplomb and no tuning, and the available developer tooling allowed me to be productive from my first day writing code targeting the Workers ecosystem.

How to get involved?

We’ll be starting our Beta for Instant Logs in a couple of weeks. Join the waitlist to get notified about when you can get access!

If you want to be part of building the future of data at Cloudflare, we’re hiring engineers for our data team in Lisbon, London, Austin, and San Francisco!