CloudFlare had an hour-long outage this last weekend. Thankfully, outages like this have been a relatively rare occurrence for our service. This is in spite of hundreds of thousands of customers, the enormous volume of legitimate traffic they generate, and the barrage of large denial of service attacks we are constantly mitigating on their behalf. While last weekend's outage exposed a flaw in our architecture that we're working to fully eliminate, our systems have largely been designed to be balanced and have no single points of failure. We haven't talked much about the architecture of CloudFlare's systems but thought the rest of the community might benefit from seeing some of the choices we've made, how we load balance our systems, and how this has allowed us to scale quickly and efficiently.

Failure Isn't an Option, It's a Fact

CloudFlare's architecture starts with an assumption: failure is going to happen. As a result, we have to plan for failure at every level and design a system that gracefully handles it when it occurs. To understand how we do this, you have to understand the components of CloudFlare's edge systems. Here are four critical components we deploy at the edge of our network:

- Network: CloudFlare's 23 data centers (internally we refer to them as PoPs) are connected to the rest of the world via multiple providers. These connections are through both transit (bandwidth) providers and other networks we peer with directly.

- Router: at the edge of each of our PoPs is a router. This router announces the paths packets take to CloudFlare's network from the rest of the Internet.

- Switch: within each PoP there will be one or more switches that aggregate traffic within the PoP's local area network (LAN).

- Server: behind each switch there is a collection of servers. These servers perform some of the key tasks to power CloudFlare's service including DNS resolution, proxying, caching, and logging.

Those are the four components you'll find in the racks that we run in locations around the world. You'll notice some things from a typical hardware stack seem to be missing. For example, there's no hardware load balancer. The problem with hardware load balancers (and hardware firewalls, for that matter) is that they often become the bottleneck and create a single point of failure themselves. Instead of relying on a piece of hardware to load balance across our network, we use routing protocols to spread traffic and handle failure.

Anycast Is Your Friend

For most of the Internet, IP addresses correspond to a single device connected to the public Internet. In your home or office, you may have multiple devices sitting behind a gateway using network address translation (NAT), but there is only one public IP address and each of the devices that sits behind the gateway uses a unique private IP address (e.g., in the space 192.168.X.X or 10.X.X.X). The general rule on the Internet is one unique IP per device. This is a routing scheme known as Unicast. However, it's not the only way.

There are four major routing schemes: Unicast, Multicast, Broadcast, and Anycast. Multicast and Broadcast are so-called one-to-many routing schemes. With Broadcast, one node sends packets that hit all recipient nodes. Broadcast is not widely used any longer and was actually not implemented in IPv6 (its largest contemporary use has likely been launching SMURF DDoS attacks). With Multicast, one node sends packets that hit multiple (but not all) recipient nodes that have opted into a group (e.g., how a cable company may deliver a television broadcast over an IP network).

Unicast and Anycast are one-to-one routing schemes. In both, there is one sender and one recipient of the packet. The difference between the two is that while there is only one possible destination on the entire network for a packet sent over Unicast, with Anycast there are multiple possible destinations and the network itself picks the most preferred route. On the wide area network (WAN) -- a.k.a. the Internet -- this preference is for the shortest path from the sender to the recipient. On the LAN, the preferences can be set with weights that are honored by the router.

Anycast at the WAN

At CloudFlare, we use Anycast at two levels: the WAN and the LAN. At the WAN level, every router in all of CloudFlare's 23 data centers announces all of our external-facing IP addresses. For example, one of the IPs that CloudFlare announces for DNS services is 173.245.58.205. A route to that IP address is announced from all 23 CloudFlare data centers. When you send a packet to that IP address, it passes through a series of routers. Those routers look at the available paths to CloudFlare's end points and send the packet down the one with the fewest stops along the way (i.e., "hops"). You can run a traceroute to see each of these steps.

If I run a traceroute from CloudFlare's office in San Francisco, the path my packets take is:

    $ traceroute 173.245.58.205
    traceroute to 173.245.58.205 (173.245.58.205), 64 hops max, 52 byte packets
     1  192.168.2.1 (192.168.2.1)  3.473 ms  1.399 ms  1.247 ms
     2  10.10.11.1 (10.10.11.1)  3.136 ms  2.857 ms  3.206 ms
     3  ge-0-2-5.cr1.sfo1.us.nlayer.net (69.22.X.X)  2.936 ms  3.405 ms  3.193 ms
     4  ae3-70g.cr1.pao1.us.nlayer.net (69.22.143.170)  3.638 ms  4.076 ms  3.911 ms
     5  ae1-70g.cr1.sjc1.us.nlayer.net (69.22.143.165)  4.833 ms  4.874 ms  4.973 ms
     6  ae1-40g.ar2.sjc1.us.nlayer.net (69.22.143.118)  8.926 ms  8.529 ms  6.742 ms
     7  as13335.xe-8-0-5.ar2.sjc1.us.nlayer.net (69.22.130.146)  5.048 ms
     8  173.245.58.205 (173.245.58.205)  4.601 ms  4.338 ms  4.611 ms

If I run the same traceroute from a Linode server in London, the path my packets take is:

    $ traceroute 173.245.58.205
    traceroute to 173.245.58.205 (173.245.58.205), 30 hops max, 60 byte packets
     1  212.111.X.X (212.111.X.X)  6.574 ms  6.514 ms  6.522 ms
     2  212.111.33.X (212.111.33.X)  0.934 ms  0.935 ms  0.969 ms
     3  85.90.238.69 (85.90.238.69)  1.396 ms  1.381 ms  1.405 ms
     4  ldn-b3-link.telia.net (80.239.167.93)  0.700 ms  0.696 ms  0.670 ms
     5  ldn-bb1-link.telia.net (80.91.247.24)  2.349 ms  0.700 ms  0.671 ms
     6  ldn-b5-link.telia.net (80.91.246.147)  0.759 ms  0.771 ms  0.774 ms
     7  cloudflare-ic-154357-ldn-b5.c.telia.net (80.239.161.246)  0.917 ms  0.853 ms  0.833 ms
     8  173.245.58.205 (173.245.58.205)  0.972 ms  1.292 ms  0.916 ms

In both cases, the 8th and final hop is the same. You can tell, however, that they are hitting different CloudFlare data centers from hints in the 7th hop: as13335.xe-8-0-5.ar2.sjc1.us.nlayer.net suggests the first trace is hitting San Jose, and cloudflare-ic-154357-ldn-b5.c.telia.net suggests the second is hitting London.

Since packets will follow the shortest path, if a particular path is withdrawn then packets will find their way to the next shortest available route. For simple protocols like UDP that don't maintain state, Anycast is ideal and it has been used widely to load balance DNS for some time. At CloudFlare, we've done a significant amount of engineering to allow TCP to run across Anycast without flapping. This involves carefully adjusting routes in order to get optimal routing and also adjusting the way we handle protocol negotiation itself. While more complex to maintain than a Unicast network, the benefit is we can lose an entire data center and packets flow to the next closest facility without anyone noticing a hiccup.
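To make the failover behavior concrete, here is a minimal sketch in Python of one Anycast IP announced from several sites. It is only a toy model: the site names and hop counts are made-up numbers as seen from one hypothetical sender, the `AnycastPrefix` class is invented for illustration, and real BGP path selection weighs more attributes than a single hop count.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class AnycastPrefix:
    """One IP announced from several sites (toy model, hypothetical names)."""
    ip: str
    # site name -> hop count as seen from one particular sender (made-up values)
    hops_by_site: Dict[str, int] = field(default_factory=dict)

    def announce(self, site: str, hops: int) -> None:
        """A site starts announcing a route to the shared IP."""
        self.hops_by_site[site] = hops

    def withdraw(self, site: str) -> None:
        """A site stops announcing (e.g. the whole data center is lost)."""
        self.hops_by_site.pop(site, None)

    def best_site(self) -> Optional[str]:
        """Return the site with the fewest hops, or None if nobody announces."""
        if not self.hops_by_site:
            return None
        return min(self.hops_by_site, key=self.hops_by_site.get)


prefix = AnycastPrefix("173.245.58.205")
prefix.announce("San Jose", 8)
prefix.announce("Tokyo", 12)
prefix.announce("London", 14)

before = prefix.best_site()   # nearest site while everything is up
prefix.withdraw("San Jose")   # the nearest site disappears
after = prefix.best_site()    # traffic moves to the next closest site
print(before, "->", after)
```

The sender never changes the destination IP. Only the set of announcements changes, and the choice of site follows from that.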

Anycast in the LAN

Once a packet arrives at a particular CloudFlare data center we want to ensure it gets to a server that can correctly handle the request. There are four key tasks that CloudFlare's servers perform: DNS, proxy, cache, and logging. We tend to follow the Google-like approach and deploy generic, white-box servers that can perform a number of different functions. (Incidentally, if anyone is interested, we're thinking of doing a blog post to "tour" a typical CloudFlare server and discuss the choices we made in working with manufacturers to design them.) Since servers can fail or be overloaded, we need to be able to route traffic intelligently around problems. For that, we return to our old friend Anycast.

Using Anycast, each server within each of CloudFlare's data centers is set up to receive traffic from any of our public IP addresses. The routes to these servers are announced via the border gateway protocol (BGP) from the servers themselves. To do this we use a piece of software called Bird. (You can tell it's an awesomely intense piece of networking software just by looking at one of its developers.) While all servers announce a route across the LAN for all the IPs, each server assigns its own weight to each IP's route. The router is then configured such that the route with the lowest weight is preferred.

If a server crashes, Bird stops announcing the BGP route to the router. The router then begins sending traffic to the server with the next-lowest weighted route. We also monitor critical processes on each server. If any of these critical processes fails, the monitor can signal Bird to withdraw a route. This is not all or nothing. The monitor is aware of the server's own load as well as the load on the other servers in the data center. If a particular server starts to become overloaded, and it appears there is sufficient capacity elsewhere, then just some of the BGP routes can be withdrawn to take some traffic away from the overloaded server.
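The weighted-route behavior can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not our router configuration: `PopRouter`, the server names, the weights, and the 192.0.2.x documentation addresses are all hypothetical.

```python
from typing import Dict, Optional


class PopRouter:
    """Toy router that prefers the lowest-weight route for each IP."""

    def __init__(self) -> None:
        # ip -> {server name -> weight announced by that server}
        self.routes: Dict[str, Dict[str, int]] = {}

    def announce(self, server: str, ip: str, weight: int) -> None:
        self.routes.setdefault(ip, {})[server] = weight

    def withdraw(self, server: str, ip: str) -> None:
        """Withdraw one route, e.g. to shed load from a busy server."""
        self.routes.get(ip, {}).pop(server, None)

    def withdraw_all(self, server: str) -> None:
        """Withdraw every route from a server, as happens when it crashes."""
        for per_ip in self.routes.values():
            per_ip.pop(server, None)

    def next_hop(self, ip: str) -> Optional[str]:
        candidates = self.routes.get(ip)
        if not candidates:
            return None
        return min(candidates, key=candidates.get)


router = PopRouter()
ip1, ip2 = "192.0.2.1", "192.0.2.2"
for server, w1, w2 in [("srv-a", 10, 10), ("srv-b", 20, 30), ("srv-c", 30, 20)]:
    router.announce(server, ip1, w1)
    router.announce(server, ip2, w2)

initial = (router.next_hop(ip1), router.next_hop(ip2))    # srv-a holds both IPs

router.withdraw("srv-a", ip1)                             # srv-a is overloaded: shed one IP
after_shed = (router.next_hop(ip1), router.next_hop(ip2))

router.withdraw_all("srv-a")                              # srv-a crashes outright
after_crash = (router.next_hop(ip1), router.next_hop(ip2))
print(initial, after_shed, after_crash)
```

Because each server sets different weights per IP, a failed server's traffic does not all land on the same neighbor.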

Beyond failover, we are beginning to experiment with BGP to do true load balancing. In this case, the weights to multiple servers are the same and the router hashes the source IP, destination IP, and port in order to consistently route traffic to the same server. The hash mapping table can be adjusted to increase or decrease load to any machine in the cluster. This is relatively easy with simple protocols like UDP, so we're playing with it for DNS. It's trickier with protocols that need to maintain some session state, like TCP, and gets trickier still when you throw in SSL, but we have some cool things in our lab that will help us better spread load across all the available resources.
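As a rough illustration of the hashing idea, the sketch below maps a flow's source IP, destination IP, and port to a bucket, then looks the bucket up in an adjustable table of servers. The function names, server names, and the use of SHA-256 are assumptions made for the example; a real router uses its own hardware hash.

```python
import hashlib
from typing import List


def flow_bucket(src_ip: str, dst_ip: str, port: int, n_buckets: int) -> int:
    """Hash a flow's identifying fields to a stable bucket number."""
    key = f"{src_ip}|{dst_ip}|{port}".encode()
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:8], "big") % n_buckets


def pick_server(table: List[str], src_ip: str, dst_ip: str, port: int) -> str:
    """Look the flow's bucket up in the bucket -> server mapping table."""
    return table[flow_bucket(src_ip, dst_ip, port, len(table))]


# Eight buckets split evenly between two hypothetical servers.
even_table = ["srv-a"] * 4 + ["srv-b"] * 4
# Reassigning buckets shifts load; here srv-a keeps only a quarter of them.
skewed_table = ["srv-a"] * 2 + ["srv-b"] * 6
# Draining srv-a entirely: every bucket points at srv-b.
drained_table = ["srv-b"] * 8

flow = ("198.51.100.7", "192.0.2.53", 53)
first = pick_server(even_table, *flow)
second = pick_server(even_table, *flow)   # same flow, same server
print(first, second, pick_server(skewed_table, *flow))
```

The table size stays fixed and only the bucket assignments change, so a flow whose bucket is not reassigned keeps landing on the same machine.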

Failure Scenarios

To understand this architecture, it's useful to think through some common failure scenarios.

- Process Crash: if a core process (DNS, proxy, cache, or logging) crashes then the monitor daemon running on the server detects the failure. The monitor signals Bird to withdraw the BGP routes that are routed to that process (e.g., if just DNS crashes then the IPs that are used for CloudFlare name servers will be withdrawn, but the server will still respond to proxy traffic). With the routes withdrawn, the router in the data center sends traffic to the route with the next-lowest weight. The monitor daemon restarts the DNS server and, after verifying it has come up cleanly, signals Bird to start announcing routes again.

- Server Crash: if a whole server crashes, Bird crashes along with it. All BGP routes to the server are withdrawn and the router sends traffic to the servers with the next-lowest route weights. A monitor process on a control server within the data center attempts to reboot the box using the IPMI management interface and, if that fails, power cycles it via the fancy power strip (PDU). After the monitor process has verified the box has come back up cleanly, Bird is restarted and routes to the server are reinitiated.

- Switch Crash: if a switch fails, all BGP routes to the servers behind the switch are automatically withdrawn. The routers are configured so that, if they lose enough routes to the machines, they drop the IPs that correspond to those routes out of the WAN Anycast pool. Traffic for those IPs fails over to the next closest data center. Monitors both inside and outside the affected data center alert our networking team, who monitor the network 24/7, that there has been a switch failure so they can investigate and attempt a reboot.

- Router Crash: if a router fails, all BGP routes across the WAN are withdrawn for the data center for which the router is responsible. Traffic to the data center automatically fails over to the next closest data center. Monitors both inside and outside the affected data center alert our networking team, who monitor the network 24/7, that there has been a router failure so they can investigate and attempt a reboot.

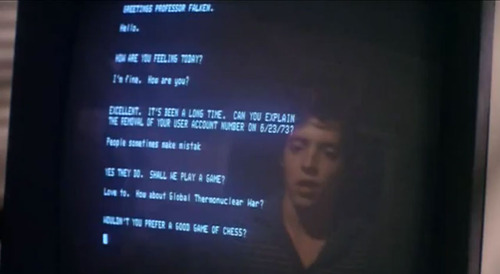

- Global Thermonuclear War: would be bad, but CloudFlare may continue to be able to route traffic to whatever portion of the Internet is left. As facilities were vaporized (starting with Las Vegas) their routers would stop announcing routes. As long as some facilities remained connected to whatever remained of the network (maybe Sydney, Australia?) they would provide a path for traffic destined for our customers. We've designed the network such that more than half of it can completely fail and we'll still be able to keep up with the traffic.
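The Process Crash scenario from the list above can be sketched as a small monitoring loop. This is an illustrative model only: `RouteAnnouncer` is a hypothetical stand-in that records announcements rather than talking to Bird, and the service names, IPs, and health and restart callbacks are invented for the example.

```python
from typing import Callable, Dict, List, Set, Tuple


class RouteAnnouncer:
    """Hypothetical stand-in for the routing daemon; it only records events."""

    def __init__(self) -> None:
        self.announced: Set[str] = set()
        self.log: List[Tuple[str, str]] = []

    def announce(self, ips: List[str]) -> None:
        for ip in ips:
            if ip not in self.announced:
                self.announced.add(ip)
                self.log.append(("announce", ip))

    def withdraw(self, ips: List[str]) -> None:
        for ip in ips:
            if ip in self.announced:
                self.announced.remove(ip)
                self.log.append(("withdraw", ip))


class Monitor:
    """Withdraws only the routes that belong to the failed service."""

    def __init__(self, announcer: RouteAnnouncer, service_ips: Dict[str, List[str]],
                 is_healthy: Callable[[str], bool], restart: Callable[[str], None]) -> None:
        self.announcer = announcer
        self.service_ips = service_ips
        self.is_healthy = is_healthy
        self.restart = restart

    def check(self, service: str) -> str:
        """Run one monitoring pass for a service and report what happened."""
        ips = self.service_ips[service]
        if self.is_healthy(service):
            self.announcer.announce(ips)
            return "healthy"
        self.announcer.withdraw(ips)       # stop attracting traffic first
        self.restart(service)
        if self.is_healthy(service):       # verify before announcing again
            self.announcer.announce(ips)
            return "recovered"
        return "withdrawn"


state = {"dns": True, "proxy": True}
service_ips = {"dns": ["192.0.2.53"], "proxy": ["192.0.2.80"]}
announcer = RouteAnnouncer()
monitor = Monitor(announcer, service_ips,
                  is_healthy=lambda s: state[s],
                  restart=lambda s: state.update({s: True}))

monitor.check("dns")
monitor.check("proxy")
state["dns"] = False                 # simulate the DNS process crashing
outcome = monitor.check("dns")
print(outcome, announcer.log)
```

In this run the proxy IP is never withdrawn, which mirrors the point that a DNS crash leaves the server answering proxy traffic.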

It's a rare company our size that gets to play with systems to globally load balance Internet-scale traffic. While we've done a number of smart things to build a very fault tolerant network, last weekend's events prove there is more work to be done. If these are the sort of problems that excite you and you're interested in helping build a network that can survive almost anything, we're hiring.