I grew up with DOS and Windows 3.1. I remember applications being fast - instant feedback or close to it. Today, native applications like Outlook or Apple Mail still feel fast - click compose and the window is there instantly and it feels snappy. Internet applications do not.

My first Internet experience was paying $30 for a prepaid card with 10 hours of access over a 14.4k modem. First, it was bulletin boards and later IRC and the WWW. From my small seaside town in Australia, the Internet was a window into the wider world, but it was slooooooow. In a way, it didn’t matter. Given the world of opportunities the Internet opened up, from information and music to socializing and ecommerce, who cared if it was slow? The utility of the Internet and Internet applications meant I would use them regardless of the experience.

Performance improved from the 90s, but in 2008 when I switched from Outlook downloading my Yahoo! email over IMAP to Gmail in the browser, it wasn’t because it was faster - it wasn’t - it was because features like search, backed up mail, and unlimited storage were too good to resist. The cloud computing power that Google could bring to bear on my mail meant I was happy to trade native performance for a browser-based one that wasn’t bad, but definitely not snappy.

Efforts like Electron have attempted to blend performance and utility by offering a host in the native windowing technology, which loads an HTML5 app. It’s not working. Some of the most popular Electron apps today are not snappy at all.

Recorded on a 2013 MacBook with 8 GB of RAM

How did we get here?

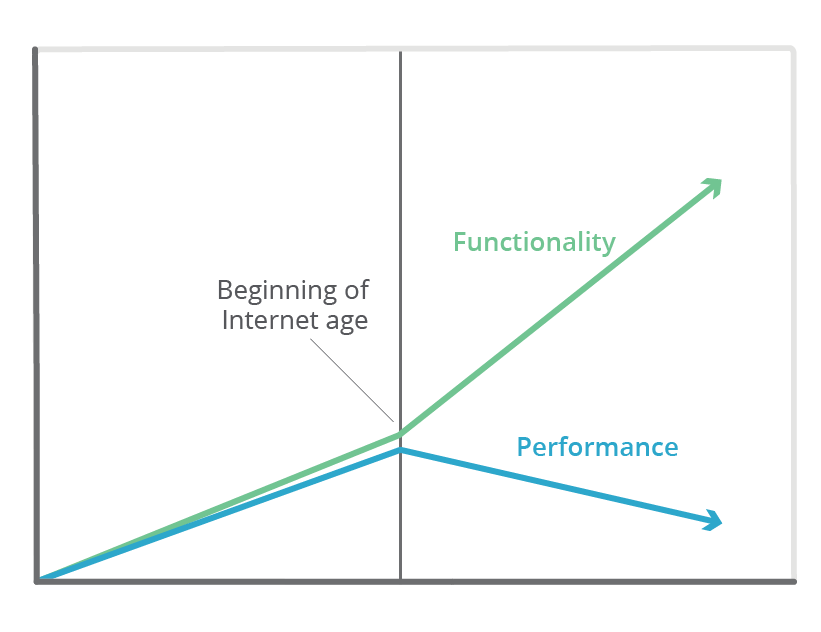

So how did we get here and where are we going? I think of applications during the period of 1980-2018 like this:

1980-2000 Functionality and performance increased at a similar rate

2000-2018 Functionality increased during the Internet age, but perceived performance degraded

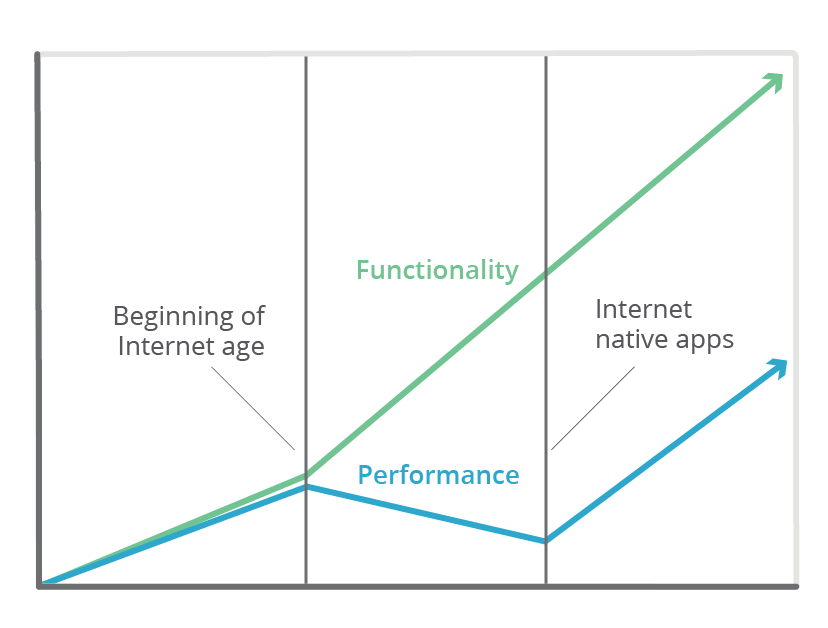

I think we’re entering the next phase, whereby performance and the user experience will again track the rise in the utility of those apps. I predict we’ll go back to a chart like this:

To summarize, while the Internet and the “Cloud” brought a huge increase in functionality, the performance of Internet Applications has been hampered by:

Downloading interpreted code

Over chatty protocols

From origin servers far away from users

This has prevented Internet Applications from achieving their potential of being both performant and functional. This is changing, and I am defining this new era of applications as Internet Native Applications.

Where are we going?

Internet Native Applications will combine the utility of Internet apps with the speed of local desktop apps. These apps will feel magical, as functionality that previously lived in some distant data center will now feel like an extension of the computer itself.

How will this happen and what will be the drivers?

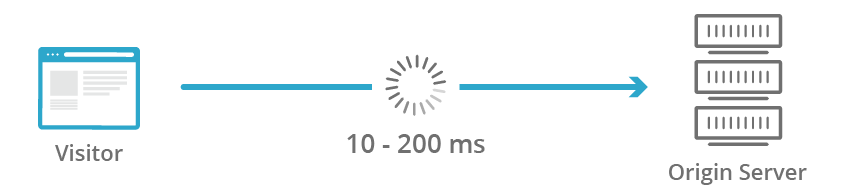

1. Remote services will be embedded in the network itself less than 10ms from the client.

As huge Edge networks get closer to end-users, the perceived performance cost of not serving a request from the Edge and requiring a round-trip to a legacy cloud provider will increase. In a world where users will come to expect near-instant responses from Internet Native applications, a response not served from the Edge and requiring a trip to a centralized cloud-based origin will feel like a "cache miss".

In the same way systems programmers have to consider the impact of L1 vs L2 vs Main Memory access, so too will Internet Native application architects consider carefully each time an application requires a resource to be fetched or a computation to be run from a centralized data center, as opposed to the Edge. As more and more services are available <10ms from the end user, the cost of the new “cache miss” will increase. For comparison, a disk seek on an average desktop HDD costs around 10ms - the same time it takes to get a request to Edge, so Edge network requests will feel local - requests to far flung centralized data centers will be noticeably slow.
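The cache analogy can be made concrete with the average-access-time formula that systems programmers use for memory hierarchies. The sketch below is illustrative only: the function name, the 100 ms origin penalty, and the hit ratios are assumptions rather than measurements. Only the 10 ms Edge figure comes from the text above.

```python
def expected_latency_ms(edge_hit_ratio: float,
                        edge_ms: float = 10.0,
                        origin_penalty_ms: float = 100.0) -> float:
    """Average request latency when the Edge acts like a cache.

    Every request pays the trip to the Edge. A "cache miss" additionally
    pays the round trip from the Edge to the centralized origin.
    """
    if not 0.0 <= edge_hit_ratio <= 1.0:
        raise ValueError("edge_hit_ratio must be between 0 and 1")
    miss_ratio = 1.0 - edge_hit_ratio
    return edge_ms + miss_ratio * origin_penalty_ms


if __name__ == "__main__":
    # Assumed hit ratios, chosen only to show the trend.
    for ratio in (0.5, 0.9, 0.99):
        print(f"{ratio:.0%} served at the Edge: "
              f"{expected_latency_ms(ratio):.1f} ms on average")
```

Under these assumed numbers, the average is dominated by the miss penalty until almost every request is served from the Edge, which is why each trip to the origin deserves the same scrutiny as a main-memory access.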

APIs, storage, and eventually even data-intensive tasks will be processed on the Edge. To the user, it will feel like this magical functionality is just an extension of their computer. As network compute nodes push further and further towards users, from Internet Exchange Points (IXs), to ISPs and even to cell towers, it will indeed be close to the truth.

Near instant

The new "cache miss"

2. Client-side code will be executed at near-native speed using WebAssembly.

Ever since the web emerged as the ultimate app distribution platform, there have been attempts to embed runtimes in browsers. Java Applets, ActiveX and Flash to name a few. Java probably made the most headway, but was plagued by write-once-debug-everywhere issues and installation friction.

WASM is gaining momentum and now ships in all major browsers. It's still an MVP, but with wide support and promising early performance testing, expect more and more apps to take advantage of WASM. It's early days – WASM can't interact with the DOM yet, but that is coming too. The debate is ongoing, but I believe WASM will enable user interaction with UI elements in the browser to get much closer to the “native” speed of the past.

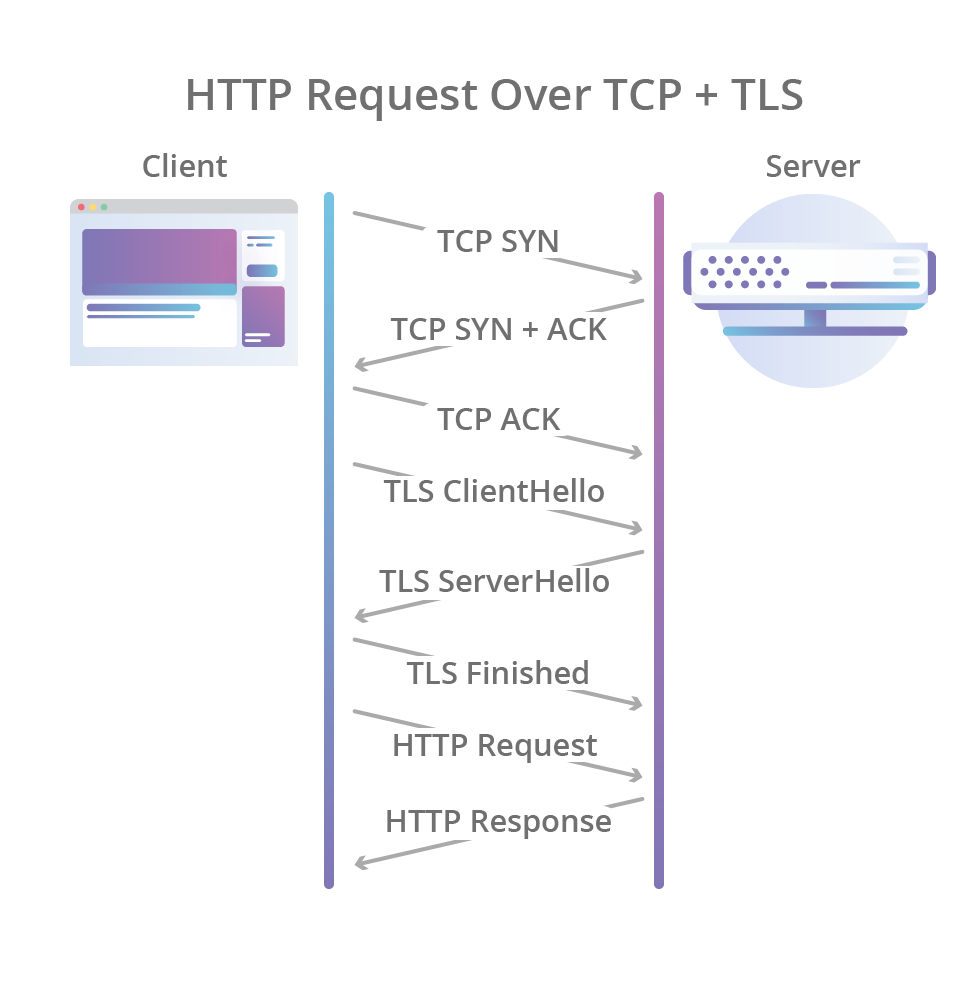

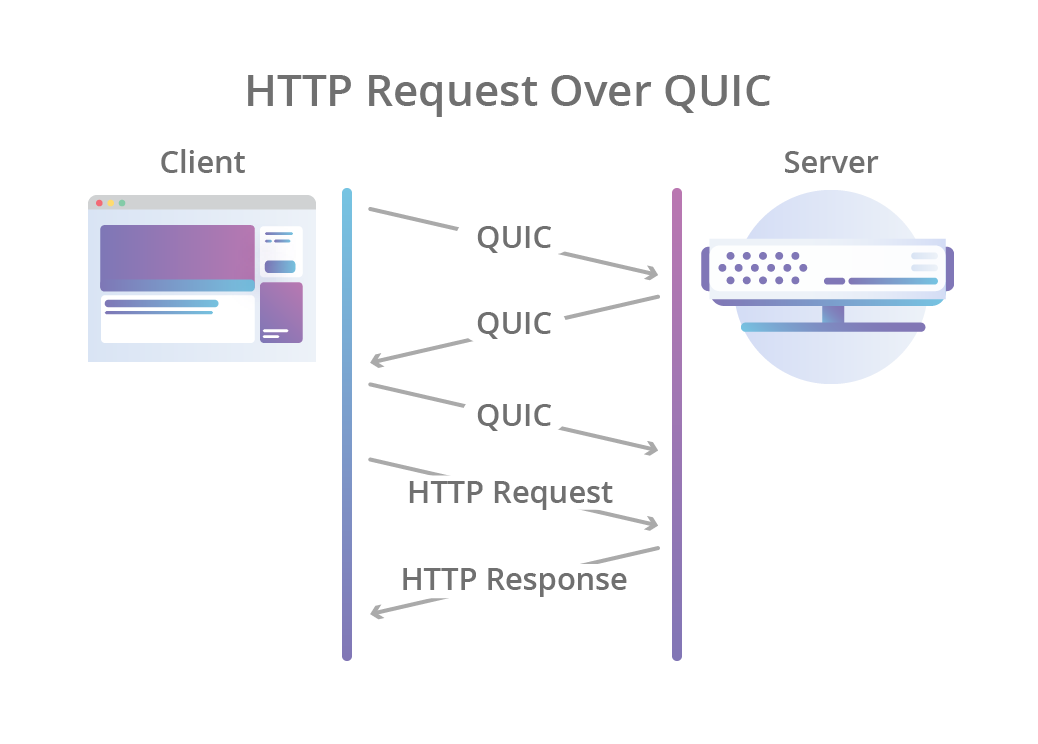

3. Clients will exchange data using a new generation of performance-based protocols.

TCP, standardized in 1981 (RFC 793), can no longer meet the needs of the highest performing Internet applications. Dropped packets on mobile networks cause TCP to back off, and newer protocols handle congestion control better.
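To see why a dropped packet hurts, here is a deliberately simplified sketch of additive-increase/multiplicative-decrease (AIMD), the congestion-control pattern classic TCP uses. It is not a model of any real TCP stack: the function name, the starting window, and the loss pattern are assumptions for illustration.

```python
from typing import List, Set


def aimd_window(rounds: int, loss_rounds: Set[int],
                initial_cwnd: float = 10.0) -> List[float]:
    """Return the congestion window (in packets) after each round trip."""
    cwnd = initial_cwnd
    history = []
    for rtt in range(rounds):
        if rtt in loss_rounds:
            # Multiplicative decrease: a loss halves the window.
            cwnd = max(1.0, cwnd / 2)
        else:
            # Additive increase: grow by one packet per round trip.
            cwnd += 1.0
        history.append(cwnd)
    return history


if __name__ == "__main__":
    # A single hypothetical loss in round 3.
    print(aimd_window(rounds=8, loss_rounds={3}))
```

In this toy model, one loss undoes several round trips of growth. On a lossy mobile link, drops that have nothing to do with congestion trigger the same halving.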

QUIC, now under the auspices of the IETF and starting to see Internet-facing server implementations, will underpin the connection of Internet Native applications to their nearest network compute node, mostly over an efficient, multiplexed connection carrying HTTP/2-style streams.

HTTPS

QUIC

Market Implications

More services to move to the Edge

With the Edge now a general-compute platform, more and more services will move there, driving up the percentage of requests that can be fulfilled within 10ms. Legacy cloud providers will continue to provide services like AI/ML training, data pipelines and "big data" applications for some time, but many services will move "up the stack", to be closer to the user.

The old “Cloud” becomes a pluggable implementation detail

Source: https://github.com/google/go-cloud

CIOs woke up years ago to the risk of vendor lock-in and have defended against it in a variety of forms, such as hybrid clouds, open source, and container orchestration. Google made another attempt to further commoditize cloud services with its launch of go-cloud. It gives application architects a general way to define cloud resources without tying them to a specific vendor.

I believe Internet Native applications will increasingly define their cloud dependencies, e.g., storage, as pluggable implementation details, and deploy policies on the edge to route according to latency, cost, privacy and other domain-specific criteria.
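As a rough illustration of what such a routing policy could look like, the sketch below picks a storage backend by latency, cost, and a simple privacy rule. Everything in it is hypothetical: the `StorageBackend` fields, the `choose_backend` function, and the provider names are assumptions made for this example, and it does not use the go-cloud API.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass(frozen=True)
class StorageBackend:
    """A hypothetical description of one pluggable storage provider."""
    name: str
    latency_ms: float   # as measured from the Edge node
    cost_per_gb: float  # arbitrary currency units
    region: str         # where the data physically lives


def choose_backend(backends: Iterable[StorageBackend],
                   max_latency_ms: float,
                   allowed_regions: Optional[Set[str]] = None) -> StorageBackend:
    """Pick the cheapest backend that satisfies latency and privacy rules."""
    candidates = [
        b for b in backends
        if b.latency_ms <= max_latency_ms
        and (allowed_regions is None or b.region in allowed_regions)
    ]
    if not candidates:
        raise LookupError("no backend satisfies the routing policy")
    # Cheapest first; break ties by lower latency.
    return min(candidates, key=lambda b: (b.cost_per_gb, b.latency_ms))


if __name__ == "__main__":
    backends = [
        StorageBackend("provider-a", latency_ms=40, cost_per_gb=0.02, region="us"),
        StorageBackend("provider-b", latency_ms=15, cost_per_gb=0.03, region="eu"),
        StorageBackend("provider-c", latency_ms=90, cost_per_gb=0.01, region="eu"),
    ]
    # Privacy rule: data must stay in the EU. Latency budget: 50 ms.
    print(choose_backend(backends, max_latency_ms=50, allowed_regions={"eu"}).name)
```

Because the application only depends on the policy and the backend description, swapping one provider for another becomes a data change rather than a code change.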

Summary

No single technology defines Internet Native Applications. However, I believe the combination of:

A rapidly increasing percentage of requests serviced directly from the Edge under 10ms

Near-native speed code on the browser, powered by WebAssembly

Improved Internet protocols like QUIC and HTTP/2

will usher in a new era of performance for Internet-based applications. I believe the difference in user experience will be so significant that it will bring about a fundamental shift in the way we architect the applications of tomorrow (Internet Native Applications), and engineers, architects and CIOs need to start planning for this shift now.

Closing Note

Do you remember people asking if “you have the Internet on your computer?” when people didn’t really understand what the Internet was? Internet Native applications will finally make it irrelevant – you won’t be able to tell the difference.

Want to work on technology powering the next era? I’m hiring for Cloudflare in San Francisco and Austin.