Every day, close to 1 billion people watch video through Cloudflare. That’s 100 petabytes of video every month. Last year, video made up 73% of all internet traffic, which is why earlier today we announced Cloudflare Stream, an end-to-end video solution designed to bring instant video to any device and network connection. Here’s how Stream works to optimize video upload, encoding, and delivery.

Uploads

Using Cloudflare Stream starts with a call to the Stream API to /upload a video.

HTTP does not by default provide a reliable upload mechanism for large files, which can make it tricky to upload large media content like high quality video. If there’s any latency in the network connection, a timeout can cancel the entire upload and require the client to start over.

We use an open source upload protocol called tus, which makes uploads resumable: if an upload fails, it can pick up from where it stopped instead of starting over. Tus does this by splitting the upload into manageable chunks and tracking completed chunks on the server.
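To make the idea concrete, here is a minimal in-memory sketch of a resumable, chunked upload. It is not the Stream API or a tus client: `UploadServer`, `upload`, and the `fail_after` parameter are hypothetical names made up for this illustration, and no network is involved. In the real protocol the client learns the server's current offset with a `HEAD` request and sends each chunk with a `PATCH` request; the sketch only mimics that bookkeeping.

```python
class UploadServer:
    """In-memory stand-in for a server that tracks how many bytes it has stored."""

    def __init__(self):
        self.data = bytearray()

    def offset(self):
        # A resuming client asks for this before sending anything.
        return len(self.data)

    def append(self, offset, chunk):
        # Reject chunks that do not start exactly where the stored data ends.
        if offset != len(self.data):
            raise ValueError("offset mismatch")
        self.data.extend(chunk)
        return len(self.data)


def upload(server, payload, chunk_size, fail_after=None):
    """Send payload in chunks, starting from the server's current offset.

    fail_after (hypothetical) simulates a dropped connection after that many chunks.
    """
    offset = server.offset()
    sent = 0
    while offset < len(payload):
        if fail_after is not None and sent >= fail_after:
            raise ConnectionError("simulated network failure")
        chunk = payload[offset:offset + chunk_size]
        offset = server.append(offset, chunk)
        sent += 1
    return offset


video = bytes(range(256)) * 40          # 10,240 bytes of stand-in "video"
server = UploadServer()

try:
    upload(server, video, chunk_size=1024, fail_after=3)
except ConnectionError:
    pass                                # the first 3 chunks are already stored

resumed_from = server.offset()          # where the second attempt will start
upload(server, video, chunk_size=1024)  # sends only the remaining bytes
```

Because the server remembers its offset, the second call never resends the chunks that already arrived.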

Encodes

Video files are containers that hold the video stream, the audio track, and some metadata. The video stream is compressed by a codec, a compression algorithm for encoding and decoding video. Browsers use codecs to decode and decompress the video for playback. Common container formats include MPEG, AVI, OGG and MP4. (You can usually tell the container format by the file extension.) Common codecs include H.264, HEVC (which Apple just announced support for as part of iOS 11), and VP9.

Different browsers support different codecs so in order to run the best quality video on all browsers and devices, video providers need to encode a single video to multiple formats. For example, Netflix uses HEVC/H.265 to get 50% bitrate savings over its predecessor, but that format is only supported on the newest version of Safari on the latest Mac and iOS operating systems, so they fall back when streaming on other browsers and devices. Likewise, YouTube uses VP9 to deliver 4K video at half the bandwidth, but it isn’t supported on Safari, IE or Opera so they use other formats on those browsers.
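The fallback logic described above can be sketched in a few lines. This is an illustration only, not how Netflix, YouTube, or Cloudflare actually choose a format: the preference order and the `pick_codec` helper are assumptions made for the example.

```python
# Assumed preference order for illustration: most bandwidth-efficient first,
# most widely supported last.
PREFERENCE = ["hevc", "vp9", "h264"]


def pick_codec(client_supports, encoded):
    """Return the most preferred codec that the client can decode
    and that the provider has an encoding for, or None if there is none."""
    for codec in PREFERENCE:
        if codec in client_supports and codec in encoded:
            return codec
    return None


available = {"hevc", "vp9", "h264"}            # encodings the provider has made
newest_safari = pick_codec({"hevc", "h264"}, available)
chrome = pick_codec({"vp9", "h264"}, available)
older_browser = pick_codec({"h264"}, available)
```

The more formats a provider encodes, the more often a client gets something better than the lowest common denominator.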

Once you upload a video to Cloudflare Stream, Cloudflare immediately starts encoding the video and storing each encoded version. Today we encode to H.264, which is supported by all modern browsers and is known for supporting high quality video including 4K, and to MP4 for legacy support. We’re adding more optimized codecs like VP9 and HEVC next.

Delivery: Streaming

With normal HTTP streaming, the client pulls down the video while the user watches it. That means for video to play without buffering, the bitrate needs to be below the bandwidth available on the connection. As more clients watch video on their phones, the available bandwidth is often lower, and this method sacrifices video quality.

Instead, we use progressive downloads, also called pseudo streaming, in which the video is streamed from the HTTP server and then cached on the client, where it is played from the hard drive or from memory. This allows us to encode at higher bitrates, preserving quality.

Alongside progressive downloads, we use a technology called adaptive streaming in which the server offers multiple bitrate streams and the client switches between them based on the current network connectivity. To accomplish this, the video is chunked, and the client can switch between profiles in between the video chunks by downloading the following chunk from a different bitrate stream. You might have seen this in action when watching a video where the image quality changes in response to your network connection.

Each chunk contains valid video, audio, and some metadata, which means that each chunk can be played independently. What differs between the streams is the video in each chunk: its pixel dimensions and frame rate (that is, its level of detail). However, the start moment of each chunk is lined up between streams so there’s no skipping as the client switches between streams.
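As a rough sketch of the client side, a player can re-pick a stream before each chunk based on the throughput it just measured. The selection rule, the `safety` margin, and the bandwidth numbers here are assumptions for illustration; real players use more elaborate heuristics.

```python
def choose_variant(variants, measured_bps, safety=0.8):
    """Pick the highest-bandwidth stream that fits the measured throughput.

    safety is an assumed headroom factor: only 80% of the measured
    throughput is budgeted, to absorb fluctuations.
    Falls back to the lowest stream if nothing fits.
    """
    budget = measured_bps * safety
    fitting = [v for v in variants if v <= budget]
    return max(fitting) if fitting else min(variants)


# Illustrative stream bandwidths in bits per second.
variants = [9_728_000, 4_928_000, 2_528_000, 1_728_000]

# Hypothetical throughput measured before each of four chunks.
measured = [12_000_000, 7_000_000, 2_500_000, 4_000_000]

# One decision per chunk: the stream changes only at chunk boundaries.
choices = [choose_variant(variants, bps) for bps in measured]
```

Because chunk start times line up across streams, each decision simply changes which stream the next chunk is downloaded from.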

The client knows where to source each bitrate stream because the video delivery starts by delivering a single manifest file that contains each available stream and its location. Here’s an example of the m3u8 format manifest file used for adaptive streaming. There’s a list of available bitrates and a location of each of their manifest files:

#EXTM3U

#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio-1",NAME="Audio",LANGUAGE="en",AUTOSELECT=YES,URI="audio_0_128000_hls.m3u8"

#EXT-X-STREAM-INF:BANDWIDTH=9728000,CODECS="avc1.64000d,mp4a.40.2",RESOLUTION=1920x1080,AUDIO="audio-1"

video_0_4800000_hls.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=4928000,CODECS="avc1.64000d,mp4a.40.2",RESOLUTION=1280x720,AUDIO="audio-1"

video_0_2400000_hls.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=2528000,CODECS="avc1.64000d,mp4a.40.2",RESOLUTION=854x480,AUDIO="audio-1"

video_0_1200000_hls.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=1728000,CODECS="avc1.64000d,mp4a.40.2",RESOLUTION=640x360,AUDIO="audio-1"

video_0_800000_hls.m3u8

Then if you were to open a single bitrate’s manifest file, you’d find the location of each chunk (this is how we route clients to the lowest latency data center for first segments vs lowest cost data center for subsequent segments -- more on that later):

#EXTM3U

#EXT-X-MEDIA-SEQUENCE:0

#EXT-X-TARGETDURATION:10

#EXTINF:10,

http://media.cloudflarestream.com/segment1.avc

#EXTINF:10,

http://media.cloudflarestream.com/segment2.avc

#EXTINF:10,

http://media.cloudflarestream.com/segment3.avc

#EXT-X-ENDLIST

Cloudflare uses two standards for adaptive streaming: HLS and MPEG-DASH. Both work in essentially the same way, with small differences. MPEG-DASH uses smaller chunk sizes by default (2-4 seconds versus 10 seconds), so switching between streams is faster. MPEG-DASH also has better support for ad insertion. HLS (Apple HTTP Live Streaming), on the other hand, is ideal for streaming to Apple products because it is natively supported by Apple devices and software.
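To show how a player might read the first HLS manifest shown earlier, here is a small sketch that pulls the bandwidth, resolution, and location of each stream out of the `#EXT-X-STREAM-INF` entries. It handles only the attributes that appear in that example; `parse_master` is a hypothetical helper, not a full HLS parser.

```python
import re

# KEY=value pairs, where a value is either a quoted string (which may
# contain commas, as CODECS does) or an unquoted token.
ATTR = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_master(text):
    """Return one dict per stream: bandwidth, resolution, and manifest URI."""
    variants = []
    pending = None
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith("#EXT-X-STREAM-INF:"):
            attr_text = line.split(":", 1)[1]
            pending = {k: v.strip('"') for k, v in ATTR.findall(attr_text)}
        elif pending is not None and not line.startswith("#"):
            # The first non-tag line after STREAM-INF is that stream's URI.
            variants.append({
                "bandwidth": int(pending["BANDWIDTH"]),
                "resolution": pending.get("RESOLUTION"),
                "uri": line,
            })
            pending = None
    return variants


sample = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=9728000,CODECS="avc1.64000d,mp4a.40.2",RESOLUTION=1920x1080,AUDIO="audio-1"
video_0_4800000_hls.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=4928000,CODECS="avc1.64000d,mp4a.40.2",RESOLUTION=1280x720,AUDIO="audio-1"
video_0_2400000_hls.m3u8
"""

variants = parse_master(sample)
```

Each entry in `variants` gives the player what it needs to pick a stream and fetch that stream's own manifest.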

Delivery: Where in the world

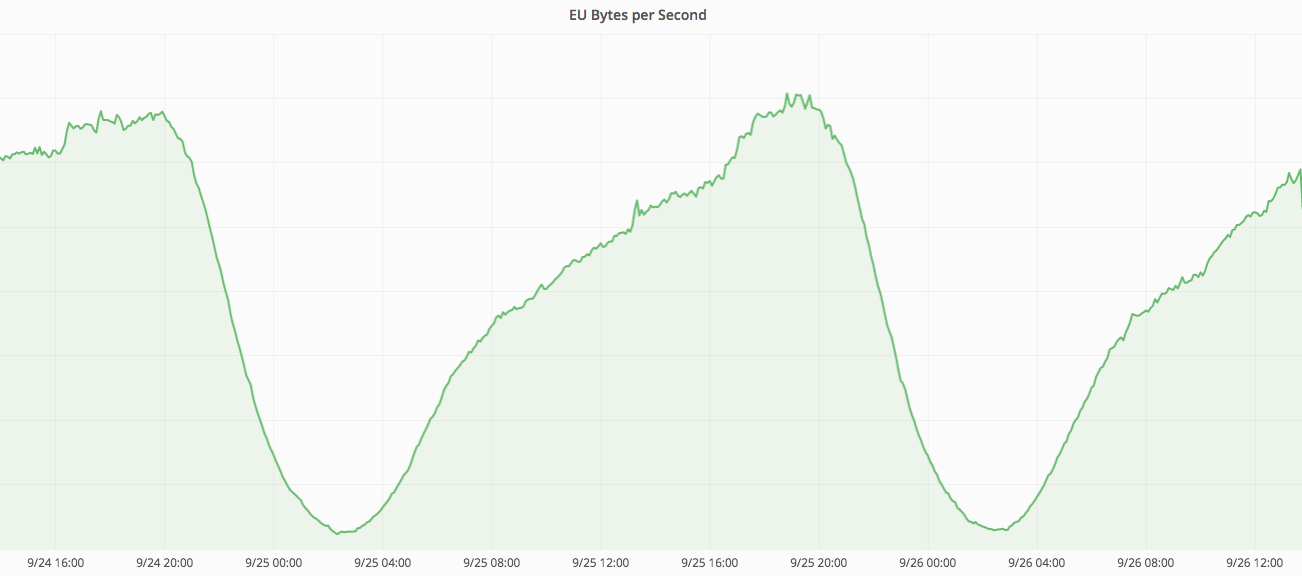

Cloudflare runs a global network of over 115 PoPs. In every one of our data centers we have a large number of servers connected to the Internet. Traffic to these data centers rises and falls depending on the time of day. Since each data center serves visitors in its region, traffic drops when it's the middle of the night and people in the region are asleep. You can see this in the bandwidth graphs for data centers around the world.

We are billed for bandwidth based on our peak usage in any 30-day period. That means that if we use more bandwidth during the troughs in any data center's day, we don't incur any additional cost. Similarly, what we pay for the servers that run in these data centers is a fixed cost. Every minute we're not fully utilizing a server, we are effectively wasting a resource we've already paid for.
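A quick bit of arithmetic shows why filling the troughs is free under peak-based billing. The traffic numbers are made up, and treating the bill as the simple maximum over the period is a simplification for illustration.

```python
def billable_peak(usage_gbps):
    """Under peak-based billing (simplified), only the highest reading counts."""
    return max(usage_gbps)


# Hypothetical traffic at one data center over 12 two-hour slots, in Gbps.
baseline = [2, 1, 1, 1, 2, 4, 6, 8, 9, 10, 10, 9]

# Extra video traffic steered into the quiet slots only.
extra_video = [7, 8, 8, 8, 7, 5, 3, 1, 0, 0, 0, 0]

combined = [b + e for b, e in zip(baseline, extra_video)]

peak_before = billable_peak(baseline)
peak_after = billable_peak(combined)

# Share of the already-paid-for peak capacity that is actually used.
utilization_before = sum(baseline) / (peak_before * len(baseline))
utilization_after = sum(combined) / (peak_after * len(combined))
```

In this example the billable peak stays at 10 Gbps while far more of the paid-for capacity is put to work.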

Video delivery, it turns out, is the perfect task to fill these underutilized resources. To be fast, only the first few bytes need to come from the data center closest to the viewer. The rest have a little buffer time to reach the client while the first few seconds of the video are being watched, so they can come from whatever data center currently has excess resources.

We accomplish this by dynamically assigning IP ranges to data centers based on which are currently underutilized. Videos are then loaded alongside a manifest file that points the player to the different chunks of the video for each bitrate. (As we described earlier, this is how adaptive streaming works: the player uses this manifest file to quickly locate different bitrate streams and switch between them as network conditions change.) That manifest file is dynamically generated to point to the closest data center, using Cloudflare’s default Anycast IP, for the first chunk, then to the underutilized data centers for subsequent chunks. The final result is that the first segment is fast and subsequent segments are low cost. This allows us to offer video at the best price, because let's face it, enabling video today is really expensive and unpredictable, and it doesn't have to be.
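Here is a minimal sketch of what generating such a manifest could look like. It is not Cloudflare's implementation: the host names, the utilization figures, and the `least_utilized` and `build_playlist` helpers are all hypothetical, and the sketch picks hosts by name rather than by assigning IP ranges.

```python
def least_utilized(utilization):
    """Return the host with the lowest current utilization (0.0 to 1.0)."""
    return min(utilization, key=utilization.get)


def build_playlist(segment_names, nearest_host, spare_host, target_duration=10):
    """Build an HLS media playlist whose first segment comes from the
    nearest host and whose remaining segments come from a spare host."""
    lines = [
        "#EXTM3U",
        "#EXT-X-MEDIA-SEQUENCE:0",
        f"#EXT-X-TARGETDURATION:{target_duration}",
    ]
    for index, name in enumerate(segment_names):
        host = nearest_host if index == 0 else spare_host
        lines.append(f"#EXTINF:{target_duration},")
        lines.append(f"http://{host}/{name}")
    lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines)


# Hypothetical data centers and how busy each one is right now.
utilization = {
    "dc-a.example.com": 0.9,
    "dc-b.example.com": 0.3,
    "dc-c.example.com": 0.6,
}

spare = least_utilized(utilization)
playlist = build_playlist(
    ["segment1.avc", "segment2.avc", "segment3.avc"],
    nearest_host="nearest.example.com",   # stands in for the Anycast address
    spare_host=spare,
)
```

Regenerating the playlist as utilization changes is what lets later segments follow the spare capacity around the network.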

It’s a bird! It’s a plane! It’s a Cloudflare App!

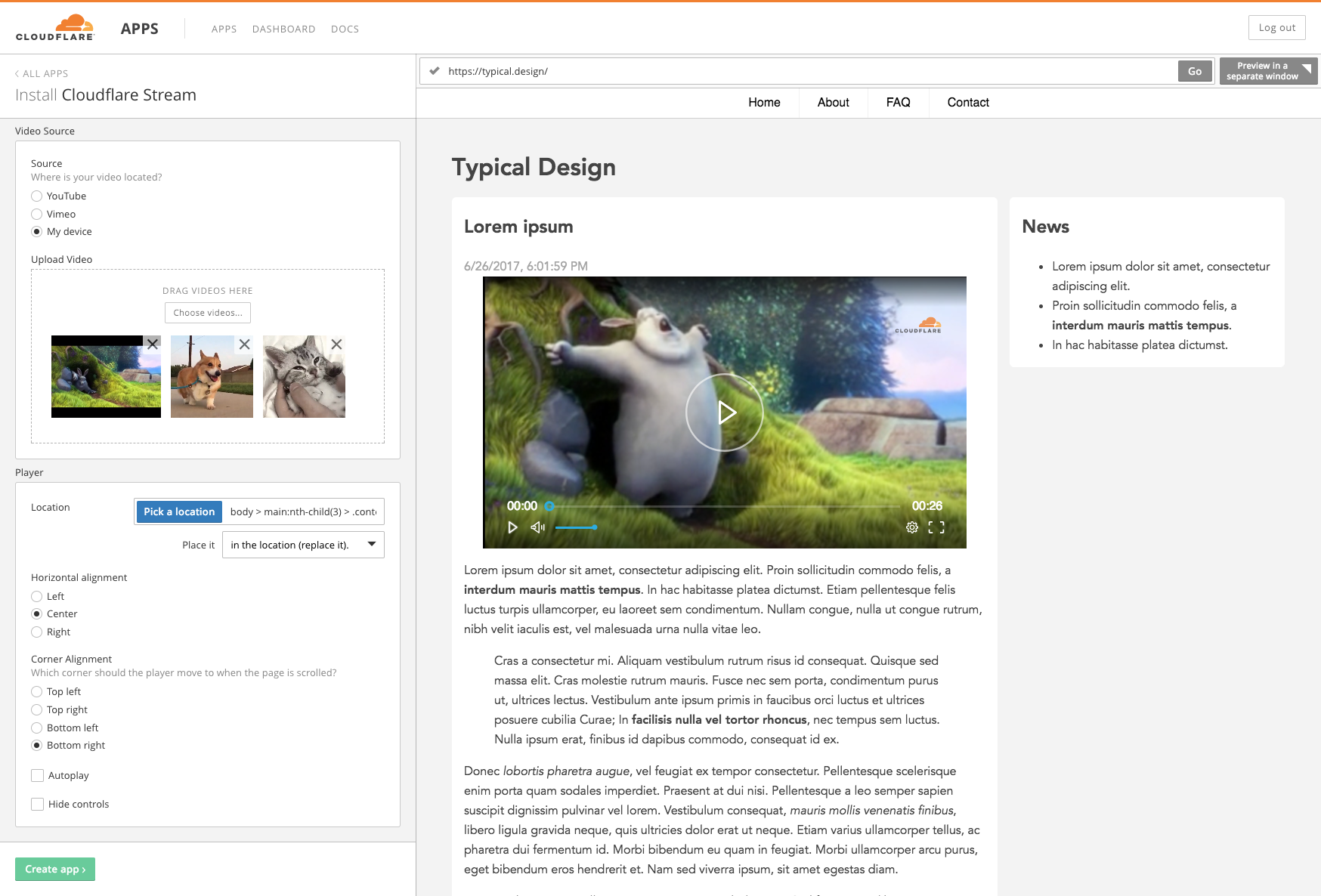

Three months ago we launched the next generation of Cloudflare Apps, an open platform that lets developers reach over 6 million customers. The Apps platform allows developers to build tools that use all of Cloudflare, which is why we’ve built the Stream app:

Developers will soon be able to add video uploaders to their apps, making the Stream API accessible to all non-technical users.

We’re Taking Advance Sign Ups

It’s 10 years after Opera demoed the first implementation of the <video> element in 2007 and we’re excited about making video streaming easy, instant and cost effective. We’re going to open up a beta soon and we’re taking advance sign ups in the meantime. You can reserve your spot here.

If bringing instant streaming to 7M applications sounds like a fun challenge for you, we're growing a video team in Austin, and we want to meet you.