How Does Secondary DNS Work?

If you already understand how Secondary DNS works, please feel free to skip this section. It does not provide any Cloudflare-specific information.

Secondary DNS has many use cases across the Internet; however, traditionally, it was used as a synchronized backup for when the primary DNS server was unable to respond to queries. A more modern approach involves focusing on redundancy across many different nameservers, which in many cases broadcast the same anycasted IP address.

Secondary DNS involves the unidirectional transfer of DNS zones from the primary to the Secondary DNS server(s). One primary can have any number of Secondary DNS servers that it must communicate with in order to keep track of any zone updates. A zone update is considered a change in the contents of a zone, which ultimately leads to a Start of Authority (SOA) serial number increase. The zone’s SOA serial is one of the key elements of Secondary DNS; it is how primary and secondary servers synchronize zones. Below is an example of what an SOA record might look like during a dig query.

example.com 3600 IN SOA ashley.ns.cloudflare.com. dns.cloudflare.com.

2034097105 // Serial

10000 // Refresh

2400 // Retry

604800 // Expire

3600 // Minimum TTL

Each of the numbers is used in the following way:

Serial - Used to keep track of the status of the zone, must be incremented at every change.

Refresh - The maximum number of seconds that can elapse before a Secondary DNS server must check for a SOA serial change.

Retry - The maximum number of seconds that can elapse before a Secondary DNS server must check for a SOA serial change, after previously failing to contact the primary.

Expire - The maximum number of seconds that a Secondary DNS server can serve stale information, in the event the primary cannot be contacted.

Minimum TTL - Per RFC 2308, the number of seconds that a DNS negative response should be cached for.

Using the above information, the Secondary DNS server stores an SOA record for each of the zones it is tracking. When the serial increases, it knows that the zone must have changed, and that a zone transfer must be initiated.
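As a rough illustration of the serial check described above, here is a minimal Python sketch. The `SOA` class and the helper names are hypothetical, not part of any DNS library. The comparison uses the wrap-around serial arithmetic of RFC 1982, which the text above does not mention but which is how 32-bit SOA serials are normally compared.

```python
from dataclasses import dataclass

SERIAL_MODULUS = 2 ** 32  # SOA serials are unsigned 32-bit numbers
HALF_RANGE = 2 ** 31


@dataclass(frozen=True)
class SOA:
    """The five numeric SOA fields (hypothetical container for this sketch)."""
    serial: int
    refresh: int
    retry: int
    expire: int
    minimum: int


def parse_soa_numbers(line: str) -> SOA:
    """Take the last five whitespace-separated fields of an SOA line."""
    return SOA(*(int(field) for field in line.split()[-5:]))


def serial_is_newer(candidate: int, current: int) -> bool:
    """RFC 1982 comparison: True if candidate is ahead of current, allowing wrap-around."""
    diff = (candidate - current) % SERIAL_MODULUS
    return 0 < diff < HALF_RANGE


def needs_transfer(stored: SOA, primary: SOA) -> bool:
    """A zone transfer is only worthwhile when the primary's serial has moved forward."""
    return serial_is_newer(primary.serial, stored.serial)
```

With the example record above, a primary serial of 2034097106 would trigger a transfer for a secondary holding 2034097105, while an equal or older serial would not.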

Serial Tracking

Serial increases can be detected in the following ways:

The fastest way for a Secondary DNS server to learn of a serial change is for the primary server to NOTIFY it whenever a zone changes, using the mechanism specified in RFC 1996. The Secondary DNS server can then initiate a zone transfer immediately.

Another way is for the Secondary DNS server to simply poll the primary every “Refresh” seconds. This isn’t as fast as the NOTIFY approach, but it is a good fallback in case the notifies have failed.
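The polling fallback can be sketched as a small timer object driven by the Refresh, Retry and Expire values from the SOA record. This is a simplified Python illustration; the `ZoneTimers` class and its method names are assumptions made for the example, not taken from any real server.

```python
from dataclasses import dataclass


@dataclass
class ZoneTimers:
    refresh: int  # seconds between routine serial checks
    retry: int    # seconds between checks after a failed attempt
    expire: int   # seconds after which unrefreshed data must no longer be served
    last_success: float = 0.0  # time of the last successful contact with the primary
    last_attempt: float = 0.0  # time of the last check, successful or not
    last_failed: bool = False

    def record_check(self, now: float, ok: bool) -> None:
        """Record the outcome of one SOA check against the primary."""
        self.last_attempt = now
        self.last_failed = not ok
        if ok:
            self.last_success = now

    def next_check_at(self) -> float:
        """Use Retry after a failure, Refresh otherwise."""
        interval = self.retry if self.last_failed else self.refresh
        return self.last_attempt + interval

    def is_expired(self, now: float) -> bool:
        """True once the primary has been unreachable for longer than Expire."""
        return now - self.last_success > self.expire
```

Using the values from the example SOA, a successful check at time 0 schedules the next one at 10000 seconds, and a failure at that point schedules a retry 2400 seconds later.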

One of the issues with the basic NOTIFY protocol is that anyone on the Internet could potentially notify the Secondary DNS server of a zone update. If an initial SOA query is not performed by the Secondary DNS server before initiating a zone transfer, this is an easy way to perform an amplification attack. There are two common ways to prevent anyone on the Internet from being able to NOTIFY Secondary DNS servers:

Using transaction signatures (TSIG) as per RFC 2845. These are to be placed as the last record in the extra records section of the DNS message. Usually the number of extra records (or ARCOUNT) should be no more than two in this case.

Using IP based access control lists (ACL). This increases security but also prevents flexibility in server location and IP address allocation.
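The ACL option can be illustrated with Python's standard `ipaddress` module. This is only a sketch: the function names are hypothetical, and the networks used are documentation ranges standing in for a primary's real addresses.

```python
import ipaddress


def build_acl(cidrs):
    """Turn a list of CIDR strings into network objects."""
    return [ipaddress.ip_network(cidr) for cidr in cidrs]


def notify_allowed(source_ip: str, acl) -> bool:
    """Accept a NOTIFY only if its source address falls inside an allowed network."""
    addr = ipaddress.ip_address(source_ip)
    # Membership is always False when the address and network IP versions differ.
    return any(addr in network for network in acl)


# Example ACL using documentation address ranges (placeholders, not real primaries).
acl = build_acl(["192.0.2.0/24", "2001:db8::/32"])
```

A check like this is only as trustworthy as the source address itself, which is why it is usually combined with TSIG or with an SOA query before any transfer is started.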

Generally, NOTIFY messages are sent over UDP; however, TCP can be used if the primary server has reason to believe that it is necessary (e.g. because of firewall issues).

Zone Transfers

In addition to serial tracking, it is important to ensure that a standard protocol is used between primary and Secondary DNS server(s), to efficiently transfer the zone. DNS zone transfer protocols do not by themselves provide confidentiality, integrity, or authentication; however, the use of TSIG on top of the basic zone transfer protocols can provide integrity and authentication. As a result of DNS being a public protocol, confidentiality during the zone transfer process is generally not a concern.

Authoritative Zone Transfer (AXFR)

AXFR is the original zone transfer protocol that was specified in RFC 1034 and RFC 1035 and later further explained in RFC 5936. AXFR is done over a TCP connection because a reliable protocol is needed to ensure packets are not lost during the transfer. Using this protocol, the primary DNS server will transfer all of the zone contents to the Secondary DNS server, in one connection, regardless of the serial number. AXFR is recommended to be used for the first zone transfer, when none of the records are propagated, and IXFR is recommended after that.

Incremental Zone Transfer (IXFR)

IXFR is the more sophisticated zone transfer protocol that was specified in RFC 1995. Unlike the AXFR protocol, during an IXFR, the primary server will only send the secondary server the records that have changed since its current version of the zone (based on the serial number). This means that when a Secondary DNS server wants to initiate an IXFR, it sends its current serial number to the primary DNS server. The primary DNS server will then format its response based on previous versions of changes made to the zone. IXFR messages must obey the following pattern:

1. Current latest SOA

2. Secondary server current SOA

3. DNS record deletions

4. Secondary server current SOA + changes

5. DNS record additions

6. Current latest SOA

Steps 2 through 5 can be repeated any number of times, as each repetition represents one change set of deletions and additions, ultimately leading to a new serial. The final SOA in step 6 appears once, at the very end of the response.
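To make the pattern concrete, here is a hedged Python sketch that splits an IXFR answer into change sets and applies them to a zone. Records are modelled as simple `(name, type, rdata)` tuples, with the serial standing in for the full SOA rdata. That representation and the function names are assumptions for illustration, not a real wire-format parser.

```python
def parse_ixfr(records):
    """Split an IXFR answer into (latest_serial, [(old, new, deletions, additions), ...])."""
    if not records or records[0][1] != "SOA":
        raise ValueError("IXFR answer must start with an SOA record")
    latest = records[0][2]
    if len(records) == 1:
        return latest, []  # a lone SOA: nothing to apply
    if records[-1][1] != "SOA" or records[-1][2] != latest:
        raise ValueError("IXFR answer must end with the latest SOA")

    body = records[1:-1]
    change_sets = []
    i = 0
    while i < len(body):
        if body[i][1] != "SOA":
            raise ValueError("expected the SOA that starts a change set")
        old_serial = body[i][2]
        i += 1
        deletions = []
        while i < len(body) and body[i][1] != "SOA":
            deletions.append(body[i])
            i += 1
        if i >= len(body):
            raise ValueError("change set is missing its second SOA")
        new_serial = body[i][2]
        i += 1
        additions = []
        while i < len(body) and body[i][1] != "SOA":
            additions.append(body[i])
            i += 1
        change_sets.append((old_serial, new_serial, deletions, additions))
    return latest, change_sets


def apply_ixfr(zone, current_serial, records):
    """Apply every change set in order and return (new_zone, new_serial)."""
    latest, change_sets = parse_ixfr(records)
    updated, serial = set(zone), current_serial
    for old_serial, new_serial, deletions, additions in change_sets:
        if old_serial != serial:
            raise ValueError("change set does not start from our current serial")
        updated.difference_update(deletions)
        updated.update(additions)
        serial = new_serial
    if serial != latest:
        raise ValueError("change sets did not reach the latest serial")
    return updated, serial
```

A secondary that hits any of these errors would typically discard the partial result and fall back to a full AXFR.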

IXFR can be done over UDP or TCP, but again TCP is generally recommended to avoid packet loss.

How Does Secondary DNS Work at Cloudflare?

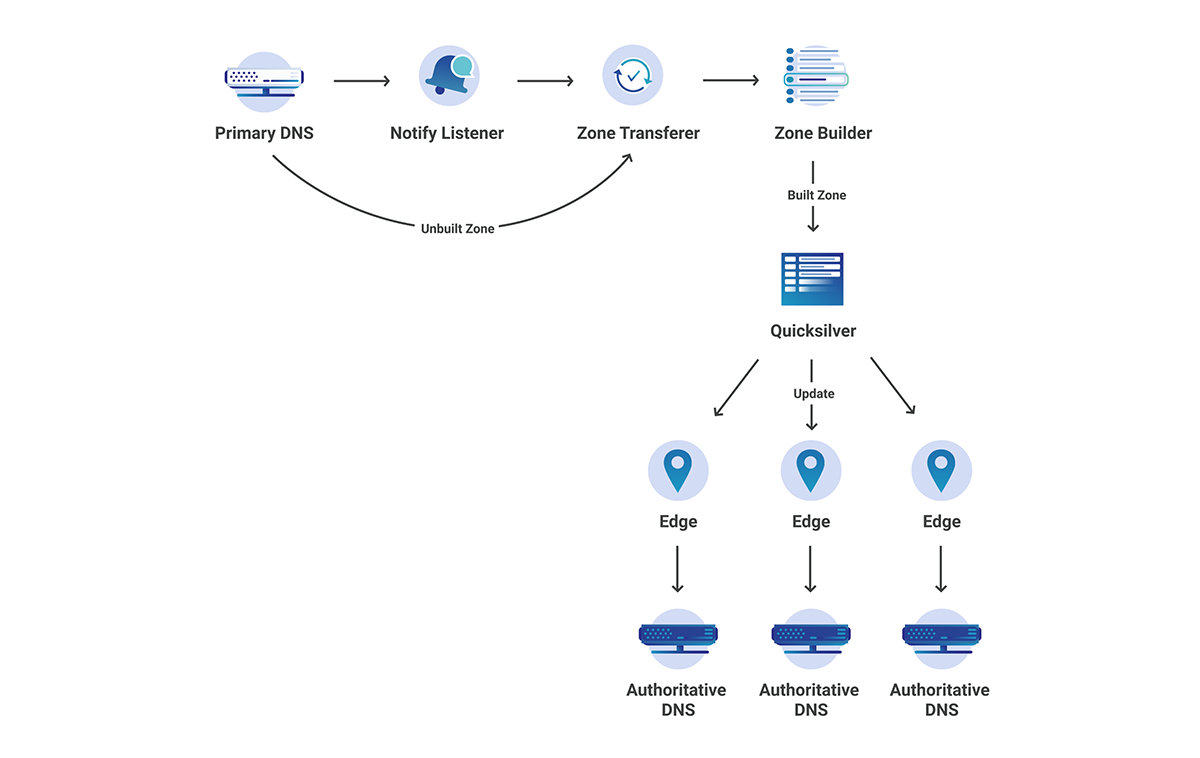

The DNS team loves microservice architecture! When we initially implemented Secondary DNS at Cloudflare, it was done using Mesos Marathon. This allowed us to separate each of our services into several different Marathon apps, individually scaling apps as needed. All of these services live in our core data centers. The following services were created:

Zone Transferer - responsible for attempting IXFR, followed by AXFR if IXFR fails.

Zone Transfer Scheduler - responsible for periodically checking zone SOA serials for changes.

Rest API - responsible for registering new zones and primary nameservers.

In addition to the Marathon apps, we also had an app external to the cluster:

Notify Listener - responsible for listening for notifies from primary servers and telling the Zone Transferer to initiate an AXFR/IXFR.

Each of these microservices communicates with the others through Kafka.

Figure 1: Secondary DNS Microservice Architecture

Once the Zone Transferer completes the AXFR/IXFR, it passes the zone through to our Zone Builder, and the built zone is finally pushed out to our edge at each of our 200 locations.

Although this architecture worked great in the beginning, it left us open to many vulnerabilities and scalability issues down the road. As our Secondary DNS product became more popular, it was important that we proactively scaled and reduced the technical debt as much as possible. As with many companies in the industry, Cloudflare has recently migrated all of our core data center services to Kubernetes, moving away from individually managed apps and Marathon clusters.

What this meant for Secondary DNS is that all of our Marathon-based services, as well as our NOTIFY Listener, had to be migrated to Kubernetes. Although this long migration ended up paying off, many difficult challenges arose along the way that required us to come up with unique solutions in order to have a seamless, zero downtime migration.

Challenges When Migrating to Kubernetes

Although the entire DNS team agreed that Kubernetes was the way forward for Secondary DNS, it also introduced several challenges. These challenges arose from a need to properly scale up across many distributed locations while also protecting each of our individual data centers. Since our core does not rely on anycast to automatically distribute requests, adding more customers opens us up to denial-of-service attacks.

The two main issues we ran into during the migration were:

How do we create a distributed and reliable system that makes use of Kubernetes principles while also making sure our customers know which IPs we will be communicating from?

When opening up a public-facing UDP socket to the Internet, how do we protect ourselves while also preventing unnecessary spam towards primary nameservers?

Issue 1:

As was previously mentioned, one form of protection in the Secondary DNS protocol is to only allow certain IPs to initiate zone transfers. There is a fine line between primary servers allowlisting too many IPs and them having to frequently update their IP ACLs. We considered several solutions:

Altering Network Address Translation (NAT) entries

Do not use k8s for zone transfers

Allowlist all Cloudflare IPs and dynamically update

Proxy egress traffic

Ultimately we decided to proxy our egress traffic from k8s, to the DNS primary servers, using static proxy addresses. Shadowsocks-libev was chosen as the SOCKS5 implementation because it is fast, secure and known to scale. In addition, it can handle both UDP/TCP and IPv4/IPv6.

Figure 2: Shadowsocks proxy Setup

The partnership of k8s and Shadowsocks combined with a large enough IP range brings many benefits:

Horizontal scaling

Efficient load balancing

Primary server ACLs only need to be updated once

It allows us to make use of Kubernetes for both the Zone Transferer and the local Shadowsocks proxy.

Shadowsocks proxy can be reused by many different Cloudflare services.

Issue 2:

The Notify Listener requires listening on static IPs for NOTIFY messages coming from primary DNS servers. This is mostly a solved problem through the use of k8s services of type LoadBalancer; however, exposing this service directly to the Internet makes us uneasy because of its susceptibility to attacks. Fortunately, DDoS protection is one of Cloudflare's strengths, which led us to the natural solution of dogfooding one of our own products, Spectrum.

Spectrum provides the following features to our service:

Reverse proxy TCP/UDP traffic

Filter out malicious traffic

Optimal routing from edge to core data centers

Dual Stack technology

Figure 3: Spectrum interaction with Notify Listener

Figure 3 shows two interesting attributes of the system:

Spectrum <-> k8s IPv4 only:

This is because our custom k8s load balancer currently only supports IPv4; however, Spectrum has no issue terminating the IPv6 connection and establishing a new IPv4 connection.

Spectrum <-> k8s routing decisions based on L4 protocol:

This is because k8s only supports one of TCP/UDP/SCTP per service of type LoadBalancer. Once again, Spectrum has no issues proxying this correctly.

One of the problems with using a L4 proxy in between services is that source IP addresses get changed to the source IP address of the proxy (Spectrum in this case). Not knowing the source IP address means we have no idea who sent the NOTIFY message, opening us up to attack vectors. Fortunately, Spectrum’s proxy protocol feature is capable of adding custom headers to TCP/UDP packets which contain source IP/Port information.
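The text does not say which header format is involved, so the following is only an illustration of the general idea. It assumes the human-readable PROXY protocol version 1 header used for TCP (`PROXY TCP4 <src> <dst> <sport> <dport>\r\n`), and the function name is hypothetical. The format actually used for UDP traffic may differ.

```python
import ipaddress


def parse_proxy_v1(data: bytes):
    """Return ((source_ip, source_port), payload) from data prefixed with a PROXY v1 header."""
    header, separator, payload = data.partition(b"\r\n")
    if not separator or not header.startswith(b"PROXY "):
        raise ValueError("missing PROXY protocol v1 header")
    parts = header.decode("ascii").split(" ")
    if len(parts) != 6 or parts[1] not in ("TCP4", "TCP6"):
        raise ValueError("unsupported PROXY protocol v1 header")
    _, _, src_ip, _dst_ip, src_port, _dst_port = parts
    ipaddress.ip_address(src_ip)  # raises ValueError if the address is malformed
    return (src_ip, int(src_port)), payload
```

Once the original source address has been recovered this way, it can be checked against the primary's known addresses just as if the message had arrived directly.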

As we are using miekg/dns for our Notify Listener, adding proxy headers to the DNS NOTIFY messages would cause failures in validation at the DNS server level. Instead, we implemented custom read and write decorators that do the following:

Reader: Extract source address information on inbound NOTIFY messages. Place extracted information into new DNS records located in the additional section of the message.

Writer: Remove additional records from the DNS message on outbound NOTIFY replies. Generate a new reply using proxy protocol headers.

There is no way to spoof these records, because the server only permits two extra records, one of which is the optional TSIG. Any other records will be overwritten.

Figure 4: Proxying Records Between Notifier and Spectrum

This custom decorator approach abstracts the proxying away from the Notify Listener through the use of the DNS protocol.

Although knowing the source IP lets us block a significant amount of bad traffic, NOTIFY messages can be sent over UDP as well as TCP, and UDP is prone to IP spoofing. To ensure that the primary servers do not get spammed, we have made the following additions to the Zone Transferer:

Always ensure that the SOA has actually been updated before initiating a zone transfer.

Only allow at most one working transfer and one scheduled transfer per zone.

Additional Technical Challenges

Zone Transferer Scheduling

As shown in figure 1, there are several ways of sending Kafka messages to the Zone Transferer in order to initiate a zone transfer. There is no benefit in having a large backlog of zone transfers for the same zone. Once a zone has been transferred, assuming no more changes, it does not need to be transferred again. This means that we should only have at most one transfer ongoing, and one scheduled transfer at the same time, for any zone.
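The "at most one ongoing and one scheduled transfer per zone" rule can be sketched as a small in-memory gate. This is a simplified Python illustration with hypothetical names (`TransferGate`, `request`, `finish`). It ignores persistence and concurrency, which a real service would have to handle.

```python
class TransferGate:
    """Allow at most one running and one scheduled transfer per zone."""

    def __init__(self):
        self._running = set()
        self._scheduled = set()

    def request(self, zone: str) -> str:
        """Handle a transfer request; returns 'start', 'scheduled' or 'ignored'."""
        if zone not in self._running:
            self._running.add(zone)
            return "start"
        if zone not in self._scheduled:
            self._scheduled.add(zone)
            return "scheduled"
        return "ignored"  # one transfer is running and one is already waiting

    def finish(self, zone: str) -> bool:
        """Mark the running transfer done; True means the scheduled one should start now."""
        self._running.discard(zone)
        if zone in self._scheduled:
            self._scheduled.remove(zone)
            self._running.add(zone)
            return True
        return False
```

One scheduled slot is enough because a transfer that starts after the running one finishes will pick up every change made in the meantime.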

If we want to limit our number of scheduled messages to one per zone, this involves ignoring Kafka messages that get sent to the Zone Transferer. This is not as simple as ignoring specific messages in any random order. One of the benefits of Kafka is that it holds on to messages until the user actually decides to acknowledge them, by committing that message's offset. Since Kafka is just a queue of messages, it has no concept of order other than first in first out (FIFO). If a user is capable of reading from the Kafka topic concurrently, it is entirely possible that a message in the middle of the queue will be committed before a message at the end of the queue.

Most of the time this isn’t an issue, because we know that one of the concurrent readers has read the message from the end of the queue and is processing it. There is one Kubernetes-related catch to this issue, though: pods are ephemeral. The kube master doesn’t care what your concurrent reader is doing, it will kill the pod and it’s up to your application to handle it.

Consider the following problem:

Figure 5: Kafka Partition

Read offset 1. Start transferring zone 1.

Read offset 2. Start transferring zone 2.

Zone 2 transfer finishes. Commit offset 2, which implicitly marks offset 1 as committed too.

Restart pod.

Read offset 3. Start transferring zone 3.

If these events happen, zone 1 will never be transferred. It is important that zones stay up to date with the primary servers, otherwise stale data will be served from the Secondary DNS server. The solution to this problem involves the use of a list to track which messages have been read and completely processed. In this case, when a zone transfer has finished, it does not necessarily mean that the Kafka message should be immediately committed. The solution is as follows:

Keep a list of Kafka messages, sorted based on offset.

If finished transfer, remove from list:

If the message is the oldest in the list, commit the message's offset.

Figure 6: Kafka Algorithm to Solve Message Loss

This solution is essentially soft committing Kafka messages, until we can confidently say that all other messages have been acknowledged. It’s important to note that this only truly works in a distributed manner if the Kafka messages are keyed by zone id, which ensures that the same zone will always be processed by the same Kafka consumer.
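The soft-commit idea can be sketched without any Kafka client at all. The Python class below is a hypothetical illustration, not the production code. It tracks offsets in the order they were read and only reports an offset as committable once every older message has finished. As a small variation on the steps above, it reports the newest offset in the finished run at the front of the list, so one commit can cover several completed transfers.

```python
class OffsetTracker:
    """Track read-but-unfinished offsets for one partition and decide when to commit."""

    def __init__(self):
        # offset -> finished flag. Dicts keep insertion order, and a partition is
        # read in offset order, so the first key is always the oldest offset.
        self._finished = {}

    def start(self, offset: int) -> None:
        """Call when a message has been read and its transfer has started."""
        self._finished[offset] = False

    def finish(self, offset: int):
        """Call when a transfer completes; returns an offset to commit, or None."""
        if offset not in self._finished:
            raise KeyError(f"offset {offset} was never started")
        self._finished[offset] = True
        committable = None
        while self._finished:
            oldest = next(iter(self._finished))
            if not self._finished[oldest]:
                break  # an older message is still in flight, so hold the commit
            del self._finished[oldest]
            committable = oldest
        return committable
```

Replaying the problem scenario: finishing offset 2 while offset 1 is still in flight returns `None`, so nothing is committed and a restarted pod will read offset 1 again. Once offset 1 finishes, offset 2 becomes committable.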

Life of a Secondary DNS Request

Although Cloudflare has a large global network, as shown above, the zone transferring process does not take place at each of the edge datacenter locations (which would surely overwhelm many primary servers), but rather in our core data centers. In this case, how do we propagate to our edge in seconds? After transferring the zone, there are a couple more steps that need to be taken before the change can be seen at the edge.

Zone Builder - This interacts with the Zone Transferer to build the zone according to what Cloudflare edge understands. This then writes to Quicksilver, our super fast, distributed KV store.

Authoritative Server - This reads from Quicksilver and serves the built zone.

Figure 7: End to End Secondary DNS

What About Performance?

At the time of writing this post, according to dnsperf.com, Cloudflare leads in global performance for both Authoritative and Resolver DNS. Secondary DNS falls under the authoritative DNS category. Let’s break down the performance of each of the different parts of the Secondary DNS pipeline, from the primary server updating its records, to them being present at the Cloudflare edge.

1. Primary Server to Notify Listener - Our most accurate measurement is only precise to the second, but we know UDP/TCP communication is likely much faster than that.

2. NOTIFY to Zone Transferer - This is negligible.

3. Zone Transferer to Primary Server - 99% of the time we see ~800ms as the average latency for a zone transfer.

Figure 8: Zone XFR latency

4. Zone Transferer to Zone Builder - 99% of the time we see ~10ms to build a zone.

Figure 9: Zone Build time

5. Zone Builder to Quicksilver edge: 95% of the time we see less than 1s propagation.

Figure 10: Quicksilver propagation time

End to End latency: less than 5 seconds on average. Although we have several external probes running around the world to test propagation latencies, they lack precision due to their sleep intervals, location, provider and number of zones that need to run. The actual propagation latency is likely much lower than what is shown in figure 10. Each of the different colored dots is a separate data center location around the world.

Figure 11: End to End Latency

An additional test was performed manually to get a real-world estimate. The test had the following attributes:

Primary server: NS1

Number of records changed: 1

Start test timer event: Change record on NS1

Stop test timer event: Observe record change at Cloudflare edge using dig

Recorded timer value: 6 seconds

Conclusion

Cloudflare serves 15.8 trillion DNS queries per month, operating within 100ms of 99% of the Internet-connected population. The goal of Cloudflare-operated Secondary DNS is to allow our customers with custom DNS solutions, be it on-premise or some other DNS provider, to take advantage of Cloudflare's DNS performance and, more recently, through Secondary Override, our proxying and security capabilities too. Secondary DNS is currently available on the Enterprise plan. If you’d like to take advantage of it, please let your account team know. For additional documentation on Secondary DNS, please refer to our support article.