During last year’s Birthday Week we announced preliminary support for QUIC and HTTP/3 (or “HTTP over QUIC” as it was known back then), the new standard for the web, enabling faster, more reliable, and more secure connections to web endpoints like websites and APIs. We also let our customers join a waiting list to try QUIC and HTTP/3 as soon as they became available.

Since then, we’ve been working with industry peers through the Internet Engineering Task Force, including Google Chrome and Mozilla Firefox, to iterate on the HTTP/3 and QUIC standards documents. In parallel with the standards maturing, we’ve also worked on improving support on our network.

We are now happy to announce that QUIC and HTTP/3 support is available on the Cloudflare edge network. We’re excited to be joined in this announcement by Google Chrome and Mozilla Firefox, two of the leading browser vendors and partners in our effort to make the web faster and more reliable for all.

In the words of Ryan Hamilton, Staff Software Engineer at Google, “HTTP/3 should make the web better for everyone. The Chrome and Cloudflare teams have worked together closely to bring HTTP/3 and QUIC from nascent standards to widely adopted technologies for improving the web. Strong partnership between industry leaders is what makes Internet standards innovations possible, and we look forward to our continued work together.”

What does this mean for you, a Cloudflare customer who uses our services and edge network to make your web presence faster and more secure? Once HTTP/3 support is enabled for your domain in the Cloudflare dashboard, your customers can interact with your websites and APIs using HTTP/3. We’ve been steadily inviting customers on our HTTP/3 waiting list to turn on the feature (so keep an eye out for an email from us), and in the coming weeks we’ll make the feature available to everyone.

What does this announcement mean if you’re a user of the Internet interacting with sites and APIs through a browser and other clients? Starting today, you can use Chrome Canary to interact with Cloudflare and other servers over HTTP/3. For those of you looking for a command line client, curl also provides support for HTTP/3. Instructions for using Chrome and curl with HTTP/3 follow later in this post.

The Chicken and the Egg

Standards innovation on the Internet has historically been difficult because of a chicken and egg problem: which needs to come first, server support (like Cloudflare, or other large sources of response data) or client support (like browsers, operating systems, etc.)? Both sides of a connection need to support a new communications protocol for it to be of any use at all.

Cloudflare has a long history of driving web standards forward, from HTTP/2 (the version of HTTP preceding HTTP/3), to TLS 1.3, to things like encrypted SNI. We’ve pushed standards forward by partnering with like-minded organizations who share in our desire to help build a better Internet. Our efforts to move HTTP/3 into the mainstream are no different.

Throughout the HTTP/3 standards development process, we’ve been working closely with industry partners to build and validate client HTTP/3 support compatible with our edge support. We’re thrilled to be joined by Google Chrome and curl, both of which can be used today to make requests to the Cloudflare edge over HTTP/3. Mozilla Firefox expects to ship support in a nightly release soon as well.

Bringing this all together: today is a good day for Internet users; widespread rollout of HTTP/3 will mean a faster web experience for all, and today’s support is a large step toward that.

More importantly, today is a good day for the Internet: Chrome, curl, and Cloudflare (and soon, Mozilla) rolling out experimental but functional support for HTTP/3 in quick succession shows that the Internet standards creation process works. Coordinated by the Internet Engineering Task Force, industry partners, competitors, and other key stakeholders can come together to craft standards that benefit the entire Internet, not just the behemoths.

Eric Rescorla, CTO of Firefox, summed it up nicely: “Developing a new network protocol is hard, and getting it right requires everyone to work together. Over the past few years, we've been working with Cloudflare and other industry partners to test TLS 1.3 and now HTTP/3 and QUIC. Cloudflare's early server-side support for these protocols has helped us work the interoperability kinks out of our client-side Firefox implementation. We look forward to advancing the security and performance of the Internet together.”

How did we get here?

Before we dive deeper into HTTP/3, let’s have a quick look at the evolution of HTTP over the years in order to better understand why HTTP/3 is needed.

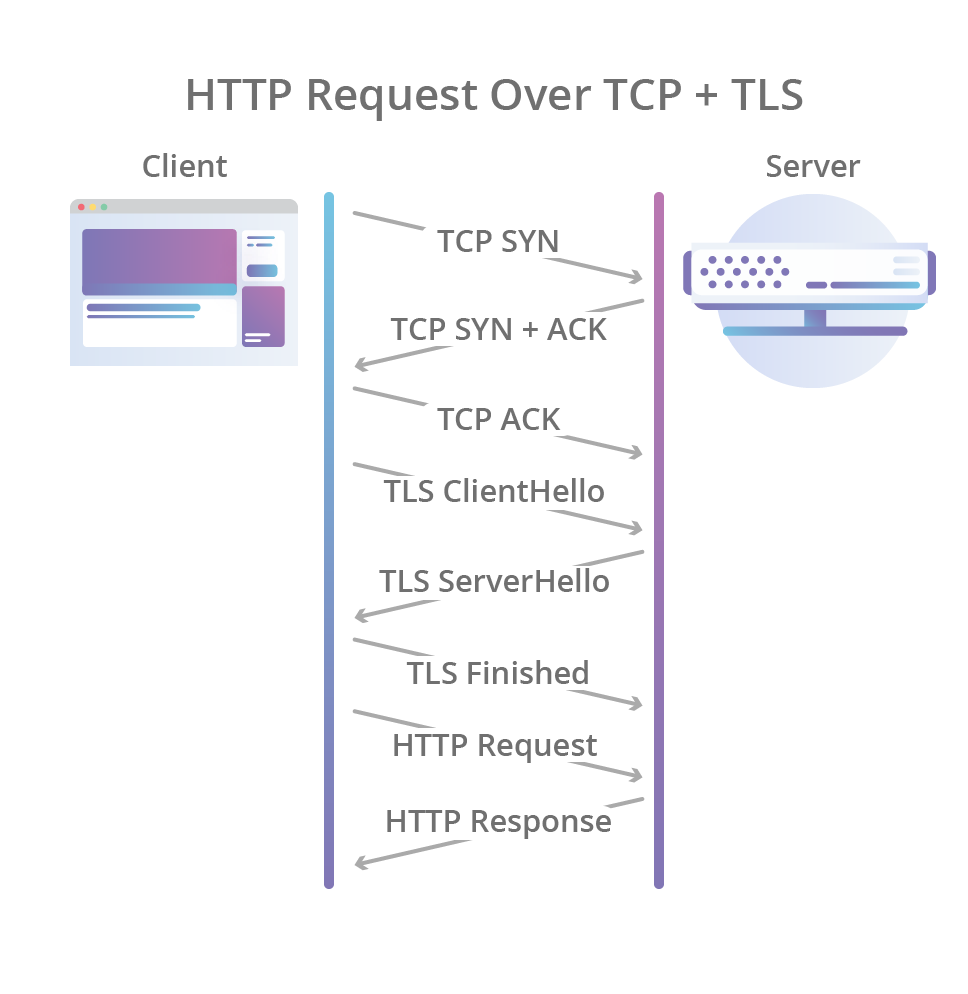

It all started back in 1996 with the publication of the HTTP/1.0 specification which defined the basic HTTP textual wire format as we know it today (for the purposes of this post I’m pretending HTTP/0.9 never existed). In HTTP/1.0 a new TCP connection is created for each request/response exchange between clients and servers, meaning that all requests incur a latency penalty as the TCP and TLS handshakes are completed before each request.

Worse still, rather than sending all outstanding data as fast as possible once the connection is established, TCP enforces a warm-up period called “slow start”, which allows the TCP congestion control algorithm to determine the amount of data that can be in flight at any given moment before congestion on the network path occurs, and avoid flooding the network with packets it can’t handle. But because new connections have to go through the slow start process, they can’t use all of the network bandwidth available immediately.
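To get a feel for the cost of slow start, here is a minimal sketch that counts how many round trips an idealized sender needs to push a response of a given size. The segment size of 1460 bytes, the initial window of 10 segments, and the "double every round trip, no loss" behavior are simplifying assumptions for illustration, not a model of any particular TCP stack.

```python
def round_trips_to_send(total_bytes, mss=1460, initial_window=10):
    """Count round trips needed to send total_bytes under idealized slow start.

    Assumptions (not real TCP): no packet loss, no receiver window limit,
    and a congestion window that doubles on every round trip.
    """
    cwnd = initial_window               # congestion window, in segments
    remaining = -(-total_bytes // mss)  # ceiling division: segments left to send
    rtts = 0
    while remaining > 0:
        remaining -= cwnd               # send one full window this round trip
        cwnd *= 2                       # window doubles for the next round trip
        rtts += 1
    return rtts


small = round_trips_to_send(14_600)      # fits in the initial window
medium = round_trips_to_send(100_000)
large = round_trips_to_send(1_000_000)
```

Under these assumptions a fresh connection needs several round trips to deliver a large response, no matter how much bandwidth the path actually has, and every new connection pays that cost again.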

The HTTP/1.1 revision of the HTTP specification tried to solve these problems a few years later by introducing the concept of “keep-alive” connections, which allow clients to reuse TCP connections and thus amortize the cost of the initial connection establishment and slow start across multiple requests. But this was no silver bullet: while multiple requests could share the same connection, they still had to be serialized one after the other, so a client and server could only execute a single request/response exchange at any given time for each connection.

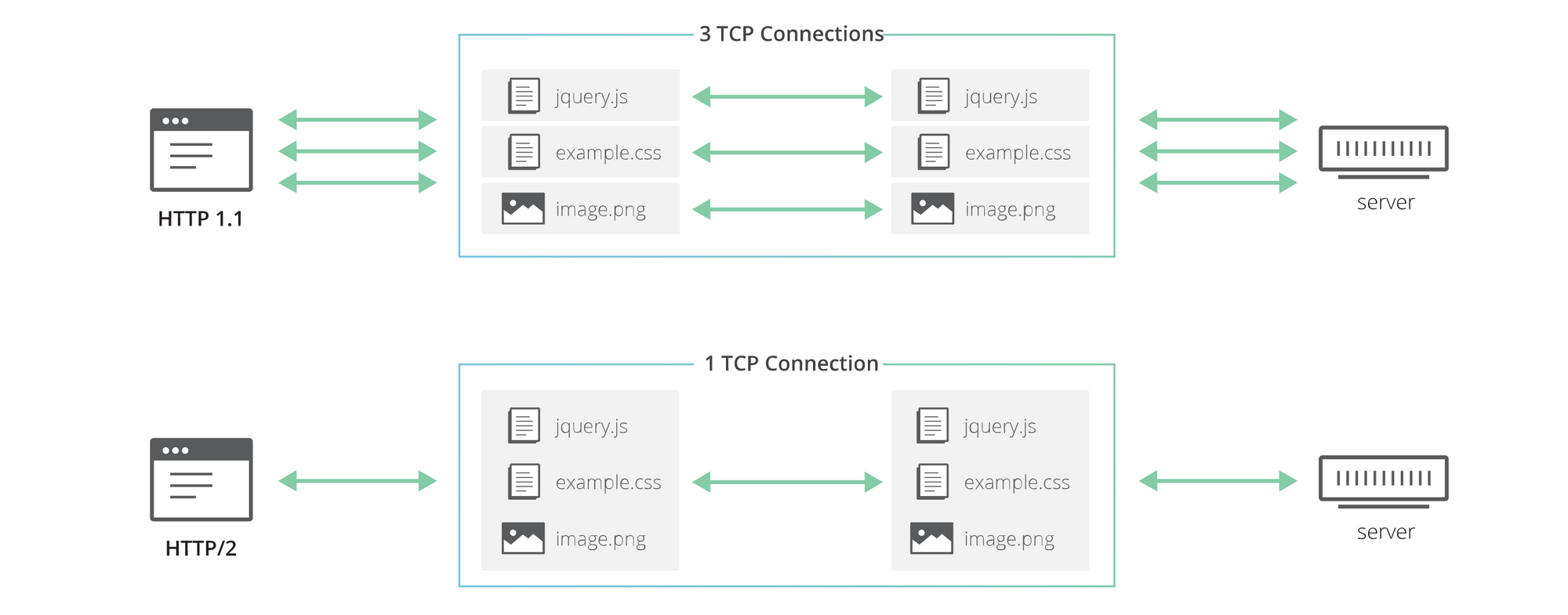

As the web evolved, browsers found themselves needing more and more concurrency when fetching and rendering web pages as the number of resources (CSS, JavaScript, images, …) required by each web site increased over the years. But since HTTP/1.1 only allowed clients to do one HTTP request/response exchange at a time, the only way to gain concurrency at the network layer was to use multiple TCP connections to the same origin in parallel, thus losing most of the benefits of keep-alive connections. While connections would still be reused to a certain (but lesser) extent, we were back at square one.

Finally, more than a decade later, came SPDY and then HTTP/2, which, among other things, introduced the concept of HTTP “streams”: an abstraction that allows HTTP implementations to concurrently multiplex different HTTP exchanges onto the same TCP connection, allowing browsers to more efficiently reuse TCP connections.

But, yet again, this was no silver bullet! HTTP/2 solves the original problem — inefficient use of a single TCP connection — since multiple requests/responses can now be transmitted over the same connection at the same time. However, all requests and responses are equally affected by packet loss (e.g. due to network congestion), even if the data that is lost only concerns a single request. This is because while the HTTP/2 layer can segregate different HTTP exchanges on separate streams, TCP has no knowledge of this abstraction, and all it sees is a stream of bytes with no particular meaning.

The role of TCP is to deliver the entire stream of bytes, in the correct order, from one endpoint to the other. When a TCP packet carrying some of those bytes is lost on the network path, it creates a gap in the stream and TCP needs to fill it by resending the affected packet when the loss is detected. While doing so, none of the successfully delivered bytes that follow the lost ones can be delivered to the application, even if they were not themselves lost and belong to a completely independent HTTP request. So they end up getting unnecessarily delayed as TCP cannot know whether the application would be able to process them without the missing bits. This problem is known as “head-of-line blocking”.
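The effect can be illustrated with a small simulation. This is a toy sketch, not real TCP or QUIC: each packet is reduced to a tuple of (connection-wide sequence number, stream name, offset within the stream), and the function reports the arrival step at which each packet could be handed to the application. With `per_stream=False`, ordering is enforced across the whole connection, as TCP does; with `per_stream=True`, ordering is enforced only within each stream.

```python
def delivery_steps(arrivals, per_stream):
    """Return {packet_seq: arrival step at which the packet is delivered}.

    arrivals: list of (seq, stream, offset) in the order packets arrive.
    per_stream=False: one ordered sequence for the whole connection (TCP-like).
    per_stream=True: ordering enforced independently per stream.
    """
    next_expected = {}  # ordering domain -> next position that may be delivered
    pending = {}        # (domain, position) -> packet sequence number
    delivered_at = {}   # packet sequence number -> step of delivery
    for step, (seq, stream, offset) in enumerate(arrivals):
        domain, position = (stream, offset) if per_stream else ("connection", seq)
        pending[(domain, position)] = seq
        # Hand over everything that is now contiguous in this ordering domain.
        while (domain, next_expected.get(domain, 0)) in pending:
            pos = next_expected.get(domain, 0)
            delivered_at[pending.pop((domain, pos))] = step
            next_expected[domain] = pos + 1
    return delivered_at


# Packets 0 and 2 belong to request A, packets 1 and 3 to request B.
# Packet 0 is lost and its retransmission arrives last.
arrivals = [(1, "B", 0), (2, "A", 1), (3, "B", 1), (0, "A", 0)]

single_ordered_stream = delivery_steps(arrivals, per_stream=False)
independent_streams = delivery_steps(arrivals, per_stream=True)
```

In the connection-wide case every packet, including both packets of request B, is held back until step 3 when the retransmission arrives. In the per-stream case request B's packets are delivered at steps 0 and 2, and only request A waits for the lost packet.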

Enter HTTP/3

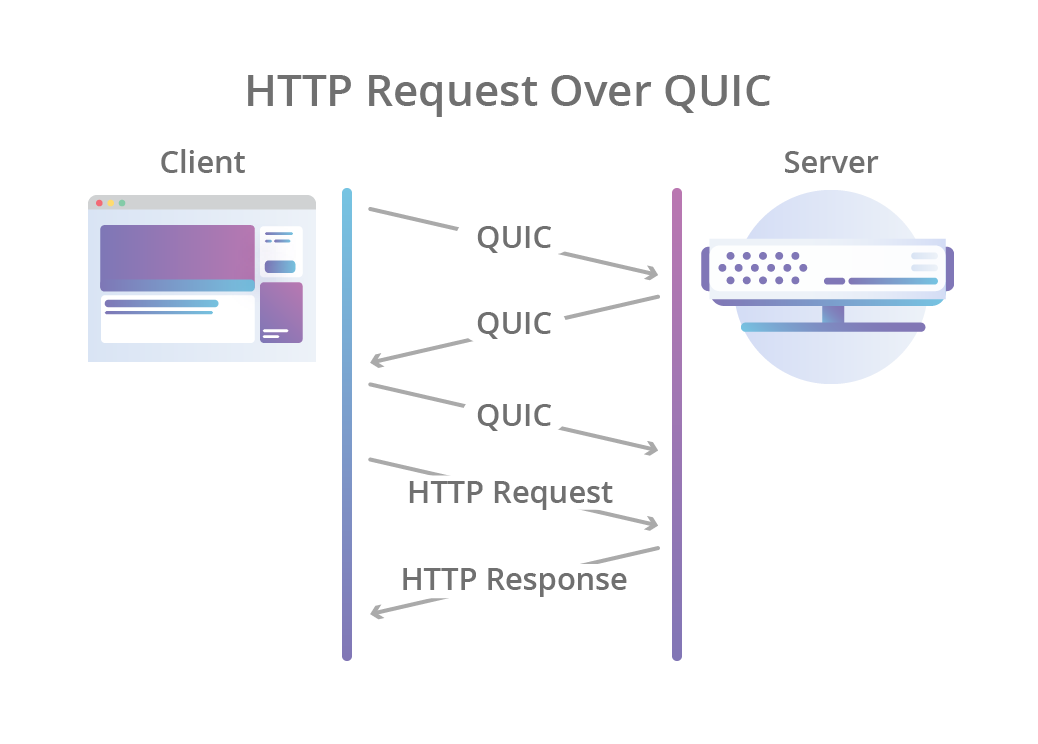

This is where HTTP/3 comes into play: instead of using TCP as the transport layer for the session, it uses QUIC, a new Internet transport protocol, which, among other things, introduces streams as first-class citizens at the transport layer. QUIC streams share the same QUIC connection, so no additional handshakes and slow starts are required to create new ones, but QUIC streams are delivered independently such that in most cases packet loss affecting one stream doesn't affect others. This is possible because QUIC packets are encapsulated on top of UDP datagrams.

Using UDP allows much more flexibility compared to TCP, and enables QUIC implementations to live fully in user-space — updates to the protocol’s implementations are not tied to operating systems updates as is the case with TCP. With QUIC, HTTP-level streams can be simply mapped on top of QUIC streams to get all the benefits of HTTP/2 without the head-of-line blocking.

QUIC also combines the typical 3-way TCP handshake with TLS 1.3's handshake. Combining these steps means that encryption and authentication are provided by default, and also enables faster connection establishment. In other words, even when a new QUIC connection is required for the initial request in an HTTP session, the latency incurred before data starts flowing is lower than that of TCP with TLS.
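As a rough back-of-the-envelope comparison, the sketch below counts round trips before the first byte of a response can arrive on a brand-new connection. The round-trip counts are idealized assumptions (a full TCP handshake followed by a full TLS 1.3 handshake, versus a single combined QUIC handshake, with no loss and no session resumption), and the function name is made up for illustration.

```python
# Idealized handshake round trips on a new connection (assumed values).
HANDSHAKE_ROUND_TRIPS = {
    "TCP + TLS 1.3": 1 + 1,  # TCP 3-way handshake, then the TLS 1.3 handshake
    "QUIC": 1,               # transport and TLS 1.3 handshakes combined
}


def time_to_first_byte_ms(transport, rtt_ms):
    """Handshake round trips plus one round trip for the request and response."""
    return (HANDSHAKE_ROUND_TRIPS[transport] + 1) * rtt_ms


tcp_tls_ms = time_to_first_byte_ms("TCP + TLS 1.3", rtt_ms=50)
quic_ms = time_to_first_byte_ms("QUIC", rtt_ms=50)
```

With a 50 ms round-trip time, this simple model gives 150 ms for TCP with TLS 1.3 and 100 ms for QUIC, and the gap grows with the latency of the path.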

But why not just use HTTP/2 on top of QUIC, instead of creating a whole new HTTP revision? After all, HTTP/2 also offers the stream multiplexing feature. As it turns out, it’s somewhat more complicated than that.

While it’s true that some of the HTTP/2 features can be mapped on top of QUIC very easily, that’s not true for all of them. One in particular, HTTP/2’s header compression scheme called HPACK, heavily depends on the order in which different HTTP requests and responses are delivered to the endpoints. QUIC enforces delivery order of bytes within single streams, but does not guarantee ordering among different streams.

This behavior required the creation of a new HTTP header compression scheme, called QPACK, which fixes the problem but requires changes to the HTTP mapping. In addition, some of the features offered by HTTP/2 (like per-stream flow control) are already offered by QUIC itself, so they were dropped from HTTP/3 in order to remove unnecessary complexity from the protocol.
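To see why a shared compression state depends on delivery order, consider the following toy model. It is loosely inspired by the idea of a dynamic header table and is not the actual HPACK or QPACK wire format; the class, the instruction tuples, and the indexing scheme are all invented for illustration.

```python
class ToyDynamicTable:
    """A header table shared by all requests on a connection (toy model)."""

    def __init__(self):
        self.entries = []

    def insert(self, name, value):
        self.entries.append((name, value))


def encode(table, headers):
    """Emit ('insert', name, value) the first time, ('ref', index) afterwards."""
    instructions = []
    for header in headers:
        if header in table.entries:
            instructions.append(("ref", table.entries.index(header)))
        else:
            table.insert(*header)
            instructions.append(("insert",) + header)
    return instructions


def decode(table, instructions):
    """Rebuild headers; a 'ref' fails if its 'insert' has not been seen yet."""
    headers = []
    for instruction in instructions:
        if instruction[0] == "insert":
            table.insert(instruction[1], instruction[2])
            headers.append((instruction[1], instruction[2]))
        else:
            headers.append(table.entries[instruction[1]])
    return headers


encoder = ToyDynamicTable()
first_request = encode(encoder, [("user-agent", "toy/1.0")])
second_request = encode(encoder, [("user-agent", "toy/1.0")])

# Delivered in order: both requests decode correctly.
in_order = ToyDynamicTable()
decoded = [decode(in_order, first_request), decode(in_order, second_request)]

# Delivered out of order: the reference points at an entry that is not there yet.
out_of_order = ToyDynamicTable()
try:
    decode(out_of_order, second_request)
    out_of_order_failed = False
except IndexError:
    out_of_order_failed = True
```

When both requests travel over a single ordered byte stream the second one can safely refer to state created by the first. Once each request travels on its own independently delivered stream, that assumption no longer holds, and the compression scheme has to account for it.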

HTTP/3, powered by a delicious quiche

QUIC and HTTP/3 are very exciting standards, promising to address many of the shortcomings of previous standards and ushering in a new era of performance on the web. So how do we go from exciting standards documents to working implementation?

Cloudflare's QUIC and HTTP/3 support is powered by quiche, our own open-source implementation written in Rust.

You can find it on GitHub at github.com/cloudflare/quiche.

We announced quiche a few months ago and since then have added support for the HTTP/3 protocol, on top of the existing QUIC support. We have designed quiche in such a way that it can now be used to implement HTTP/3 clients and servers or just plain QUIC ones.

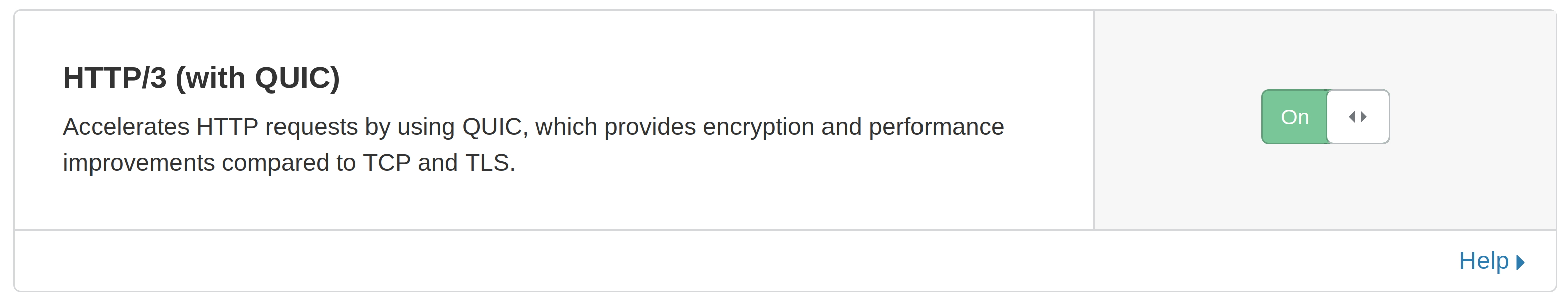

How do I enable HTTP/3 for my domain?

As mentioned above, we have started onboarding customers that signed up for the waiting list. If you are on the waiting list and have received an email from us communicating that you can now enable the feature for your websites, you can simply go to the Cloudflare dashboard and flip the switch manually from the "Network" tab.

We expect to make the HTTP/3 feature available to all customers in the near future.

Once enabled, you can experiment with HTTP/3 in a number of ways:

Using Google Chrome as an HTTP/3 client

In order to use the Chrome browser to connect to your website over HTTP/3, you first need to download and install the latest Canary build. Then all you need to do to enable HTTP/3 support is to start Chrome Canary with the “--enable-quic” and “--quic-version=h3-23” command-line arguments.

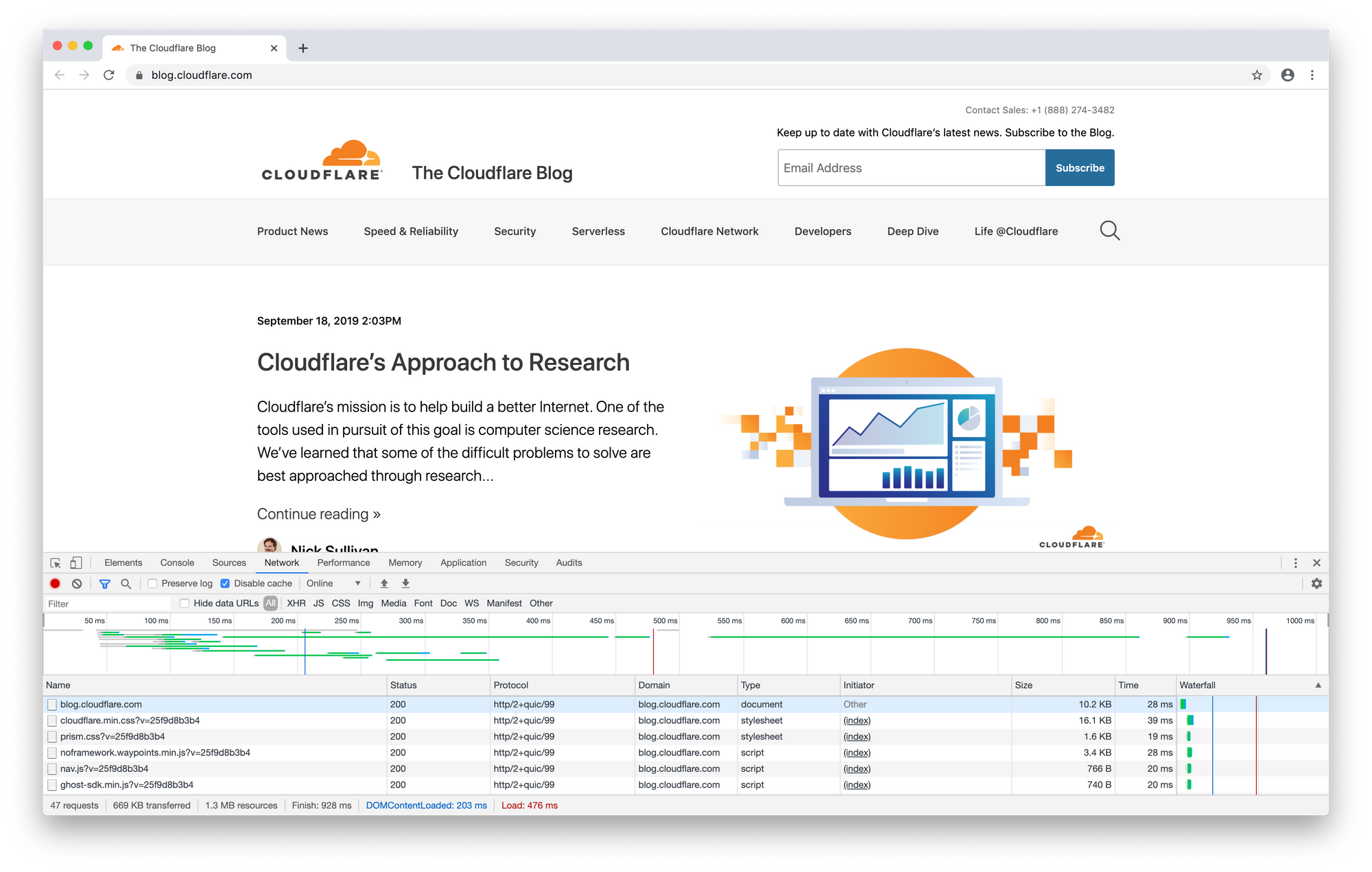

Once Chrome is started with the required arguments, you can just type your domain in the address bar, and see it loaded over HTTP/3 (you can use the Network tab in Chrome’s Developer Tools to check what protocol version was used). Note that due to how HTTP/3 is negotiated between the browser and the server, HTTP/3 might not be used for the first few connections to the domain, so you should try to reload the page a few times.
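The reason the first connections may not use HTTP/3 is that a client typically first reaches the server over an earlier HTTP version and learns about HTTP/3 availability from the Alt-Svc response header, such as the `alt-svc: h3-23=":443"; ma=86400` line in the curl output in this post. The following is a minimal sketch of reading such a header value. It is a simplified parser written for illustration (the function name is hypothetical, and it does not handle every form the header can take, such as the `clear` value or commas inside quoted strings).

```python
def parse_alt_svc(value):
    """Parse a simple Alt-Svc value into (protocol, authority, max_age) tuples.

    max_age is None when the entry carries no "ma" parameter.
    """
    services = []
    for entry in value.split(","):
        parts = [part.strip() for part in entry.split(";")]
        protocol, _, authority = parts[0].partition("=")
        max_age = None
        for param in parts[1:]:
            key, _, raw = param.partition("=")
            if key.strip() == "ma":
                max_age = int(raw)
        services.append((protocol.strip(), authority.strip('"'), max_age))
    return services


advertised = parse_alt_svc('h3-23=":443"; ma=86400')
supports_h3_23 = any(protocol == "h3-23" for protocol, _, _ in advertised)
```

Here the server advertises the `h3-23` draft version on port 443, and the client may remember that for 86400 seconds, so later connections can go straight to HTTP/3.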

If this seems too complicated, don’t worry: as the HTTP/3 support in Chrome becomes more stable over time, enabling HTTP/3 will become easier.

This is what the Network tab in the Developer Tools shows when browsing this very blog over HTTP/3:

Note that due to the experimental nature of the HTTP/3 support in Chrome, the protocol is actually identified as “http2+quic/99” in Developer Tools, but don’t let that fool you, it is indeed HTTP/3.

Using curl

The curl command-line tool also supports HTTP/3 as an experimental feature. You’ll need to download the latest version from git and follow the instructions on how to enable HTTP/3 support.

If you're running macOS, we've also made it easy to install an HTTP/3 equipped version of curl via Homebrew:

% brew install --HEAD -s https://raw.githubusercontent.com/cloudflare/homebrew-cloudflare/master/curl.rb

In order to perform an HTTP/3 request all you need is to add the “--http3” command-line flag to a normal curl command:

% ./curl -I https://blog.cloudflare.com/ --http3

HTTP/3 200

date: Tue, 17 Sep 2019 12:27:07 GMT

content-type: text/html; charset=utf-8

set-cookie: __cfduid=d3fc7b95edd40bc69c7d894d296564df31568723227; expires=Wed, 16-Sep-20 12:27:07 GMT; path=/; domain=.blog.cloudflare.com; HttpOnly; Secure

x-powered-by: Express

cache-control: public, max-age=60

vary: Accept-Encoding

cf-cache-status: HIT

age: 57

expires: Tue, 17 Sep 2019 12:28:07 GMT

alt-svc: h3-23=":443"; ma=86400

expect-ct: max-age=604800, report-uri="https://report-uri.cloudflare.com/cdn-cgi/beacon/expect-ct"

server: cloudflare

cf-ray: 517b128df871bfe3-MAN

Using quiche’s http3-client

Finally, we also provide an example HTTP/3 command-line client (as well as a command-line server) built on top of quiche, which you can use to experiment with HTTP/3.

To get it running, first clone quiche’s GitHub repository:

$ git clone --recursive https://github.com/cloudflare/quiche

Then build it. You need a working Rust and Cargo installation for this to work (we recommend using rustup to easily set up a working Rust development environment).

$ cargo build --examples

And finally you can execute an HTTP/3 request:

$ RUST_LOG=info target/debug/examples/http3-client https://blog.cloudflare.com/

What’s next?

In the coming months we’ll be working on improving and optimizing our QUIC and HTTP/3 implementation, and will eventually allow everyone to enable this new feature without having to go through a waiting list. We'll continue updating our implementation as standards evolve, which may result in breaking changes between draft versions of the standards.

Here are a few new features on our roadmap that we're particularly excited about:

Connection migration

One important feature that QUIC enables is seamless and transparent migration of connections between different networks (such as your home WiFi network and your carrier’s mobile network as you leave for work in the morning) without requiring a whole new connection to be created.
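Migration is possible because a QUIC connection is identified by a connection ID carried in its packets rather than by the client's IP address and port. The sketch below contrasts the two lookup strategies on a server. It is purely illustrative: the dictionaries, field names, addresses, and the connection ID value are made up, and the path validation a real implementation performs before accepting a new client address is left out.

```python
def find_session_by_four_tuple(sessions, packet):
    """TCP-style lookup: the connection is identified by addresses and ports."""
    key = (packet["src_ip"], packet["src_port"], packet["dst_ip"], packet["dst_port"])
    return sessions.get(key)


def find_session_by_connection_id(sessions, packet):
    """QUIC-style lookup: the connection is identified by its connection ID."""
    return sessions.get(packet["connection_id"])


# The same client before and after moving from WiFi to a mobile network.
on_wifi = {
    "src_ip": "192.0.2.10", "src_port": 50000,
    "dst_ip": "203.0.113.1", "dst_port": 443,
    "connection_id": "c1d2",
}
on_mobile = dict(on_wifi, src_ip="198.51.100.7", src_port=41000)

four_tuple_sessions = {("192.0.2.10", 50000, "203.0.113.1", 443): "session-A"}
connection_id_sessions = {"c1d2": "session-A"}

tuple_lookup_after_move = find_session_by_four_tuple(four_tuple_sessions, on_mobile)
id_lookup_after_move = find_session_by_connection_id(connection_id_sessions, on_mobile)
```

After the network change the address-based lookup no longer finds the session, while the connection ID lookup still resolves to the same one.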

This feature will require some additional changes to our infrastructure, but it’s something we are excited to offer our customers in the future.

Zero Round Trip Time Resumption

Just like TLS 1.3, QUIC supports a mode of operation that allows clients to start sending HTTP requests before the connection handshake has completed. We don’t yet support this feature in our QUIC deployment, but we’ll be working on making it available, just like we already do for our TLS 1.3 support.

HTTP/3: it's alive!

We are excited to support HTTP/3 and allow our customers to experiment with it while efforts to standardize QUIC and HTTP/3 are still ongoing. We'll continue working alongside other organizations, including Google and Mozilla, to finalize the QUIC and HTTP/3 standards and encourage broad adoption.

Here's to a faster, more reliable, more secure web experience for all.