On the WWW team, we’re responsible for Cloudflare’s REST APIs, account management services and the dashboard experience. We take security and PCI compliance seriously, which means we move quickly to stay up to date with regulations and relevant laws.

A recent compliance project required us to detect certain end-user request data at the edge and react to it, both in API responses and visually in the dashboard. We realized that this was an excellent opportunity to dogfood Cloudflare Workers.

Deploying workers to www.cloudflare.com and api.cloudflare.com

In this blog post, we’ll break down the problem we solved using a single worker that we shipped to multiple hosts, share the annotated source code of our worker, and share some best practices, tips, and tricks we discovered along the way.

Since its deployment, our worker has served over 400 million requests across both api.cloudflare.com and the www.cloudflare.com dashboard.

The task

First, we needed to detect when a client was connecting to our services using an outdated TLS protocol version. Next, we wanted to pass this information deeper into our application stack so that we could act on it and conditionally decorate our responses with notices warning about the imminent changes.

Our Edge team was quick to create a patch to capture TLS connection information for every incoming request, but how would we propagate it to our application layer where it could be acted upon?

The solution

The Workers team modified the core platform to ingest the TLS protocol version data sent from the edge, exposing it to worker scripts as the tlsVersion property of the request's cf object. (You can now use this property in your own workers.)

With our workers able to inspect the TLS protocol versions of requests, we needed only to append a custom HTTP header containing this information before forwarding them into our application layer.
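To make that step concrete, here is a minimal sketch of the header logic pulled out into a pure function. The name withTLSHeader is hypothetical, and the example version strings such as "TLSv1.2" are an assumption about what the edge reports. It relies only on the standard Headers class, which both Workers and Node 18+ provide.

```javascript
// Hypothetical helper mirroring our worker's header logic: copy the incoming
// headers and record the TLS version the edge reported, or "NONE" if absent.
function withTLSHeader(request) {
  const cf = request.cf;
  const tlsVersion =
    cf && typeof cf.tlsVersion === "string" && cf.tlsVersion !== ""
      ? cf.tlsVersion
      : "NONE";

  // Copy the headers so the incoming request itself is left untouched
  const headers = new Headers(request.headers);

  // set() replaces any value a client sent under the same name,
  // so the origin only ever sees the edge-reported version
  headers.set("X-Client-SSL-Protocol", tlsVersion);
  return headers;
}
```

Inside a worker you would pass event.request and hand the result to fetch(event.request, { headers }). Outside Workers, any object with headers and cf properties will do, which makes the logic easy to unit test.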

Our APIs use this data to add deprecation warnings to responses, and our UI uses it to display banners explaining the upcoming changes.
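On the origin side, the check can be as simple as comparing the forwarded header against the versions slated for removal. The sketch below is hypothetical: DEPRECATED_TLS_VERSIONS, deprecationNotice, and the notice wording are illustrative assumptions rather than our production code, and it is written in JavaScript only for consistency with the worker; your origin can use any language.

```javascript
// Hypothetical set of protocol versions scheduled for deprecation.
// The exact strings the edge reports are an assumption here.
const DEPRECATED_TLS_VERSIONS = new Set(["TLSv1", "TLSv1.1"]);

// Returns a notice for clients on a deprecated TLS version, or null otherwise.
// A missing header or the worker's "NONE" fallback produces no notice.
function deprecationNotice(headers) {
  const version = headers.get("X-Client-SSL-Protocol");
  if (version === null || !DEPRECATED_TLS_VERSIONS.has(version)) {
    return null;
  }
  return `Your client connected using ${version}, which will soon stop being supported. Please upgrade to TLS 1.2 or later.`;
}
```

An API handler could attach a non-null notice to its JSON response, and the dashboard could use the same value to decide whether to render a banner.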

Let's now take a look at the source code for our worker.

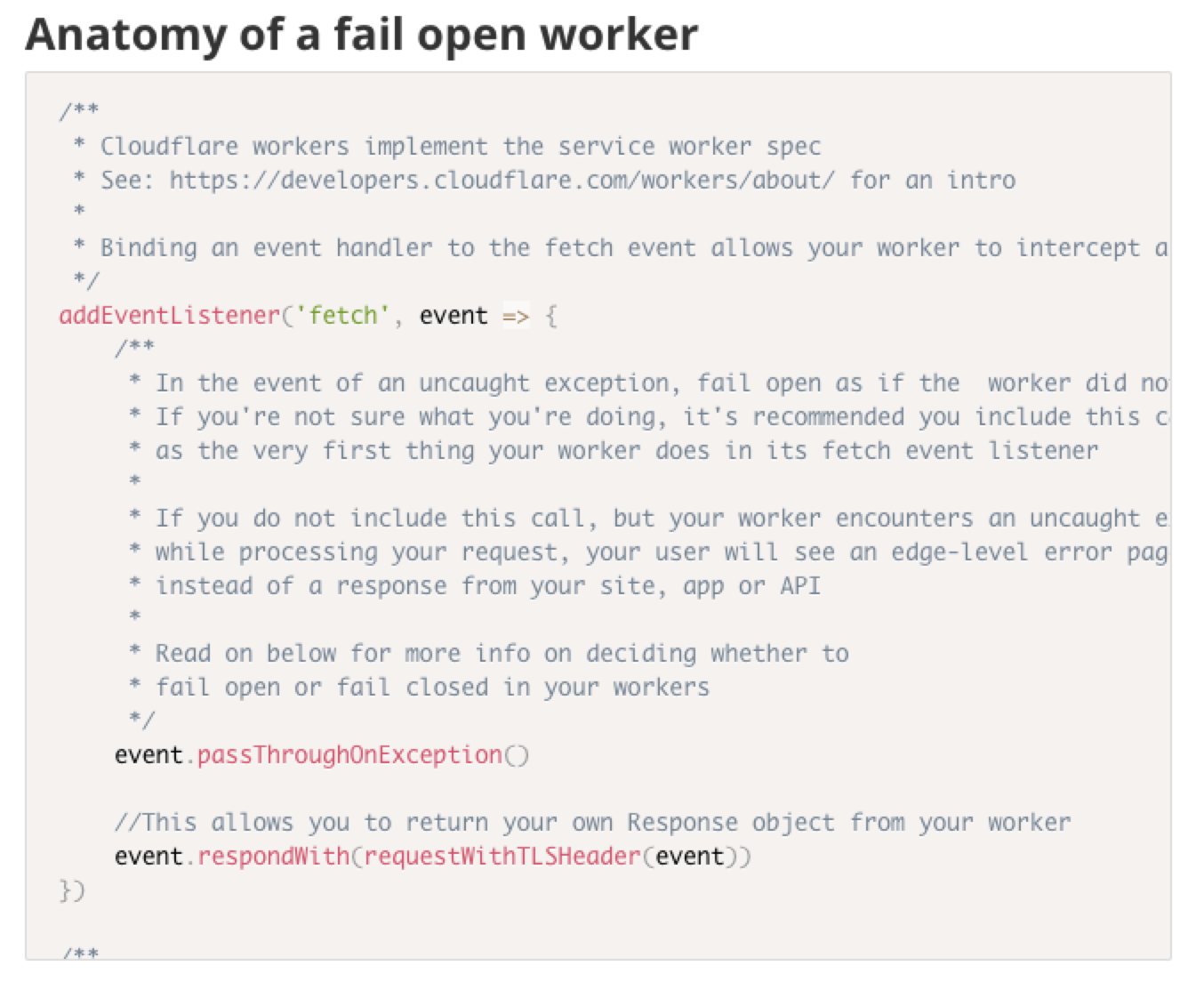

Anatomy of a fail open worker

/**
 * Cloudflare Workers implement the Service Worker spec
 * See: https://developers.cloudflare.com/workers/about/ for an intro
 *
 * Binding an event handler to the fetch event allows your worker to intercept a request for your zone
 */
addEventListener('fetch', event => {
  /**
   * In the event of an uncaught exception, fail open as if the worker did not exist
   * If you're not sure what you're doing, it's recommended you include this call
   * as the very first thing your worker does in its fetch event listener
   *
   * If you do not include this call, but your worker encounters an uncaught exception
   * while processing your request, your user will see an edge-level error page
   * instead of a response from your site, app or API
   *
   * Read on below for more info on deciding whether to
   * fail open or fail closed in your workers
   */
  event.passThroughOnException()

  // This allows you to return your own Response object from your worker
  event.respondWith(requestWithTLSHeader(event))
})

/**
 * Calls out to our Sentry account to create an exception event
 *
 * Note that for this to work properly with the event.waitUntil() call in the
 * exception block within requestWithTLSHeader, this function must return a promise
 *
 * @returns {Promise}
 */
function promisifiedSentryLog(ex) {
  // Change these constants to your own Sentry values if you want to use this script
  const sentryProjectId = '<Replace-Me-With-Your-Sentry-Project-Id>'
  const sentryAPIKey = '<Replace-Me-With-Your-Sentry-API-Key>'
  const sentrySecretKey = '<Replace-Me-With-Your-Sentry-Secret-Key>'

  // Manually configure our call to Sentry
  const b = {
    project: sentryProjectId,
    logger: "javascript",
    platform: "javascript",
    exception: {
      values: [
        { type: "Error", value: (ex && ex.message) ? ex.message : 'Unknown' }
      ]
    }
  }

  const sentryUrl = `https://sentry.io/api/${sentryProjectId}/store/?sentry_version=7&sentry_client=raven-js%2F3.24.2&sentry_key=${sentryAPIKey}&sentry_secret=${sentrySecretKey}`

  /**
   * Fire off a POST request to Sentry's API, which includes our project
   * and credentials information, plus arbitrary logging data
   *
   * In this case, we're passing along the exception message,
   * but you could use this pattern to log anything you want
   *
   * Keep in mind that fetch returns a promise,
   * which is what makes this function compatible with event.waitUntil
   */
  return fetch(sentryUrl, { body: JSON.stringify(b), method: 'POST' })
}

/**
 * Creates a new request for the backend that mirrors the incoming request,
 * with the addition of a new header that specifies which TLS version was used
 * in the connection to the edge
 *
 * This is the main function that contains the core logic for this worker
 *
 * It works by checking for the presence of a property 'tlsVersion' that is being forwarded
 * from the edge into the workers platform so that worker scripts can access it
 *
 * The worker starts with a default TLS header. If the tlsVersion property,
 * which represents the version of the TLS protocol the client connected with,
 * is present, the worker sets its local tlsVersion variable to the value of this property
 *
 * It then copies the incoming request headers into a new Headers object,
 * which enables us to set our own custom X-Client-SSL-Protocol header
 *
 * The worker then forwards the original request
 * (overriding the headers with our new Headers object) to the origin
 *
 * Now, our application layer can act upon this information
 * to show modals and include deprecation warnings as necessary
 *
 * @returns {Promise}
 */
async function requestWithTLSHeader(event) {
  // It's strongly recommended that you wrap your core worker logic in a try / catch block
  try {
    let tlsVersion = "NONE"

    // Create a new Headers object that includes the original request's headers
    const reqHeaders = new Headers(event.request.headers)

    const cf = event.request.cf
    if (cf && typeof cf.tlsVersion === "string" && cf.tlsVersion !== "") {
      tlsVersion = cf.tlsVersion
    }

    // Add our new header; set() overwrites any value a client sent under the same name
    reqHeaders.set('X-Client-SSL-Protocol', tlsVersion)

    // Override the original request's headers with our own, but otherwise fetch the original request
    return await fetch(event.request, { headers: reqHeaders })
  } catch (ex) {
    /**
     * Signal the runtime that it should wait until the promise resolves
     *
     * This avoids race conditions where the runtime stops execution before
     * our async Sentry task completes
     *
     * If you do not do this, the passthrough subrequest will race
     * your pending asynchronous request to Sentry, and you will
     * miss many events / fail to capture them correctly
     */
    event.waitUntil(promisifiedSentryLog(ex))

    /**
     * Intentionally rethrow the exception in order to trigger the pass-through
     * behavior defined by event.passThroughOnException()
     *
     * This means that our worker will fail open, and not block requests
     * to our backend services due to unexpected exceptions
     */
    throw ex
  }
}

The above Workers script was updated on 5/17/18 to correct how it calls Sentry's API.

Asynchrony, logging and alerts via Sentry

We decided to use Sentry to capture events we sent from our worker, but you could follow this same pattern with any similar service.

The critical piece to making this work is understanding that you must signal to the Cloudflare Workers runtime that it needs to wait for your asynchronous logging subrequest (and not cancel it).

You do this by:

Ensuring that your logging function returns a promise (what your promise resolves to does not matter)

Wrapping your call to your logging function in event.waitUntil as we have done above
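A logging helper that satisfies both rules might look like the sketch below. It is a hypothetical, service-agnostic variant of promisifiedSentryLog: logToService, the endpoint URL, and the payload shape are placeholders, and fetchFn is injected (defaulting to the global fetch) so the helper can be exercised without a network. It also catches its own errors, so a failed log call never surfaces as a second exception.

```javascript
// Hypothetical generic logger: POSTs a JSON payload to a logging endpoint.
// It always returns a promise, which is all event.waitUntil() needs, and it
// swallows delivery errors (including synchronous throws from fetchFn).
function logToService(url, payload, fetchFn = fetch) {
  return Promise.resolve()
    .then(() =>
      fetchFn(url, {
        method: "POST",
        headers: { "Content-Type": "application/json" },
        body: JSON.stringify(payload),
      })
    )
    .catch(() => undefined);
}

// In a fetch handler's catch block (LOG_URL is a hypothetical constant):
//   event.waitUntil(logToService(LOG_URL, { message: ex.message }));
//   throw ex;
```

Swallowing errors is a design choice: logging is best-effort here, and a rejected waitUntil promise would only add noise on a request that is already failing.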

This pattern fixes a common race condition: if you don't use event.waitUntil, the runtime will race the passthrough subrequest against your logging subrequest.

If the passthrough subrequest completes significantly faster than your logging subrequest, the logging request could be cancelled. In practice, this shows up as dropped log messages: whether a given exception is logged properly becomes a roll of the dice on every request.
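The race is easier to see in a toy model. The sketch below does not use the real Workers runtime: runToyRequest and slowLog are hypothetical stand-ins that approximate the behavior described above. Once the handler settles, the toy "runtime" waits only for promises registered through waitUntil, then tears the request down, and any log write that lands after teardown counts as dropped.

```javascript
// Toy stand-in for the runtime (an assumption, not real Workers internals):
// after the handler settles, it waits only for waitUntil() promises,
// then marks the request as torn down.
async function runToyRequest(handler) {
  const pending = [];
  let tornDown = false;
  const event = {
    waitUntil(promise) {
      pending.push(promise);
    },
    isTornDown: () => tornDown,
  };
  try {
    await handler(event);
  } catch (_) {
    // The real runtime would pass the request through here after
    // passThroughOnException(); the toy model just moves on.
  }
  await Promise.allSettled(pending);
  tornDown = true;
}

// Simulated slow logging subrequest: only delivers if the request is still alive.
function slowLog(event, sink, message, delayMs = 20) {
  return new Promise(resolve =>
    setTimeout(() => {
      if (!event.isTornDown()) sink.push(message);
      resolve();
    }, delayMs)
  );
}
```

Running one handler that passes slowLog(...) to event.waitUntil and another that merely calls it, the first message is delivered and the second is dropped. In production the unregistered case is timing-dependent rather than always lost, which is exactly why it looks like randomly missing events.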

For additional insight, check out our official guide to debugging Cloudflare workers.

Failing open to ensure service continuity

A key consideration when designing your worker is failure behavior. Depending on what your particular worker is accomplishing, you want it to either fail open or fail closed. Failing open means that if something goes horribly wrong, the original request will be passed through as if your worker did not exist, while failing closed means that a request that raises an exception in your worker will not be processed further.

If you are editing metadata, collecting metrics, or adding new non-critical HTTP headers, to name a few examples, you probably don't want an unhandled exception in your worker to prevent the request from being serviced.

In this case, you can use event.passThroughOnException as we did above, and we recommend calling this method on the first line of your fetch event handler. It sets a flag that the Cloudflare Workers request handler inspects when an exception occurs to determine whether the request should be passed through.
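Because the flag is set per request, a worker that fronts both kinds of traffic could decide case by case. The sketch below is hypothetical: shouldFailOpen, handleFetch, and the SENSITIVE_PATH_PREFIXES list are illustrative names, and it only shows where passThroughOnException() would be called. Requests that skip the call keep the default fail-closed behavior.

```javascript
// Hypothetical path prefixes that must never bypass the worker's checks.
const SENSITIVE_PATH_PREFIXES = ["/admin", "/billing"];

// Returns true when an unhandled exception should fall through to the origin.
function shouldFailOpen(url) {
  const { pathname } = new URL(url);
  return !SENSITIVE_PATH_PREFIXES.some(prefix => pathname.startsWith(prefix));
}

// Sketch of a fetch handler body; handleRequest is whatever your worker does.
function handleFetch(event, handleRequest) {
  // Set the pass-through flag before any other work, and only for
  // non-sensitive paths; everything else keeps the fail-closed default.
  if (shouldFailOpen(event.request.url)) {
    event.passThroughOnException();
  }
  event.respondWith(handleRequest(event));
}

// Wiring in a worker script (only runs inside Workers):
//   addEventListener('fetch', event => handleFetch(event, requestWithTLSHeader));
```

Simple prefix matching is deliberately crude here; a real deployment would want the sensitive-route list to match its own URL scheme exactly.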

On the other hand, if your worker plays a security-centric role, such as blocking malicious requests from bots or blocking access to sensitive endpoints when valid credentials are not supplied, you may want it to fail closed. This is the default behavior: requests that raise exceptions in your worker are not processed further.
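By default, failing closed means the visitor sees an edge-level error page. If you would rather control what a blocked client receives, one option is to catch the exception yourself and return a deliberate error response. The sketch below assumes a hypothetical failClosed wrapper and a checkCredentials handler; the 503 status and response body are illustrative choices, not a recommendation from the Workers documentation.

```javascript
// Hypothetical wrapper: runs a request handler and, if it throws, returns an
// explicit error response instead of forwarding the request to the origin.
function failClosed(handler) {
  return async event => {
    try {
      return await handler(event);
    } catch (ex) {
      // ex could also be reported with event.waitUntil(...), as in our worker.
      // Nothing reaches the origin; the client gets a deliberate error instead.
      return new Response("Request blocked: security check unavailable", {
        status: 503,
        headers: { "Content-Type": "text/plain" },
      });
    }
  };
}

// Wiring in a worker script (note: no passThroughOnException() call):
//   addEventListener('fetch', event => event.respondWith(failClosed(checkCredentials)(event)));
```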

Our services are in the critical path for our customers, and our worker only conditionally adds a new, non-critical HTTP header, so we knew we wanted it to fail open.

Having your worker generate PagerDuty and HipChat alerts

Now that you've done the work to get data from your worker logged to Sentry, you can leverage Sentry's PagerDuty integration to configure alerts for exceptions that occur in your workers.

This will increase your team's confidence in your worker-based solutions, and alert you immediately to any new issues that occur in production.

Share your worker recipes

You can find additional worker recipes and examples in our official documentation.

Have you written a worker that you'd like to share? Send it to us and you might get featured on our blog or added to our Cloudflare worker recipe collection with a credit.