I originally wrote this article for the Web Performance Calendar website, which is a terrific resource of expert opinions on making your website as fast as possible. We thought CloudFlare users would be interested so we reproduced it here. Enjoy!

In 2004, Lee Holloway and I started Project Honey Pot. The site, which tracks online fraud and abuse, primarily consists of web pages that report the reputation of IP addresses. We had limited resources and tried to get the most out of them, yet when I just checked Google, it listed more than 31 million pages in its index for the www.projecthoneypot.org site.

Project Honey Pot's pages are relatively simple and asset light, but like many sites today they include significant dynamic content that is regularly updated at unpredictable intervals. To deliver near realtime updates, the pages need to be database driven.

To maximize performance of the site, from the beginning we used a number of different caching layers to store the most frequently accessed pages. Lee, whose background is high-performance database design, studied reports from services like Google Analytics to understand how visitors moved through the site and built caching to keep regularly accessed pages from needing to hit the database.

We thought we were pretty smart but, in spite of following the best practices of web application performance design, with alarming frequency the site would grind to a halt. The culprit turned out to be something unexpected and hidden from the view of many people optimizing web performance: automated bots.

The average website sees more than 20% of its requests coming from some sort of automated bot. These bots include the usual suspects like search engine crawlers, but also include malicious bots scanning for vulnerabilities or harvesting data. We've been tracking this data at CloudFlare across hundreds of thousands of sites on our network and have found that on average approximately 15% of total web requests originate from a web threat of one form or another, with swings up and down depending on the day.

In Project Honey Pot's case, the traffic from these bots had a significant performance impact. Because they did not follow the typical human visitation pattern, they often requested pages that weren't hot in our cache. Moreover, since the bots typically didn't fire JavaScript beacons like those used in systems like Google Analytics, their traffic and its impact weren't immediately obvious.

To solve the problem, we implemented two different systems to deal with two different types of bots. Because we had great data on web threats, we were able to leverage that to restrict known malicious crawlers from requesting dynamic pages on the site. Just taking off the threat traffic had an immediate impact and freed up database resources for legitimate visitors.
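As a rough illustration of the first system, the check below refuses dynamic pages to addresses on a threat list. It is a minimal sketch, not the code Project Honey Pot ran: the threat networks are placeholder documentation ranges, and `DYNAMIC_PREFIXES`, `is_known_threat`, and `should_block` are hypothetical names chosen for this example.

```python
from ipaddress import ip_address, ip_network

# Placeholder threat list built from reserved documentation ranges.
# A real deployment would load this from its own reputation data.
KNOWN_THREAT_NETWORKS = [
    ip_network("203.0.113.0/24"),
    ip_network("198.51.100.7/32"),
]

# Hypothetical URL prefixes for pages that require a database query.
DYNAMIC_PREFIXES = ("/ip/", "/search")


def is_known_threat(remote_addr: str) -> bool:
    """Return True if the address falls inside any listed threat network."""
    addr = ip_address(remote_addr)
    return any(addr in network for network in KNOWN_THREAT_NETWORKS)


def should_block(remote_addr: str, path: str) -> bool:
    """Block only dynamic pages, and only for known threats."""
    return path.startswith(DYNAMIC_PREFIXES) and is_known_threat(remote_addr)
```

Limiting the block to dynamic paths keeps the check cheap for static assets and focuses it on the requests that actually consume database resources.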

The same approach didn't make sense for the other type of automated bots: search engine crawlers. We wanted Project Honey Pot's pages to be found through online searches, so we didn't want to block search engine crawlers entirely. However, in spite of removing the threat traffic, Google, Yahoo, and Microsoft's crawlers all accessing the site at the same time would sometimes cause the web server and database to slow to a crawl.

The solution was a modification of our caching strategy. While we wanted to deliver the latest results to human visitors, we began serving search crawlers from a cache with a longer time to live (TTL). We experimented with different TTLs for pages, and eventually settled on 1 day as being optimal for the Project Honey Pot site. If a page is crawled by Google today and then Baidu requests the same page within the next 24 hours, we return the cached version without regenerating the page from the database.
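The idea can be sketched in a few lines. This is an illustrative in-memory version under stated assumptions, not the original implementation: crawlers are recognized by hypothetical User-Agent substrings, humans always get a freshly rendered page, and `CrawlerAwareCache` and its `render` callback are names invented for this example.

```python
import time
from typing import Callable, Dict, Tuple

# Hypothetical User-Agent substrings used to recognize search crawlers.
CRAWLER_TOKENS = ("googlebot", "bingbot", "baiduspider", "slurp")

HUMAN_TTL = 0                # assumption: humans always see fresh data
CRAWLER_TTL = 24 * 60 * 60   # one day, the value settled on in the text


def is_search_crawler(user_agent: str) -> bool:
    ua = user_agent.lower()
    return any(token in ua for token in CRAWLER_TOKENS)


class CrawlerAwareCache:
    """Serve crawlers from a long-lived cache and humans from fresh renders."""

    def __init__(self, render: Callable[[str], str],
                 clock: Callable[[], float] = time.time) -> None:
        self._render = render    # expensive, database-backed page builder
        self._clock = clock      # injectable so the TTL logic can be tested
        self._store: Dict[str, Tuple[float, str]] = {}

    def get(self, path: str, user_agent: str) -> str:
        ttl = CRAWLER_TTL if is_search_crawler(user_agent) else HUMAN_TTL
        now = self._clock()
        entry = self._store.get(path)
        if entry is not None and now - entry[0] < ttl:
            return entry[1]      # cache hit: no database work
        body = self._render(path)
        self._store[path] = (now, body)  # any fresh render refreshes the cache
        return body
```

Because the cache is keyed by path rather than by crawler, a page rendered for one search engine is reused for every other crawler that asks for it within the TTL.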

Search engines, by their nature, see a snapshot of the Internet. While it is important to not serve deceptively different content to their crawlers, modifying your caching strategy to minimize their performance impact on your web application is well within the bounds of good web practices.

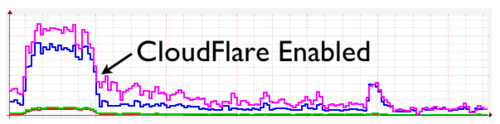

Since starting CloudFlare, we've taken the caching strategy we developed at Project Honey Pot and made it more intelligent and dynamic to optimize performance. We automatically tune the search crawler TTL to the characteristics of the site, and are very good at keeping malicious crawlers from ever hitting your web application. On average, we're able to offload 70% of the requests from a web application — which is stunning given the entire CloudFlare configuration process takes about 5 minutes. While some of this performance benefit comes from traditional CDN-like caching, some of the biggest cache wins actually come from handling bots' deep page views that aren't alleviated by traditional caching strategies.

The results can be dramatic. For example, SXSW's website employs extensive traditional web application and database caching systems but was able to reduce the load on their web servers and database machines by more than 50%, in large part because of CloudFlare's bot-aware caching.

When you're tuning your web application for maximum performance, if you're only looking at a beacon-based analytics tool like Google Analytics you may be missing one of the biggest sources of web application load. This is why CloudFlare's analytics reports the visits from all visitors to your site. Even without CloudFlare, digging through your raw server logs, being bot-aware, and building caching strategies that differentiate between the behaviors of different classes of visitors can be an important aspect of any site's web performance strategy.
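For readers who want to start with their own logs, here is a small sketch that tallies requests by visitor class. It assumes the common "combined" access log format and classifies purely by User-Agent substrings, so bots that disguise themselves as browsers will land in the "other" bucket. The names `LOG_LINE`, `BOT_TOKENS`, `classify`, and `summarize` are hypothetical.

```python
import re
from collections import Counter
from typing import Iterable

# Matches the combined log format: ip, identity, user, [time],
# "request", status, size, "referer", "user agent".
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Hypothetical substrings that suggest a self-identified bot.
BOT_TOKENS = ("bot", "spider", "crawl", "slurp")


def classify(agent: str) -> str:
    ua = agent.lower()
    return "bot" if any(token in ua for token in BOT_TOKENS) else "other"


def summarize(lines: Iterable[str]) -> Counter:
    """Count requests per visitor class; unmatched lines are 'unparsed'."""
    counts: Counter = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None:
            counts["unparsed"] += 1
            continue
        counts[classify(match.group("agent"))] += 1
    return counts
```

Comparing the "bot" count against what a beacon-based analytics tool reports for the same period gives a first estimate of how much load is invisible to that tool.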