During Developer Week, we announced LangChain support for Cloudflare Workers. LangChain is an open-source framework that allows developers to create powerful AI workflows by combining different models, providers, and plugins using a declarative API, and it dovetails perfectly with Workers for creating full-stack, AI-powered applications.

Since then, we’ve been working with the LangChain team on deeper integration of many tools across Cloudflare’s developer platform and are excited to share what we’ve been up to.

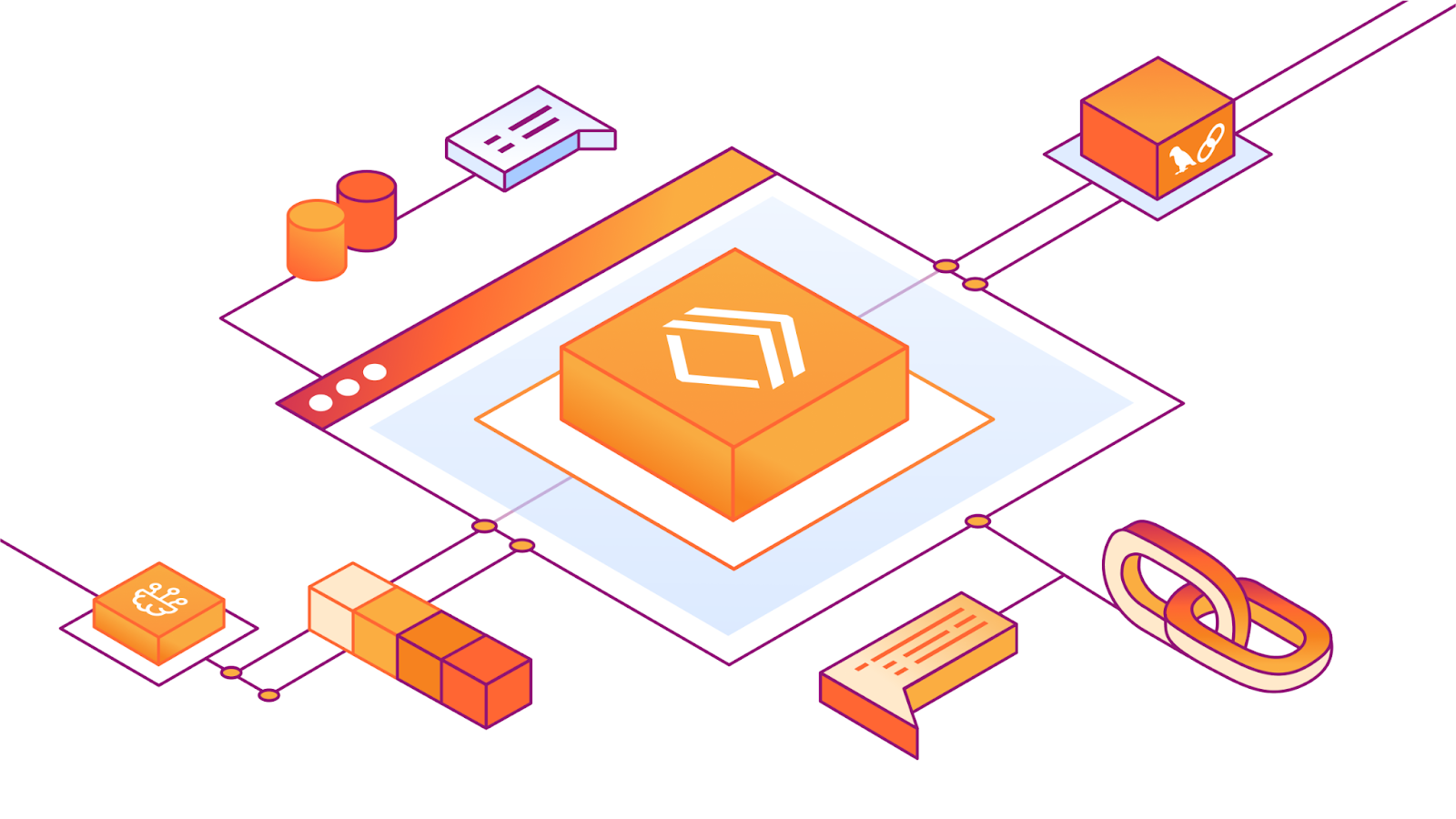

Today, we’re announcing five new key integrations with LangChain:

Workers AI Chat Models: This allows you to use Workers AI text generation to power your chat model within your LangChain.js application.

Workers AI Instruct Models: This allows you to use Workers AI models fine-tuned for instruction-following use cases, such as Mistral and CodeLlama, inside your LangChain.js application.

Text Embeddings Models: If you’re working with text embeddings, you can now use Workers AI text embeddings with LangChain.js.

Vectorize Vector Store: When working with a vector database and LangChain.js, you now have the option of using Vectorize, Cloudflare’s powerful vector database.

Cloudflare D1-Backed Chat Memory: For longer-term persistence across chat sessions, you can swap out LangChain’s default in-memory chatHistory that backs chat memory classes like BufferMemory for a Cloudflare D1 instance.

With the addition of these five Cloudflare AI tools to LangChain, developers have powerful new primitives to integrate into new and existing AI applications. With LangChain’s expressive tooling for mixing and matching AI tools and models, you can use Vectorize, Cloudflare AI’s text embedding and generation models, and Cloudflare D1 to build a fully featured AI application in just a few lines of code.

This is a full persistent chat app powered by an LLM in 10 lines of code–deployed to @Cloudflare Workers, powered by @LangChainAI and @Cloudflare D1.

You can even pass in a unique sessionId and have completely user/session-specific conversations 🤯 https://t.co/le9vbMZ7Mc pic.twitter.com/jngG3Z7NQ6

— Kristian Freeman (@kristianf_) September 20, 2023
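To make the idea of session-specific, persistent chat memory more concrete, here is a minimal sketch in plain TypeScript. It does not use the LangChain.js or D1 APIs: every name in it (`ChatHistory`, `InMemoryHistory`, `SessionStore`, `StoreBackedHistory`) is hypothetical, and the `Map`-based store only stands in for a database table keyed by session ID. A real D1-backed history would run asynchronous SQL queries instead.

```typescript
// Hypothetical types for illustration; these are not LangChain.js or D1 APIs.
interface ChatMessage {
  role: "human" | "ai";
  content: string;
}

// Minimal history contract: append a message, list all messages.
interface ChatHistory {
  addMessage(message: ChatMessage): void;
  getMessages(): ChatMessage[];
}

// In-memory history: messages disappear when this object is discarded.
class InMemoryHistory implements ChatHistory {
  private messages: ChatMessage[] = [];

  addMessage(message: ChatMessage): void {
    this.messages.push(message);
  }

  getMessages(): ChatMessage[] {
    return [...this.messages];
  }
}

// Stand-in for a database table: rows grouped by session ID.
class SessionStore {
  private rows = new Map<string, ChatMessage[]>();

  append(sessionId: string, message: ChatMessage): void {
    const existing = this.rows.get(sessionId) ?? [];
    this.rows.set(sessionId, [...existing, message]);
  }

  read(sessionId: string): ChatMessage[] {
    return [...(this.rows.get(sessionId) ?? [])];
  }
}

// History that delegates to the shared store, scoped to one session.
class StoreBackedHistory implements ChatHistory {
  private store: SessionStore;
  private sessionId: string;

  constructor(store: SessionStore, sessionId: string) {
    this.store = store;
    this.sessionId = sessionId;
  }

  addMessage(message: ChatMessage): void {
    this.store.append(this.sessionId, message);
  }

  getMessages(): ChatMessage[] {
    return this.store.read(this.sessionId);
  }
}

const store = new SessionStore();

// First request in Alice's session.
const alice = new StoreBackedHistory(store, "session-alice");
alice.addMessage({ role: "human", content: "Hi, I'm Alice." });
alice.addMessage({ role: "ai", content: "Hello Alice!" });

// A later request creates a fresh object for the same session ID
// and still sees the earlier messages; Bob's session stays empty.
const aliceLater = new StoreBackedHistory(store, "session-alice");
const bob = new StoreBackedHistory(store, "session-bob");

// The in-memory variant starts empty every time it is constructed.
const ephemeral = new InMemoryHistory();
```

Because both classes satisfy the same `ChatHistory` contract, code that consumes a history does not need to know which one it was given. That is the property that makes swapping the backing store a small change.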

Getting started with a Cloudflare + LangChain + Nuxt Multi-source Chatbot template

You can get started by using LangChain’s Cloudflare Chatbot template: https://github.com/langchain-ai/langchain-cloudflare-nuxt-template

This application shows how various pieces of Cloudflare Workers AI fit together and expands on the concept of retrieval-augmented generation (RAG) to build a conversational retrieval system that can route between multiple data sources, choosing the most relevant one based on the incoming question. This method helps cut down on distraction from off-topic documents getting pulled in by a vector store’s similarity search, which could occur if only a single database were used.
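The distraction problem can be illustrated with a toy similarity search. The sketch below is plain TypeScript, not the Vectorize API: the two-dimensional vectors are hand-written stand-ins for real embeddings, and the document ids and `topK` helper are made up for illustration. It compares a top-3 search over one mixed collection with the same search restricted to a single source.

```typescript
// Toy data: the vectors are hand-written stand-ins for real embeddings.
interface Doc {
  id: string;
  source: "cloudflare" | "ai";
  text: string;
  vector: number[];
}

// Cosine similarity between two vectors of equal length.
function cosine(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the ids of the k documents most similar to the query vector.
function topK(docs: Doc[], query: number[], k: number): string[] {
  return docs
    .map((doc) => ({ id: doc.id, score: cosine(doc.vector, query) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((hit) => hit.id);
}

const docs: Doc[] = [
  { id: "cf1", source: "cloudflare", text: "Workers run code at the edge", vector: [0.9, 0.1] },
  { id: "cf2", source: "cloudflare", text: "Pages hosts frontend apps", vector: [0.8, 0.3] },
  { id: "ai1", source: "ai", text: "Agents plan tasks with an LLM", vector: [0.2, 0.9] },
  { id: "ai2", source: "ai", text: "Agent memory uses vector stores", vector: [0.6, 0.7] },
];

// Pretend embedding of a question about Cloudflare.
const query = [1.0, 0.2];

// One mixed collection: the third hit comes from the AI source.
const mixedHits = topK(docs, query, 3);

// Search restricted to the Cloudflare source: only on-topic hits remain.
const scopedHits = topK(docs.filter((d) => d.source === "cloudflare"), query, 3);

console.log(mixedHits);  // [ 'cf1', 'cf2', 'ai2' ]
console.log(scopedHits); // [ 'cf1', 'cf2' ]
```

In the mixed search, `ai2` fills the third slot simply because it is the next-nearest vector, even though it is about a different topic. Keeping sources in separate indexes and picking one before searching avoids that.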

The base version runs entirely on the Cloudflare Workers AI stack with the Llama 2-7B model. It uses:

A chat variant of Llama 2-7B run on Cloudflare Workers AI

A Cloudflare Workers AI embeddings model

Two different Cloudflare Vectorize DBs (though you could add more!)

Cloudflare Pages for hosting

LangChain.js for orchestration

Nuxt + Vue for the frontend

The two default data sources are a PDF detailing some of Cloudflare's features and a blog post by Lilian Weng at OpenAI that talks about autonomous agents.

The bot will classify incoming questions as being about Cloudflare, AI, or neither, and draw on the corresponding data source for more targeted results. Everything is fully customizable: you can change the content of the ingested data, the models used, and all prompts!
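The classify-then-retrieve flow can be sketched roughly as follows. This is not the template's code: the names (`Topic`, `Retriever`, `classify`, `route`) are hypothetical, the keyword check stands in for the LLM-based classification step, the retrievers return fixed placeholder passages instead of querying Vectorize, and returning no context for "neither" is an assumption about how that case is handled.

```typescript
// Hypothetical names throughout; the template's real prompts and chains differ.
type Topic = "cloudflare" | "ai" | "neither";

// A retriever is any function from a question to supporting passages.
type Retriever = (question: string) => string[];

// Stand-in for the LLM classification step: a simple keyword check.
function classify(question: string): Topic {
  const q = question.toLowerCase();
  if (/\b(cloudflare|workers|pages|vectorize|d1)\b/.test(q)) return "cloudflare";
  if (/\b(agent|agents|llm|llms)\b/.test(q)) return "ai";
  return "neither";
}

// One retriever per data source; these return placeholder passages.
const retrievers: Record<Exclude<Topic, "neither">, Retriever> = {
  cloudflare: () => ["Passage from the Cloudflare features PDF"],
  ai: () => ["Passage from the autonomous agents blog post"],
};

// Classify the question, then pull context only from the matching source.
function route(question: string): { topic: Topic; context: string[] } {
  const topic = classify(question);
  // Assumption: questions about neither topic get no retrieved context.
  const context = topic === "neither" ? [] : retrievers[topic](question);
  return { topic, context };
}

console.log(route("What can I build with Cloudflare Workers?").topic); // cloudflare
console.log(route("How do autonomous agents plan tasks?").topic);      // ai
console.log(route("What should I cook tonight?").topic);               // neither
```

Adding a third data source in this shape would mean one more `Topic` value, one more branch in the classifier, and one more entry in `retrievers`.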

And if you have access to the LangSmith beta, the app also has tracing set up so that you can easily see and debug each step in the application.

We can’t wait to see what you build

We can't wait to see what you all build with LangChain and Cloudflare. Come tell us about it on Discord or on our community forums.