Historically, Cloudflare's core competency was operating an HTTP reverse proxy. We've spent significant effort optimizing traditional HTTP/1.1 and HTTP/2 servers running on top of TCP. Recently, though, we started operating large-scale stateful UDP services.

Stateful UDP is gaining popularity for a number of reasons:

— QUIC is a new transport protocol based on UDP, and it powers HTTP/3. We see its adoption accelerating.

— We operate WARP, our WireGuard-based tunneling service, which uses UDP under the hood.

— We have a lot of generic UDP traffic going through our Spectrum service.

Although UDP is simple in principle, there is a lot of domain knowledge needed to run things at scale. In this blog post we'll cover the basics: all you need to know about UDP servers to get started.

Connected vs unconnected

How do you "accept" connections on a UDP server? If you are using unconnected sockets, you generally don't.

But let's start with the basics. UDP sockets can be "connected" (or "established") or "unconnected". Connected sockets have a full 4-tuple associated with them {source ip, source port, destination ip, destination port}, while unconnected sockets have only a 2-tuple {bind ip, bind port}.

Traditionally, connected sockets were mostly used for outgoing flows, and unconnected sockets for inbound "server" side connections.

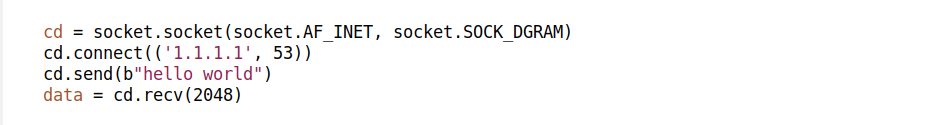

UDP client

As we'll learn today, these can be mixed. It is possible to use connected sockets for ingress handling, and unconnected sockets for egress. To illustrate the latter, consider these two snippets. They do the same thing: send a packet to the DNS resolver. The first snippet uses a connected socket:
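A minimal sketch of the connected variant. The helper name `query_connected`, the timeout, and the way the address family is guessed are illustrative assumptions rather than part of the original snippet:

```python
import socket

def query_connected(server, payload, timeout=2.0):
    """Send one datagram over a connected UDP socket and return the reply."""
    # Assumption: the host is a literal IP address; a colon means IPv6.
    family = socket.AF_INET6 if ":" in server[0] else socket.AF_INET
    with socket.socket(family, socket.SOCK_DGRAM) as sd:
        sd.settimeout(timeout)
        # connect() records the remote address on the socket. From now on
        # the kernel only delivers datagrams coming from that address.
        sd.connect(server)
        sd.send(payload)
        return sd.recv(2048)
```

For example, `query_connected(("1.1.1.1", 53), query)` would send a pre-built DNS query and return the raw response, assuming `query` already holds the encoded request bytes.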

The second uses an unconnected one:
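A comparable sketch for the unconnected variant. Again, `query_unconnected` is a hypothetical helper name, and the source check compares the address exactly as the kernel reports it, which assumes the caller passes the server IP in the same textual form:

```python
import socket

def query_unconnected(server, payload, timeout=2.0):
    """Send one datagram with sendto() and return the reply from `server`."""
    # Assumption: the host is a literal IP address; a colon means IPv6.
    family = socket.AF_INET6 if ":" in server[0] else socket.AF_INET
    with socket.socket(family, socket.SOCK_DGRAM) as sd:
        sd.settimeout(timeout)  # applies to each recvfrom() call
        sd.sendto(payload, server)
        while True:
            data, peer = sd.recvfrom(2048)
            # The socket is unconnected, so anybody can send to it.
            # Only accept a datagram that comes from the queried server.
            if peer[:2] == tuple(server[:2]):
                return data
```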

Which one is better? In the second case, when receiving, the programmer should verify the source IP of the packet. Otherwise, the program can get confused by random inbound internet junk, such as port scans. It is tempting to reuse the socket descriptor and query another DNS server afterwards, but this would be a bad idea, particularly when dealing with DNS. For security, DNS assumes the client source port is unpredictable and short-lived.

Generally speaking, for outbound traffic it's preferable to use connected UDP sockets.

Connected sockets can also avoid a route lookup on each packet thanks to a clever optimization: Linux can cache the route lookup result on the connection struct. Depending on the specifics of the setup, this might save some CPU cycles.

For completeness, it is possible to roll a new source port and reuse a socket descriptor with an obscure trick called "dissolving of the socket association". It can be done by calling connect() with an address whose family is AF_UNSPEC, but this is rather advanced Linux magic.

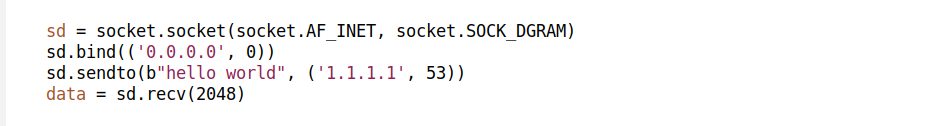

UDP server

Traditionally, on the server side UDP requires unconnected sockets. Using them requires a bit of finesse. To illustrate this, let's write a UDP echo server. In practice, you probably shouldn't write such a server, due to the risk of becoming a DoS reflection vector. Among other protections, like rate limiting, UDP services should always respond with a strictly smaller amount of data than was sent in the initial packet. But let's not digress. The naive UDP echo server might look like:
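A minimal sketch of such a naive loop. The function name `echo_loop` and the `max_packets` parameter are assumptions added so the loop can be stopped; the 2048-byte buffer matches the discussion that follows:

```python
import socket

def echo_loop(sd, max_packets=None):
    """Echo every datagram back to its sender.

    With max_packets=None the loop runs forever.
    """
    handled = 0
    while max_packets is None or handled < max_packets:
        buf, peer = sd.recvfrom(2048)
        # Note: no source address is chosen here, the kernel picks one.
        sd.sendto(buf, peer)
        handled += 1
```

It would be driven by a plain `socket.socket(socket.AF_INET6, socket.SOCK_DGRAM)` bound to the server address, for example port 1234 as in the captures in this post.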

This code raises some questions:

— Received packets can be longer than 2048 bytes. This can happen over loopback, when using jumbo frames, or with the help of IP fragmentation.

— It's totally possible for the received packet to have an empty payload.

— What about inbound ICMP errors?

These problems are specific to UDP; they don't happen in the TCP world. TCP can transparently deal with MTU / fragmentation and ICMP errors. Depending on the specific protocol, a UDP service might need to be more complex and take extra care with such corner cases.

Sourcing packets from a wildcard socket

There is a bigger problem with this code. It only works correctly when binding to a specific IP address, like ::1 or 127.0.0.1. It won't always work when we bind to a wildcard. The issue lies in the sendto() line: we didn't explicitly set the outbound IP address! Linux doesn't know where we'd like to source the packet from, so it will choose a default egress IP address. That might not be the IP the client sent its packet to. For example, let's say we added the ::2 address to the loopback interface and sent a packet to it, with the source IP set to a valid ::1:

    marek@mrprec:~$ sudo tcpdump -ni lo port 1234 -t
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on lo, link-type EN10MB (Ethernet), capture size 262144 bytes
    IP6 ::1.41879 > ::2.1234: UDP, length 2
    IP6 ::1.1234 > ::1.41879: UDP, length 2

Here we can see the packet correctly flying from ::1 to ::2, to our server. But when the server responds, it sources the response from the ::1 IP, which in this case is wrong.

On the server side, when binding to a wildcard:

— we might receive packets destined to a number of IP addresses

— we must be very careful when responding and use the appropriate source IP address

The BSD Sockets API doesn't make it easy to understand where the received packet was destined to. On Linux and BSD it is possible to request useful CMSG metadata with IP_RECVPKTINFO and IPV6_RECVPKTINFO (on Linux the IPv4 socket option is named IP_PKTINFO).

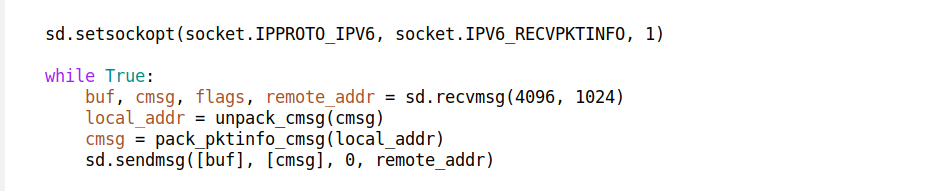

An improved server loop might look like:
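A minimal sketch of such a loop for an IPv6 wildcard socket on Linux. The helper names `open_wildcard_socket` and `echo_with_pktinfo`, and the `max_packets` parameter, are illustrative assumptions. The idea is to hand the received IPV6_PKTINFO ancillary data back to the kernel on send, so the reply is sourced from the address the client originally targeted:

```python
import socket

PKTINFO_LEN = 20  # 16-byte IPv6 address + 4-byte interface index

def open_wildcard_socket(port):
    sd = socket.socket(socket.AF_INET6, socket.SOCK_DGRAM)
    # Ask the kernel to attach IPV6_PKTINFO to every received datagram.
    sd.setsockopt(socket.IPPROTO_IPV6, socket.IPV6_RECVPKTINFO, 1)
    sd.bind(("::", port))
    return sd

def echo_with_pktinfo(sd, max_packets=None):
    handled = 0
    while max_packets is None or handled < max_packets:
        buf, ancdata, flags, peer = sd.recvmsg(
            2048, socket.CMSG_SPACE(PKTINFO_LEN))
        # Keep only the IPV6_PKTINFO message: it holds the destination
        # address of the request, which becomes the source of the reply.
        pktinfo = [(level, kind, data) for level, kind, data in ancdata
                   if level == socket.IPPROTO_IPV6
                   and kind == socket.IPV6_PKTINFO]
        sd.sendmsg([buf], pktinfo, 0, peer)
        handled += 1
```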

The recvmsg and sendmsg syscalls, as opposed to recvfrom / sendto, allow the programmer to request and set extra CMSG metadata, which is very handy when dealing with UDP.

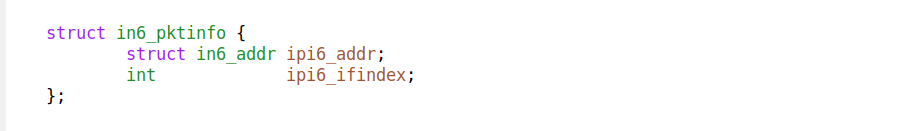

The IPV6_PKTINFO CMSG contains this data structure:
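On Linux the payload is a `struct in6_pktinfo`: a 16-byte `ipi6_addr` followed by a 32-bit `ipi6_ifindex`. A small sketch of decoding it in Python, where `parse_in6_pktinfo` is a hypothetical helper name:

```python
import socket
import struct

def parse_in6_pktinfo(cmsg_data):
    """Decode struct in6_pktinfo into (destination_ip, interface_index)."""
    # Layout: struct in6_addr ipi6_addr (16 bytes), then ipi6_ifindex
    # (32-bit integer in native byte order).
    addr_raw, ifindex = struct.unpack("@16sI", cmsg_data[:20])
    return socket.inet_ntop(socket.AF_INET6, addr_raw), ifindex
```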

Here we can find the IP address and interface number of the packet target. Notice that there's no place for a port number.

Graceful server restart

Many traditional UDP protocols, like DNS, are request-response based. Since there is no state associated with a higher level "connection", the server can restart, to upgrade or change configuration, without any problems. Ideally, sockets should be managed with the usual systemd socket activation to avoid the short time window where the socket is down.

Modern protocols are often connection-based. For such servers, on restart, it's beneficial to keep the old connections directed to the old server process, while the new server instance is available for handling the new connections. The old connections will eventually die off, and the old server process will be able to terminate. This is a common and easy practice in the TCP world, where each connection has its own file descriptor. The old server process stops accept()-ing new connections and just waits for the old connections to gradually go away. NGINX has good documentation on the subject.

Sadly, in UDP you can't accept() new connections. Doing graceful server restarts for UDP is surprisingly hard.

Established-over-unconnected technique

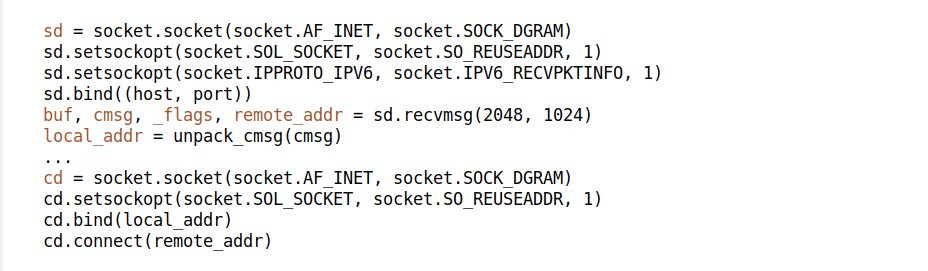

For some services we are using a technique which we call "established-over-unconnected". This comes from a realization that on Linux it's possible to create a connected socket *over* an unconnected one. Consider this code:
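A minimal sketch of the idea, assuming Linux semantics for SO_REUSEADDR on UDP sockets. The helper names `open_listener` and `accept_flow` are hypothetical, as is the default bind address:

```python
import socket

def open_listener(bind_addr=("::", 1234), family=socket.AF_INET6):
    """Create the unconnected server socket."""
    sd = socket.socket(family, socket.SOCK_DGRAM)
    # SO_REUSEADDR on both sockets lets them share the local address.
    sd.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sd.bind(bind_addr)
    return sd

def accept_flow(listener):
    """Wait for the first packet of a flow, then create a connected
    socket for it. Returns (connected_socket, first_packet, peer)."""
    buf, peer = listener.recvfrom(2048)
    cd = socket.socket(listener.family, socket.SOCK_DGRAM)
    cd.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    # Same local address and port as the unconnected socket. With a
    # wildcard listener, connect() below picks the local IP via routing.
    cd.bind(listener.getsockname())
    cd.connect(peer)
    return cd, buf, peer
```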

Does this look hacky? Well, it should. What we do here is:

— We start a UDP unconnected socket.

— We wait for a client to come in.

— As soon as we receive the first packet from the client, we immediately create a new fully connected socket, *over* the unconnected socket! It shares the same local port and local IP.

This is how it might look in ss:

    marek@mrprec:~$ ss -panu sport = :1234 or dport = :1234 | cat
    State   Recv-Q  Send-Q  Local Address:Port  Peer Address:Port  Process
    ESTAB   0       0       [::1]:1234          [::1]:44592        python3
    UNCONN  0       0       *:1234              *:*                python3
    ESTAB   0       0       [::1]:44592         [::1]:1234         nc

Here you can see the two sockets managed in our python test server. Notice the established socket is sharing the unconnected socket port.

This trick is basically reproducing the `accept()` behaviour in UDP, where each ingress connection gets its own dedicated socket descriptor.

While this trick is nice, it's not without drawbacks: it's racy in two places. First, the client may send more than one packet to the unconnected socket before the connected socket is created. The application code should work around this: if a packet received from the server socket belongs to an already existing connected flow, it should be handed over to the right place. Second, during the creation of the connected socket, in the short window after bind() and before connect(), it might receive unexpected packets that belong to the unconnected socket. We don't want these packets there, so it is necessary to filter by source IP and port when receiving early packets on the connected socket.
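Both workarounds can be sketched in a few lines. The helper names and the `flows` dictionary, which maps a peer `(ip, port)` to a list acting as that flow's inbox, are hypothetical structures chosen for illustration:

```python
import socket

def route_listener_packet(listener, flows):
    """First race: a known peer may still reach the unconnected socket.

    Returns (buf, peer) when the packet starts a new flow, or None when
    it was handed over to an existing flow's inbox.
    """
    buf, peer = listener.recvfrom(2048)
    inbox = flows.get(peer[:2])
    if inbox is not None:
        inbox.append(buf)
        return None
    return buf, peer

def recv_from_flow(cd, expected_peer):
    """Second race: skip packets that were queued on the connected
    socket but came from a different source."""
    while True:
        buf, peer = cd.recvfrom(2048)
        if peer[:2] == tuple(expected_peer[:2]):
            return buf
```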

Is this approach worth the extra complexity? It depends on the use case. For a relatively small number of long-lived flows, it might be ok. For a high number of short-lived flows (especially DNS or NTP) it's overkill.

Keeping old flows stable during service restarts is particularly hard in UDP. The established-over-unconnected technique is just one of the simpler ways of handling it. We'll leave another technique, based on SO_REUSEPORT eBPF, for a future blog post.

Summary

In this blog post we started by highlighting connected and unconnected UDP sockets. Then we discussed why binding UDP servers to a wildcard is hard, and how the IP_PKTINFO CMSG can help to solve it. We discussed the UDP graceful restart problem, and hinted at an established-over-unconnected technique.

| Socket type | Created with | Appropriate syscalls |
|---|---|---|
| established | connect() | recv()/send() |
| established | bind() + connect() | recvfrom()/send(), watch out for the race after bind(), verify source of the packet |
| unconnected | bind(specific IP) | recvfrom()/sendto() |
| unconnected | bind(wildcard) | recvmsg()/sendmsg() with IP_PKTINFO CMSG |

Stay tuned, in future blog posts we might go even deeper into the curious world of production UDP servers.