quiche, Cloudflare's IETF QUIC implementation has been running CUBIC congestion control for a while in our production environment as mentioned in Comparing HTTP/3 vs. HTTP/2 Performance. Recently we also added HyStart++ to the congestion control module for further improvements.

In this post, we will talk about QUIC congestion control and loss recovery briefly and CUBIC and HyStart++ in the quiche congestion control module. We will also discuss lab test results and how to visualize those using qlog which was recently added to the quiche library as well.

QUIC Congestion Control and Loss Recovery

In the network transport area, congestion control is how to decide how much data the connection can send into the network. It has an important role in networking so as not to overrun the link but also at the same time it needs to play nice with other connections in the same network to ensure that the overall network, the Internet, doesn’t collapse. Basically congestion control is trying to detect the current capacity of the link and tune itself in real time and it’s one of the core algorithms for running the Internet.

QUIC congestion control has been written based on many years of TCP experience, so it is little surprise that the two have mechanisms that bear resemblance. It’s based on the CWND (congestion window, the limit of how many bytes you can send to the network) and the SSTHRESH (slow start threshold, sets a limit when slow start will stop). Congestion control mechanisms can have complicated edge cases and can be hard to tune. Since QUIC is a new transport protocol that people are implementing from scratch, the current draft recommends Reno as a relatively simple mechanism to get people started. However, it has known limitations and so QUIC is designed to have pluggable congestion control; it’s up to implementers to adopt any more advanced ones of their choosing.

Since Reno became the standard for TCP congestion control, many congestion control algorithms have been proposed by academia and industry. Largely there are two categories: loss-based congestion control such as Reno and CUBIC, where the congestion control responds to a packet loss event, and delay-based congestion control, such as Vegas and BBR , which the algorithm tries to find a balance between the bandwidth and RTT increase and tune the packet send rate.

You can port TCP based congestion control algorithms to QUIC without much change by implementing a few hooks. quiche provides a modular API to add a new congestion control module easily.

Loss detection is how to detect packet loss at the sender side. It’s usually separated from the congestion control algorithm but helps the congestion control to quickly respond to the congestion. Packet loss can be a result of the congestion on the link, but the link layer may also drop a packet without congestion due to the characteristics of the physical layer, such as on a WiFi or mobile network.

Traditionally TCP uses 3 DUP ACKs for ACK based detection, but delay-based loss detection such as RACK has also been used over the years. QUIC combines the lesson from TCP into two categories . One is based on the packet threshold (similar to 3 DUP ACK detection) and the other is based on a time threshold (similar to RACK). QUIC also has ACK Ranges similar to TCP SACK to provide a status of the received packets but ACK Ranges can keep a longer list of received packets in the ACK frame than TCP SACK. This simplifies the implementation overall and helps provide quick recovery when there is multiple loss.

Reno

Reno (often referred as NewReno) is a standard congestion control for TCP and QUIC .

Reno is easy to understand and doesn't need additional memory to store the state so can be implemented in low spec hardware too. However, its slow start can be very aggressive because it keeps increasing the CWND quickly until it sees congestion. In other words, it doesn’t stop until it sees the packet loss.

Note that there are multiple states for Reno; Reno starts from "slow start" mode which increases the CWND very aggressively, roughly 2x for every RTT until the congestion is detected or CWND > SSTHRESH. When packet loss is detected, it enters into the “recovery” mode until packet loss is recovered.

When it exits from recovery (no lost ranges) and CWND > SSTHRESH, it enters into the "congestion avoidance" mode where the CWND grows slowly (roughly a full packet per RTT) and tries to converge on a stable CWND. As a result you will see a “sawtooth” pattern when you make a graph of the CWND over time.

Here is an example of Reno congestion control CWND graph. See the “Congestion Window” line.

CUBIC

CUBIC was announced in 2008 and became the default congestion control in the Linux kernel. Currently it's defined in RFC8312 and implemented in many OS including Linux, BSD and Windows. quiche's CUBIC implementation follows RFC8312 with a fix made by Google in the Linux kernel .

What makes the difference from Reno is during congestion avoidance its CWND growth is based on a cubic function as follows:

Wmax is the value of CWND when the congestion is detected. Then it will reduce the CWND by 30% and then the CWND starts to grow again using a cubic function as in the graph, approaching Wmax aggressively in the beginning in the first half but slowly converging to Wmax later. This makes sure that CWND growth approaches the previous point carefully and once we pass Wmax, it starts to grow aggressively again after some time to find a new CWND (this is called "Max Probing").

Also it has a "TCP-friendly" (actually a Reno-friendly) mode to make sure CWND growth is always bigger than Reno. When the congestion event happens, CUBIC reduces its CWND by 30%, where Reno cuts down CWND by 50%. This makes CUBIC a little more aggressive on packet loss.

Note that the original CUBIC only defines how to update the CWND during congestion avoidance. Slow start mode is exactly the same as Reno.

HyStart++

The authors of CUBIC made a separate effort to improve slow start because CUBIC only changed the way the CWND grows during congestion avoidance. They came up with the idea of HyStart .

HyStart is based on two ideas and basically changes how the CWND is updated during slow start:

- RTT delay samples: when the RTT is increased during slow start and over the threshold, it exits slow start early and enters into congestion avoidance.

- ACK train: When ACK inter-arrival time gets higher and over the threshold, it exits slow start early and enters into congestion avoidance.

However in the real world, ACK train may not be very useful because of ACK compression (merging multiple ACKs into one). Also RTT delay may not work well when the network is unstable.

To improve such situations there is a new IETF draft proposed by Microsoft engineers named HyStart++ . HyStart++ is included in the Windows 10 TCP stack with CUBIC.

It's a little different from original HyStart:

- No ACK Train, only RTT sampling.

- Add a LSS (Limited Slow Start) phase after exiting slow start. LSS grows the CWND faster than congestion avoidance but slower than Reno slow start. Instead of going into congestion avoidance directly, slow start exits to LSS and LSS exits to congestion avoidance when packet loss happens.

- Simpler implementation.

In quiche, HyStart++ is turned on by default for both Reno and CUBIC congestion control and can be configured via API.

Lab Test

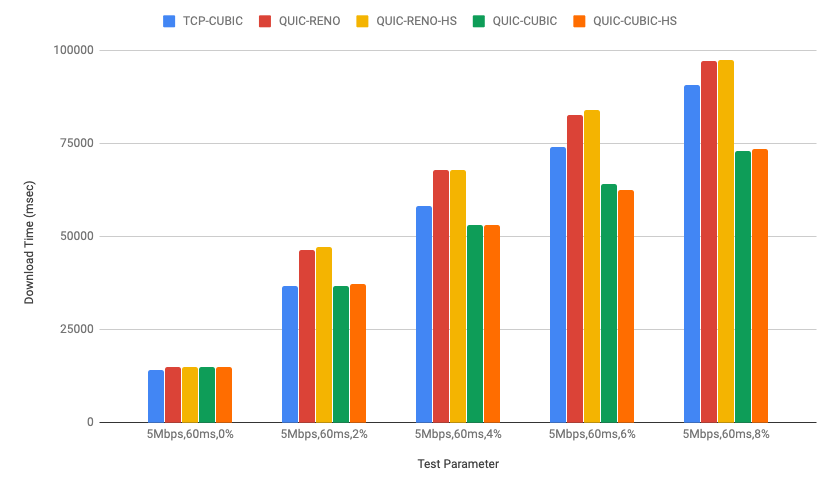

Here is a test result using the test lab . The test condition is as follows:

- 5Mbps bandwidth, 60ms RTT with a different packet loss from 0% to 8%

- Measure download time of 8MB file

- NGINX 1.16.1 server with the HTTP3 patch

- TCP: CUBIC in Linux kernel 4.14

- QUIC: Cloudflare quiche

- Download 20 times and take a median download time

I run the test with the following combination:

- TCP CUBIC (TCP-CUBIC)

- QUIC Reno (QUIC-RENO)

- QUIC Reno with Hystart++ (QUIC-RENO-HS)

- QUIC CUBIC (QUIC-CUBIC)

- QUIC CUBIC with Hystart++ (QUIC-CUBIC-HS)

Overall Test Result

Here is a chart of overall test results:

In these tests, TCP-CUBIC (blue bars) is the baseline to which we compare the performance of QUIC congestion control variants. We include QUIC-RENO (red and yellow bars) because that is the default QUIC baseline. Reno is simpler so we expect it to perform worse than TCP-CUBIC. QUIC-CUBIC (green and orange bars) should perform the same or better than TCP-CUBIC.

You can see with 0% packet loss TCP and QUIC are almost doing the same (but QUIC is slightly slower). As packet loss increases QUIC CUBIC performs better than TCP CUBIC. QUIC loss recovery looks to work well, which is great news for real-world networks that do encounter loss.

With HyStart++, overall performance doesn’t change but that is to be expected, because the main goal of HyStart++ is to prevent overshooting the network. We will see that in the next section.

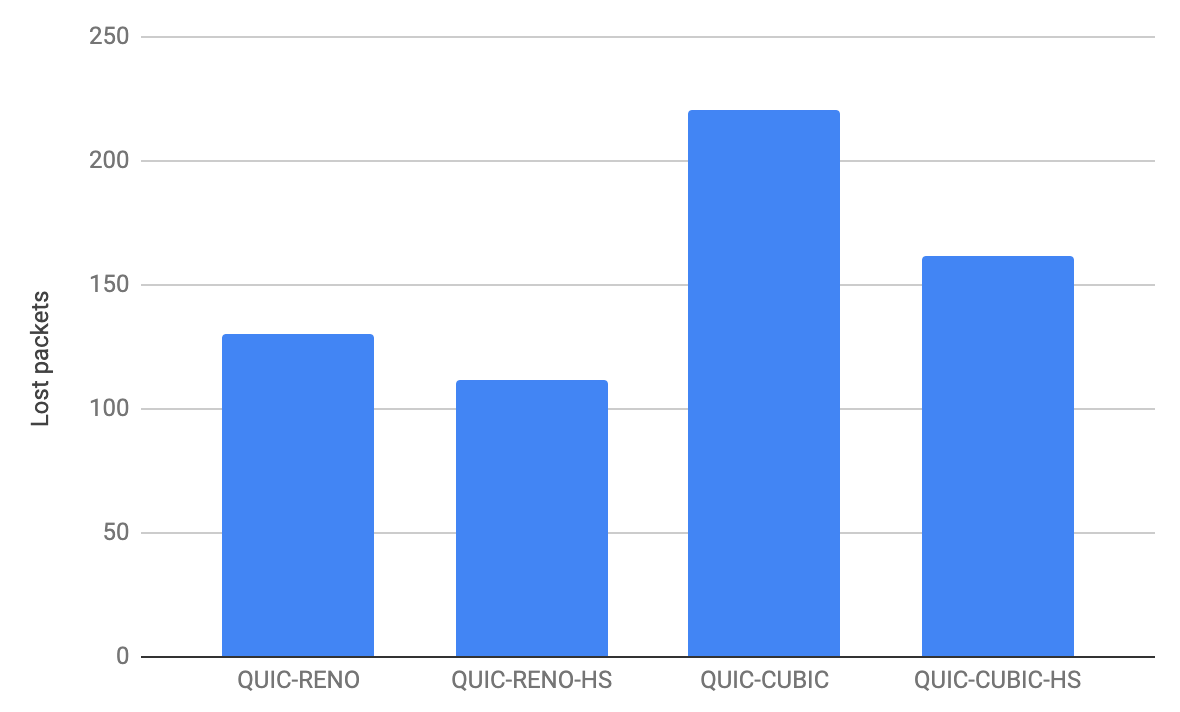

The impact of HyStart++

HyStart++ may not improve the download time but it will reduce packet loss while maintaining the same performance without it. Since slow start will exit to congestion avoidance when packet loss is detected, we focus on 0% packet loss where only network congestion creates packet loss.

Packet Loss

For each test, the number of detected packets lost (not the retransmit count) is shown in the following chart. The lost packets number is the average of 20 runs for each test.

As shown above, you can see that HyStart++ reduces a lot of packet loss.

Note that compared with Reno, CUBIC can create more packet loss in general. This is because the CUBIC CWND can grow faster than Reno during congestion avoidance and also reduces the CWND less (30%) than Reno (50%) at the congestion event.

Visualization using qlog and qvis

qvis is a visualization tool based on qlog . Since quiche has implemented qlog support , we can take qlogs from a QUIC connection and use the qvis tool to visualize connection stats. This is a very useful tool for protocol development. We already used qvis for the Reno graph but let’s see a few more examples to understand how HyStart++ works.

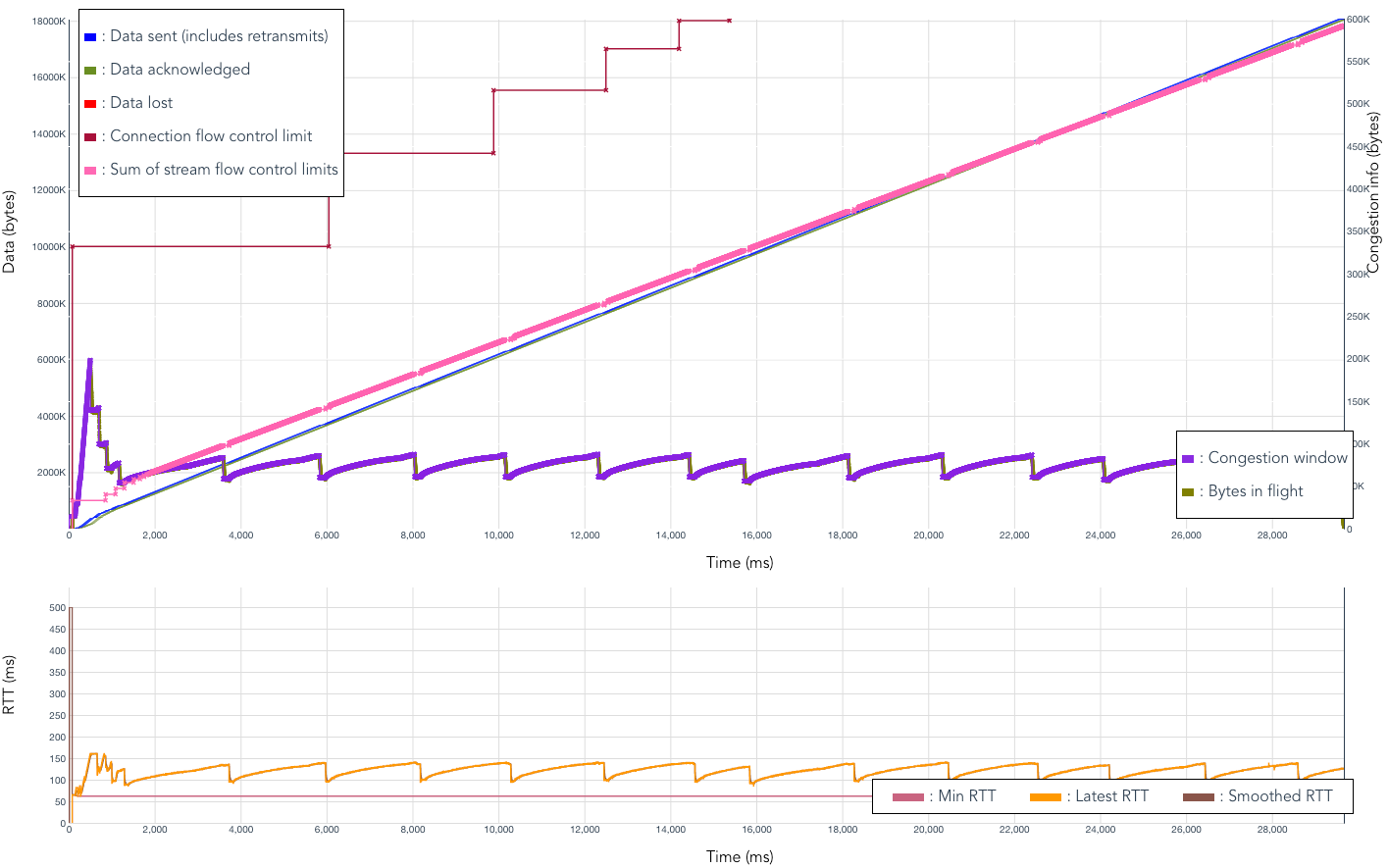

CUBIC without HyStart++

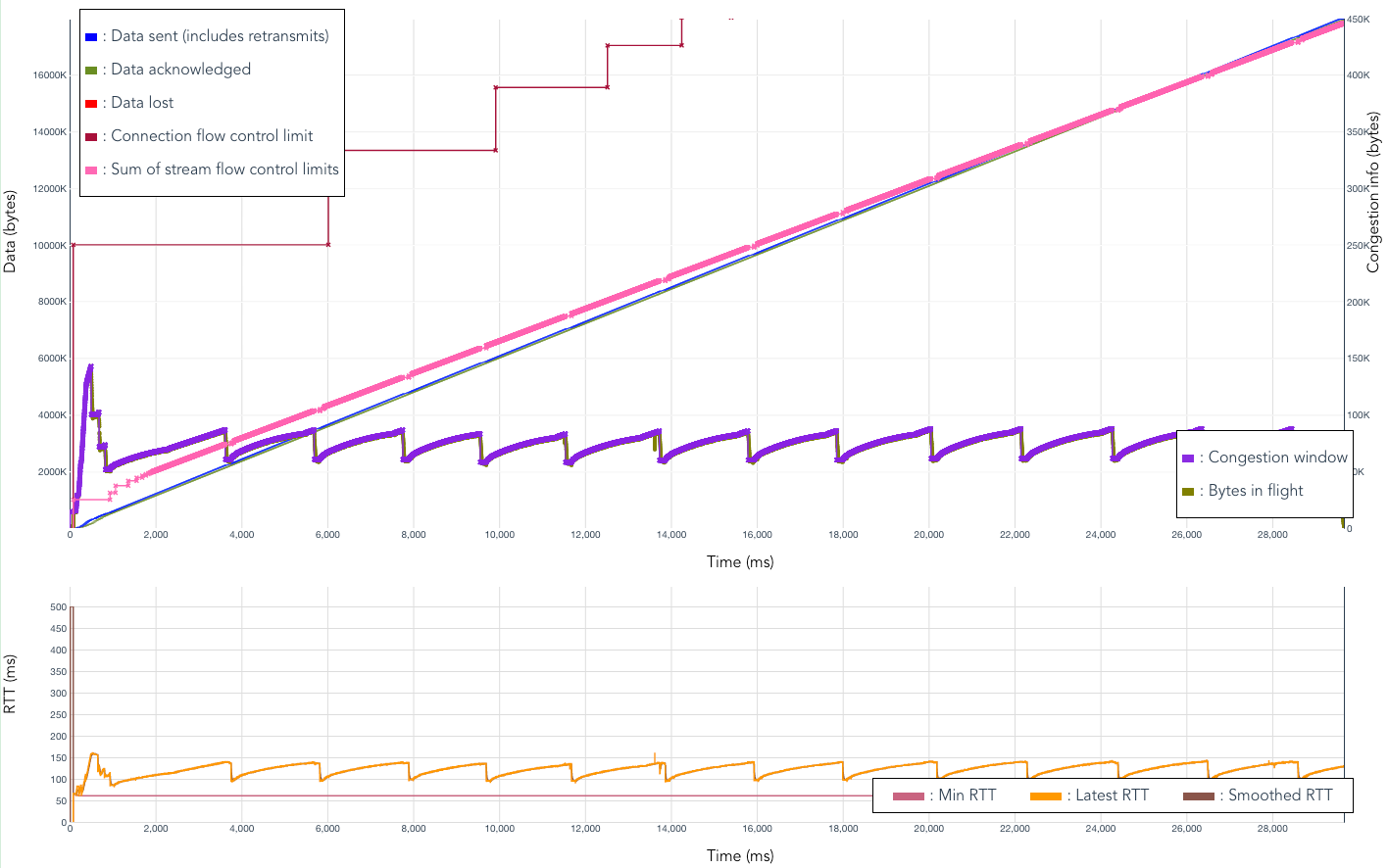

Here is a qvis congestion chart for a 16MB transfer in the same lab test conditions, with 0% packet loss. You can see a high peak of CWND in the beginning due to slow start. After some time, it starts to show the CUBIC window growth pattern (concave function).

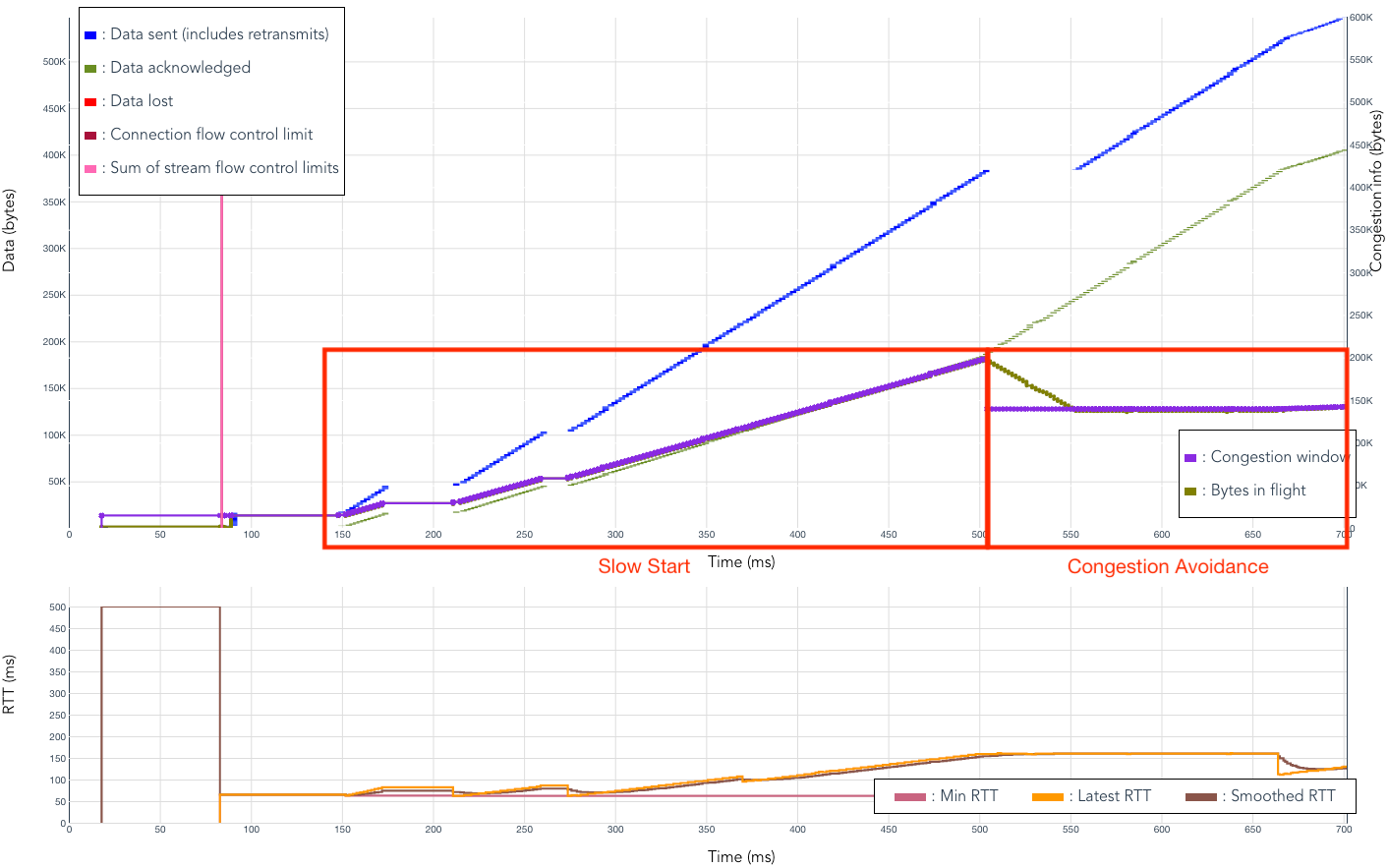

When we zoom into the slow start section (the first 0.7 seconds), we can see there is a linear increase of CWND during slow start. This continues until we see a packet lost around 500ms and enters into congestion avoidance after recovery, as you can see in the following chart:

CUBIC with HyStart++

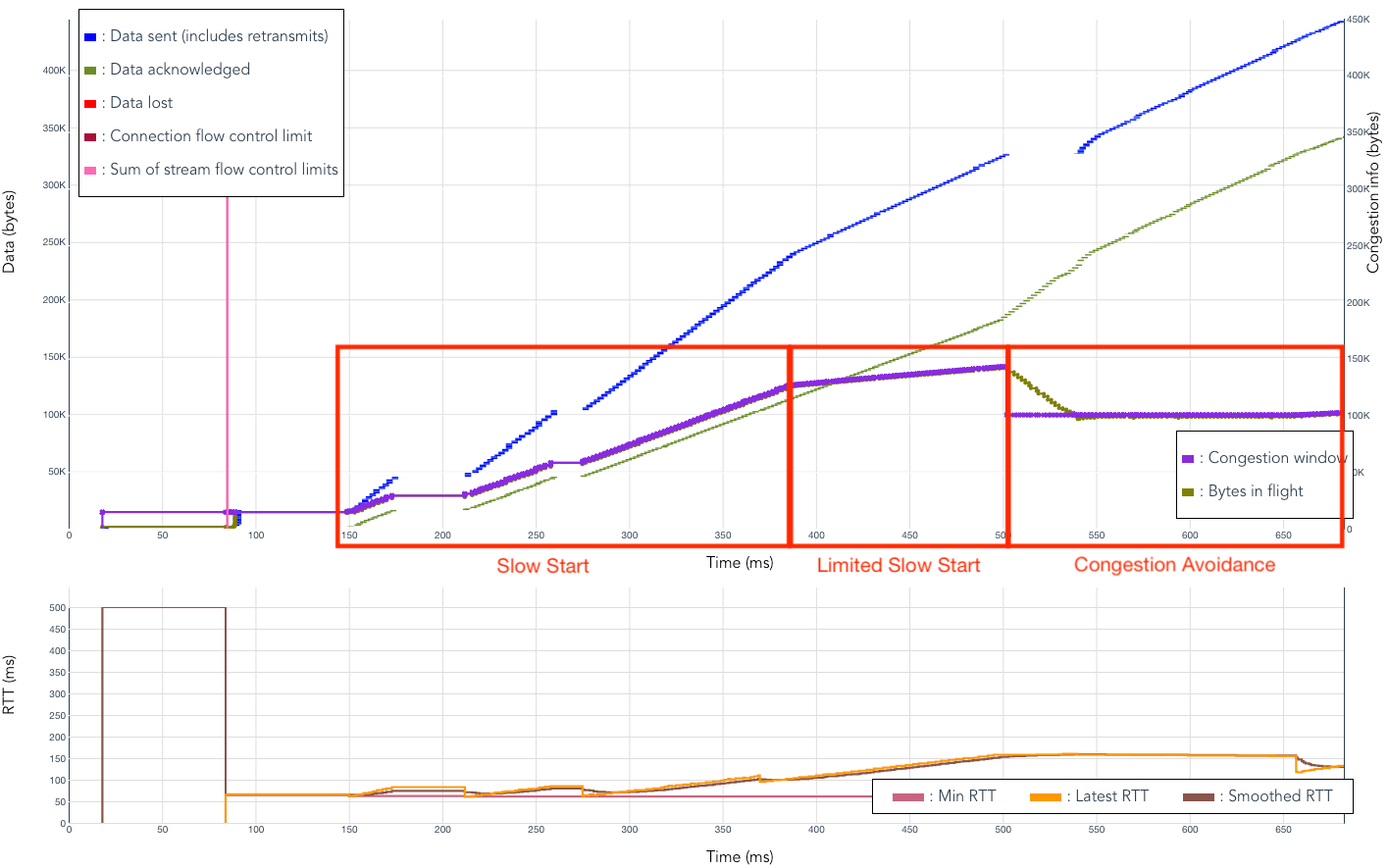

Let’s see the same graph when HyStart++ is enabled. You can see the slow start peak is smaller than without HyStart++, which will lead to less overshooting and packet loss:

When we zoom in the slow start part again, now we can see that the slow start exits to Limited Slow Start (LSS) around 390ms and exit to congestion avoidance at the congestion event around 500ms.

As a result you can see the slope is less steep until congestion is detected. It will lead to less packet loss due to less overshooting the network and faster convergence to a stable CWND.

Conclusions and Future Tasks

The QUIC draft spec already has integrated a lot of experience from TCP congestion control and loss recovery. It recommends the simple Reno mechanism as a means to get people started implementing the protocol but is under no illusion that there are better performing ones out there. So QUIC is designed to be pluggable in order for it to adopt mechanisms that are being deployed in state-of-the-art TCP implementations.

CUBIC and HyStart++ are known implementations in the TCP world and give better performance (faster download and less packet loss) than Reno. We've made quiche pluggable and have added CUBIC and HyStart++ support. Our lab testing shows that QUIC is a clear performance winner in lossy network conditions, which is the very thing it is designed for.

In the future, we also plan to work on advanced features in quiche, such as packet pacing, advanced recovery and BBR congestion control for better QUIC performance. Using quiche you can switch among multiple congestion control algorithms using the config API at the connection level, so you can play with it and choose the best one depending on your need. qlog endpoint logging can be visualized to provide high accuracy insight into how QUIC is behaving, greatly helping understanding and development.

CUBIC and HyStart++ code is available in the quiche primary branch today. Please try it!