We use nginx throughout our network for front-line web serving, proxying and traffic filtering. In some cases, we've augmented the core C code of nginx with our own modules, but recently we've made a major move to using Lua in conjunction with nginx.

One project that's now almost entirely written in Lua is the new CloudFlare WAF that we blogged about the other day.

The Lua WAF uses the nginx Lua module to embed Lua code and execute it as part of nginx's normal request-processing phases. The entire execution of the [WAF](https://www.cloudflare.com/learning/ddos/glossary/web-application-firewall-waf/) is actually handled by the following nginx configuration:

    location / {
        set $backend_waf "WAF_CORE";
        default_type 'text/plain';

        access_by_lua '
            local waf = require "waf"
            waf.execute()
        ';
    }

The access_by_lua directive tells nginx to execute the Lua code shown as an access phase handler. Then the Lua code in the waf module determines whether the request should be blocked or passed to the origin for processing. The waf module terminates by calling ngx.exit() with either a 200 (which causes nginx to continue processing the request) or a 403 (to block the request).
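As a rough illustration of the shape such a module could take, here is a minimal sketch. It is not the real waf module: the `is_attack` check is hypothetical, and a small stub stands in for the `ngx` API so the code runs outside nginx. The exit codes simply follow the description above.

```lua
-- Stub of the few ngx fields used below, so the sketch runs outside nginx.
-- Under the nginx Lua module the real global `ngx` is used instead.
local ngx = ngx or {
  HTTP_OK = 200,
  HTTP_FORBIDDEN = 403,
  var = { http_user_agent = "DataStore/1.0", request_method = "GET" },
  exit = function(status) return status end,
}

local waf = {}

-- Hypothetical check standing in for the compiled rules.
local function is_attack(user_agent, method)
  return method == "GET" and user_agent:sub(1, 10) == "DataStore/"
end

function waf.execute()
  local user_agent = ngx.var.http_user_agent or ""
  if is_attack(user_agent, ngx.var.request_method) then
    return ngx.exit(ngx.HTTP_FORBIDDEN) -- block the request
  end
  return ngx.exit(ngx.HTTP_OK)          -- allow, as described above
end

-- A real module file would end with `return waf` so that require "waf" works.
```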

All the real WAF work is done by the waf module which is written in Lua.

To implement the WAF we wanted to be able to read existing WAF configurations written for the popular mod_security open source WAF and support our own simplified WAF language. The mod_security language has to work inside Apache configuration files, which makes it somewhat hard to read, but there is a large body of rules written using it (such as the popular OWASP ruleset) that we wanted to be able to run natively.

Prior to writing the new WAF we had actually been running Apache alongside nginx just for mod_security, but the combination was slow, cumbersome, and not scalable with CloudFlare's growing business. We were already working with compromises just to keep it running.

A rule written in mod_security's native language might look like the following (this is a slightly obfuscated version of a real custom rule we put in place). The first block of code shows the mod_security version, the second the equivalent in CloudFlare's own rule language.

    SecRule REQUEST_HEADERS:User-Agent "@beginsWith DataStore/" \
        "id:100000,phase:0,t:none,deny,chain,msg:'DataStore Attack'"
    SecRule REQUEST_METHOD "@streq GET" "chain"
    SecRule REQUEST_URI "\/\?-?\d+=-?\d+" ""

    rule 100000 DataStore Attack
      REQUEST_HEADERS:User-Agent has-prefix DataStore/ and
      REQUEST_METHOD is GET and
      REQUEST_URI matches /?-?\d+=-?\d+
      deny

We'll be opening up the ability to write custom rules in either mod_security or CloudFlare style as the WAF rolls out more widely. Most importantly, the CloudFlare WAF language is not Lua, despite the fact that the WAF is implemented in Lua.

In fact, the WAF operates by first taking rules written in either mod_security or CloudFlare style and converting them to a common JSON format that encodes the rule and adds additional information used by the WAF UI (such as whether the rule can be disabled or not).

The JSON format is then compiled into a Lua program that is executed by the WAF. The compilation step allows us to support different input languages (like the two above) and perform optimization so that the WAF executes quickly.
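To make the compile step concrete, here is a minimal sketch of turning an intermediate rule into a loadable Lua function. Everything in it is an assumption made for illustration: the field names of `rule` (a Lua table standing in for the decoded JSON), the `emit` table, and the shape of the generated code are hypothetical and much simpler than what the real compiler produces.

```lua
-- Hypothetical intermediate form: a Lua table standing in for the decoded JSON.
local rule = {
  id = "100000",
  action = "deny",
  clauses = {
    { var = "user_agent", op = "begins", arg = "DataStore/" },
    { var = "method",     op = "eq",     arg = "GET" },
  },
}

-- One code generator per operator; each returns a Lua expression as a string.
local emit = {
  begins = function(var, arg)
    return string.format("req.%s:sub(1, %d) == %q", var, #arg, arg)
  end,
  eq = function(var, arg)
    return string.format("req.%s == %q", var, arg)
  end,
}

-- Compile a rule into Lua source, then load that source into a function.
local function compile(r)
  local conds = {}
  for i, clause in ipairs(r.clauses) do
    conds[i] = emit[clause.op](clause.var, clause.arg)
  end
  local src = string.format(
    "return function(req)\n  if %s then\n    return %q, %q\n  end\nend",
    table.concat(conds, " and "), r.action, r.id)
  local loader = loadstring or load -- loadstring in Lua 5.1/LuaJIT, load in 5.2+
  local chunk = assert(loader(src))
  return chunk(), src
end

local check = compile(rule)
```

Calling `check` with a table such as `{ user_agent = "DataStore/1.0", method = "GET" }` returns the action and rule id when every clause matches, and nothing otherwise. Because each input language only has to produce the intermediate form, the code generator is written once.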

For example, the compiler performs tasks such as:

- Clause reordering so that rules can be quickly skipped if a sub-clause doesn't match

- Regular expression optimization and simplification

- Operator replacement so that fast operators (such as simple string matches) are used where possible

- Providing hints to the WAF runtime about whether macro expansion is needed

- Global optimizations such as recognizing repeated use of the same strings or variables and ensuring that they are computed only once

- Lua optimizations such as the use of local references to global functions
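Two of the optimizations listed above, operator replacement and clause reordering, can be sketched in a few lines. This is only an illustration: the clause format, the `cost` table and its values are hypothetical, and reordering like this is only safe when the clauses are joined by `and` and have no side effects.

```lua
-- Hypothetical relative costs per operator; cheaper operators should run first.
local cost = { eq = 1, begins = 1, contains = 2, regex = 10 }

-- Operator replacement: a regex with no metacharacters is just a substring test.
local function simplify(clause)
  if clause.op == "regex"
     and not clause.arg:find("[%^%$%(%)%.%[%]%*%+%-%?\\|{}]") then
    return { var = clause.var, op = "contains", arg = clause.arg }
  end
  return clause
end

-- Clause reordering: cheapest clauses first, so a rule that cannot match
-- is skipped before any expensive clause is evaluated.
local function optimize(clauses)
  local out = {}
  for i, clause in ipairs(clauses) do
    local s = simplify(clause)
    out[i] = { var = s.var, op = s.op, arg = s.arg, order = i }
  end
  table.sort(out, function(a, b)
    if cost[a.op] ~= cost[b.op] then
      return cost[a.op] < cost[b.op]
    end
    return a.order < b.order -- keep the original order among equal costs
  end)
  return out
end
```

Given a regex clause, a second "regex" clause that is really a plain string, and a method comparison, `optimize` moves the method comparison to the front, turns the plain string into a `contains` test, and leaves the true regular expression for last.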

The rule above ends up as the following Lua code:

    if waf_begins(waf, v3_6, '3_6', t3_1, '3_1', 'DataStore/', false) then
      waf.vars['RULE']['ID'] = '100000'
      if waf_eq(waf, v3_7, '3_7', t3_1, '3_1', 'GET', false) then
        if waf_regex(waf, v3_4, '3_4', t3_1, '3_1', [=[\/\?-?\d+=-?\d+]=],
                     false, nil, false) then
          waf_activate(waf, rulefile)
          waf_msg(waf, 'DataStore Attack')
          waf_deny(waf, rulefile)
        end
      end
    end

The resulting code is hard to read because it's essentially the WAF's assembly language and has been automatically generated. The WAF runtime implements functions like waf_begins, waf_eq, and waf_regex that are used to perform rule matching. Those functions themselves are highly optimized.

The overall goal was to get the median WAF block/allow decision made in <1ms when running in the real world.

The optimization in the WAF runtime comes from examining the WAF's performance under a test harness with line-level timing information and from running the WAF in CloudFlare's network with very detailed systemtap-based instrumentation.

To get line-level information when running in the test suite we wrote a small line-level code profiler which we use when running the WAF core code against a test set of requests. The profiler, called lulip, is an open source project. It outputs information about which lines were called the most frequently and which consumed the most execution time.
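The general idea behind a line-level profiler can be sketched with Lua's standard debug hooks. This is not lulip's implementation, just a minimal illustration that assumes `debug.sethook` line events and `os.clock` are precise enough for the purpose; the `profile` function and the layout of its result are hypothetical. Under LuaJIT, line hooks may not fire for JIT-compiled code, which a real tool has to account for.

```lua
-- Run fn with a line hook installed and return per-line statistics:
-- stats["source:line"] = { count = times reached, elapsed = seconds }
local function profile(fn)
  local stats = {}
  local clock = os.clock
  local last_key, last_time

  local function hook(_, line)
    local now = clock()
    if last_key then
      -- Charge the time since the previous line event to the previous line.
      local prev = stats[last_key]
      prev.elapsed = prev.elapsed + (now - last_time)
    end
    local info = debug.getinfo(2, "S") -- level 2 is the function being run
    local key = info.short_src .. ":" .. line
    local entry = stats[key]
    if not entry then
      entry = { count = 0, elapsed = 0 }
      stats[key] = entry
    end
    entry.count = entry.count + 1
    last_key, last_time = key, clock()
  end

  debug.sethook(hook, "l")
  local ok, err = pcall(fn)
  debug.sethook() -- remove the hook again
  if not ok then
    error(err, 0)
  end
  return stats
end
```

Sorting the returned table by `elapsed` or by `count` gives the two views described above: which lines consumed the most time and which were executed most often.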

For example, here's a simplified version of output from a test:

| file:line | count | elapsed (ms) | line |
|---|---|---|---|
| wr.lua:1129 | 2 | 822.455 | `hash = ngx_sha1_bin(value)` |
| wr.lua:1172 | 428 | 470.849 | `captures, err = ngx_re_match(v, p)` |
| wr.lua:1197 | 3762 | 207.487 | `x = string_find(v, f)` |
| wr.lua:212 | 157 | 154.386 | `string_gsub(v, "//([^/]+)//", "%1")` |
| wr.lua:1196 | 3788 | 87.475 | `for i=1,g() do` |
| wr.lua:1158 | 1563 | 52.906 | `if not f() then` |

It shows that ngx_sha1_bin (which is actually a local Lua reference to the ngx.sha1_bin function in nginx Lua) was called only twice but took 822ms to run. The next most expensive line was line 1172, which took 471ms in total but was called 428 times. Using this detailed information we were able to optimize specific hot spots of code.
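Two generic techniques that apply to hot spots like these are local references to library functions and memoization of expensive results. The sketch below is illustrative only and does not describe how this particular hot spot was fixed: `expensive` is a hypothetical stand-in for a costly call such as a hash, and `memoize` is a generic helper.

```lua
-- Local reference to a library function: avoids a global table lookup per call.
local string_rep = string.rep

-- Hypothetical stand-in for an expensive call; counts how often it really runs.
local calls = 0
local function expensive(value)
  calls = calls + 1
  return string_rep(value, 2)
end

-- Memoize: compute each distinct input once and reuse the stored result.
local function memoize(fn)
  local cache = {}
  return function(value)
    local result = cache[value]
    if result == nil then
      result = fn(value)
      cache[value] = result
    end
    return result
  end
end

local cached_expensive = memoize(expensive)
```

Calling `cached_expensive` repeatedly with the same argument runs `expensive` only the first time; later calls are a single table lookup.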

Information from systemtap or a stack trace is fed into our own pastebin, which parses it and automatically produces a flame graph showing where the code is running. Hovering over any segment shows the percentage of time spent in that part of the code.

Early on in the optimization of the WAF the flame graph quickly showed that extensive use of closures was causing slowness because of their cost in LuaJIT. A change to the compiler removed their use.
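The source does not show the closure-heavy code that was generated originally, so the following is only a hypothetical before-and-after pair illustrating the kind of change involved. The first form allocates a new function object on every call; the second passes the same data as an ordinary argument to a single shared function.

```lua
-- Closure-based form: builds a new function object whenever a matcher is needed.
local function make_prefix_matcher(prefix)
  return function(value)
    return value:sub(1, #prefix) == prefix
  end
end

local function check_with_closure(value)
  local matches = make_prefix_matcher("DataStore/") -- allocates a closure per call
  return matches(value)
end

-- Closure-free form: one plain function, with the prefix passed as an argument.
local function has_prefix(value, prefix)
  return value:sub(1, #prefix) == prefix
end

local function check_without_closure(value)
  return has_prefix(value, "DataStore/")
end
```

Both functions return the same results; only the amount of work done per call differs.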

Another view generated from the same information shows the total time spent within a function (ignoring any functions it itself calls). This enables quick identification of hot functions. Here it's easy to see that regular expression processing and string matching are the most expensive operations (which isn't surprising given that that's what the WAF does, mostly).

Examining these traces we decided that it would be worthwhile sponsoring improvements to the LuaJIT open source project.

The key to optimizing the WAF came from two things: repeatable test data and tools for inspecting the code as it ran. The combination of flame graphs and lulip meant that it was possible to make huge leaps in WAF performance by seeing precisely where time was spent. The use of a rule compiler meant that optimizations could be made rapidly to all the rules as needed. There's no point guessing what's slow: measure it!

The resulting code makes extensive use of local variables (many of which are automatically generated by the compiler) and memoization. We also use nginx Lua's cache of loaded Lua code to speed up loading of custom rules and a two-stage in-memory cache that uses lua_shared_dict and memcached for additional loading speed. And our globally distributed data store means that new rules can be rolled out in seconds.
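A two-stage lookup of this kind can be sketched as follows. Plain Lua tables stand in for both stages so the code runs anywhere; in production the first stage would be a lua_shared_dict and the second memcached, whose client API differs from what is shown here. The names `new_store`, `lookup` and `load_from_source` are hypothetical.

```lua
-- A trivial key/value store used as a stand-in for each cache stage.
local function new_store()
  local data = {}
  return {
    get = function(_, key) return data[key] end,
    set = function(_, key, value) data[key] = value end,
  }
end

-- Look in the fast stage, then the slow stage, then fall back to the source.
-- Returns the value and the name of the stage that supplied it.
local function lookup(key, fast, slow, load_from_source)
  local value = fast:get(key)
  if value ~= nil then
    return value, "fast"
  end
  local origin = "slow"
  value = slow:get(key)
  if value == nil then
    value = load_from_source(key) -- hypothetical fetch from the rule store
    slow:set(key, value)
    origin = "source"
  end
  fast:set(key, value) -- promote so the next lookup stays local
  return value, origin
end
```

The first lookup for a key falls through to the source and fills both stages; subsequent lookups are answered by the fast stage without touching the slower ones.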

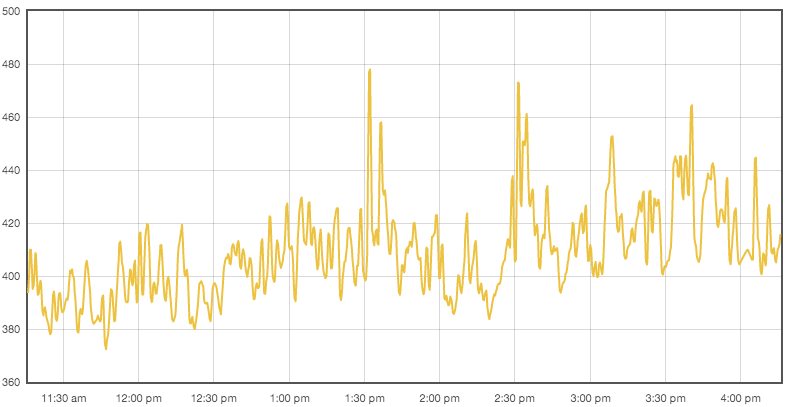

And, finally, to measure the operation of the WAF in production we have a global metrics system that gathers metrics about all parts of the CloudFlare network. Here's a chart showing a few hours of WAF operation; it shows the average time to process a request in microseconds. The WAF is running at between 380us and 480us per request, well within the goal of 1ms.

The combination of optimization in the language, the compiler and the WAF core means that we now have a very fast all-Lua WAF that gives us great flexibility and runs within the core of nginx. This is yet another project that points to Lua being an excellent embedded language in which to write the sorts of scalable logic that CloudFlare needs.